Introduction to Single Cell RNA-seq

Overview

Teaching: 45 min

Exercises: 10 minQuestions

What is single cell RNA-seq?

What is the difference between bulk RNA-seq and single cell RNA-seq?

How do I choose between bulk RNA-seq and single cell RNA-seq

Objectives

Describe the overall experimental process of generating single-cell transcriptomes.

Describe instances where bulk vs. single cell RNA-Seq methods are uniquely appropriate.

Brief overview of single cell transcriptomics technology

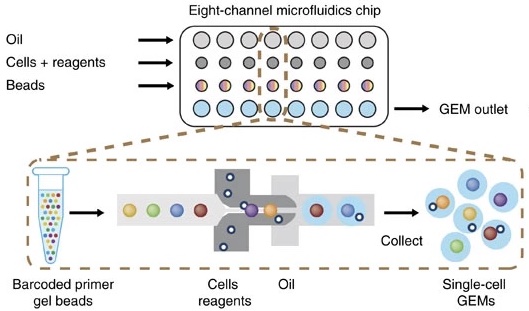

Single cell RNA-sequencing (scRNA-Seq) is a method of quantifying transcript expression levels in individual cells. scRNA-Seq technology can take on many different forms and this area of research is rapidly evolving. In 2022, the most widely used systems for performing scRNA-Seq involve separating cells and introducing them into a microfluidic system which performs the chemistry on each cell individually (droplet-based scRNA-Seq).

In this workshop we will primarily focus on the 10X Genomics technology. 10X Genomics is a market leader in the single cell space and was among the first technologies that made it feasible to profile thousands of cells simultaneously. Single cell technology is changing rapidly and it is not clear whether any other companies will be able to successfully challenge 10X’s dominance in this space.

The steps in droplet scRNA-Seq are:

- Cell isolation:

- If the cells are part of a tissue, the cells are disaggregated using collagenase or other reagents. The specifics of this protocol can vary greatly due to differences between tissues that are biological in nature. If the cells are in culture or suspension, they may be used as-is.

- Assess cell viability.

- If scRNA-Seq is being performed on fresh tissue, the cells are usually checked for viability. We want “happy” cells loaded into the machine. We might hope for >90% viable and set a minimum threshold of >70%, although these numbers can vary greatly depending on the experiment.

- Droplet formation inside instrument:

- Using a microfluidic system, each cell is suspended in a nanoliter-size droplet along with a barcoded primer bead. The cells are kept separate from each other in an oil/water emulsion.

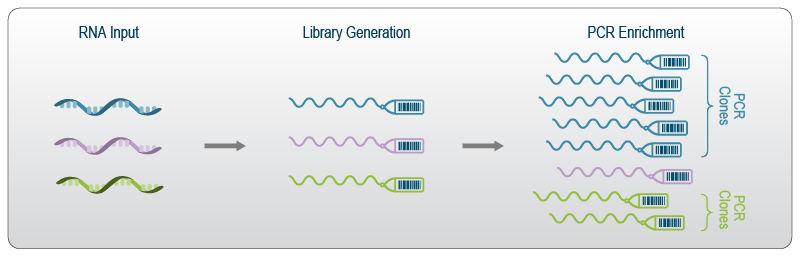

- Cell lysis, generating complementary DNA (cDNA):

- The cells are lysed in each droplet. Each cell was already encapsulated with a barcoded primer bead which has a primer specific to that cell. Often a poly-d(T) primer is used to prime the poly(A) tail of mRNA. Complementary DNA is transcribed and correct primers are added for Illumina sequencing.

- Library generation:

- Oil is removed to destroy droplets and homogenize the mixture. cDNA is amplified by PCR. The cDNA is sequenced on any Illumina machine. Sequencing should be paired-end – one read contains cell and molecule barcodes, while the other read contains the target transcript that was captured. We will discuss the sequence files in more detail in a later lesson. Any service provider who generates scRNA-Seq data for you should know how to properly set up in Illumina run to get you the data you need.

What is scRNA-Seq useful for?

Single cell RNA-Seq is a new technology and its uses are limited only by your imagination! A few examples of problems that have been addressed using scRNA-Seq include:

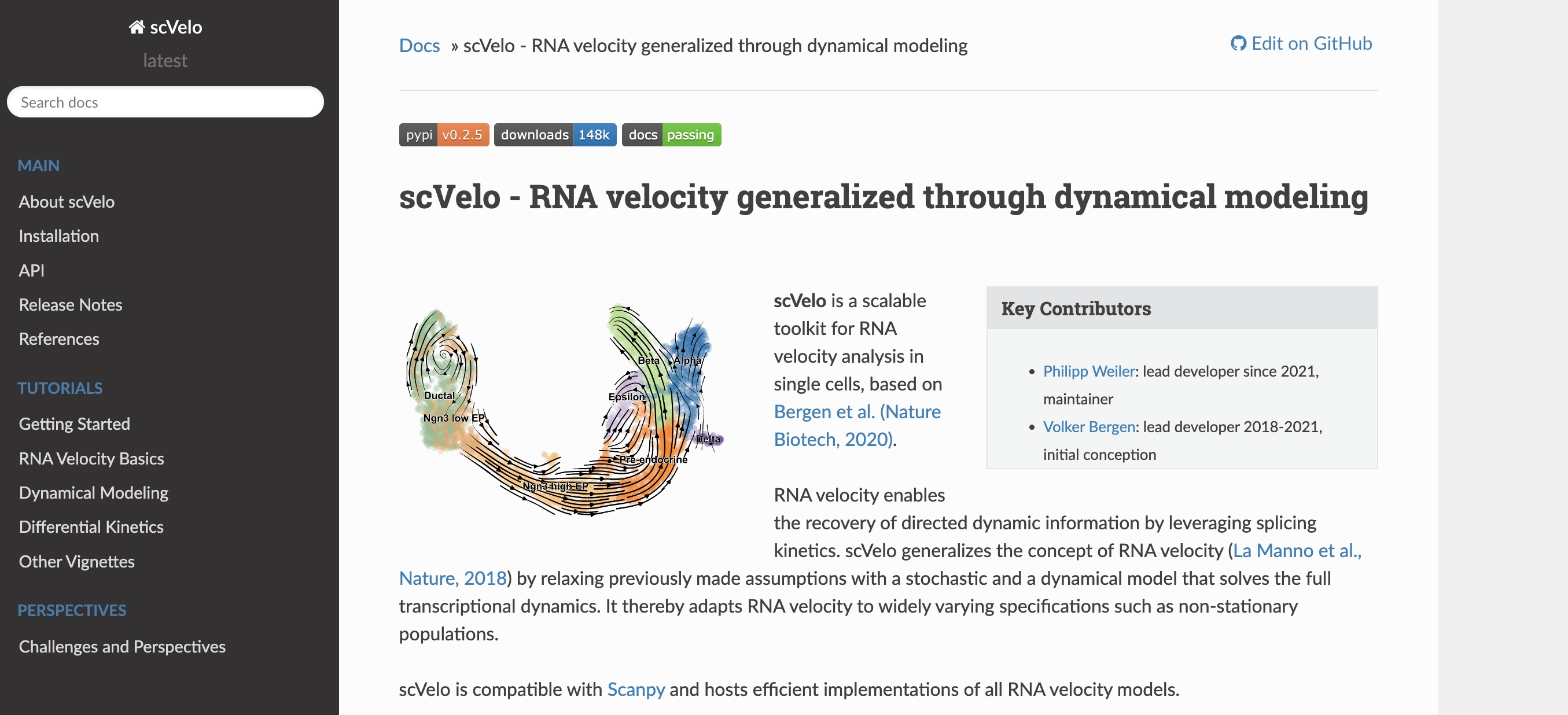

- developmental studies & studies of cellular trajectories.

- detailed tissue atlases.

- characterization of tumor clonality.

- definition of cell-type specific transcriptional responses (e.g. T-cell response to infection)

- profiling of changes in cell state (i.e. homeostasis vs. response state)

- a variety of different types of CRISPR screens

Comparing and contrasting scRNA-Seq with bulk RNA-Seq

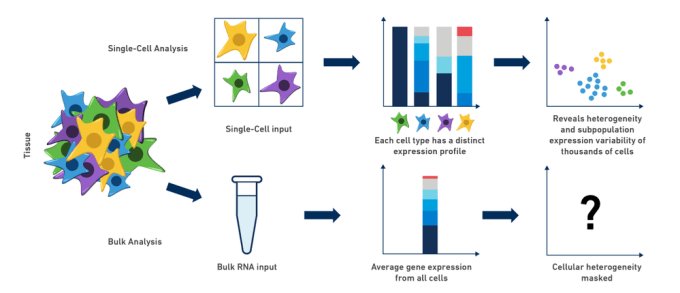

Bulk RNA-Seq and single cell RNA-Seq are related in that they both assess transcription levels by sequencing short reads, but these two technologies have a variety of differences. Neither technology is always better. The approach that one might use should depend upon the information one hopes to gather.

Consider the following points when assessing the differences between the technologies and choosing which to utilize for your own experiment:

- Tissues are heterogeneous mixtures of diverse cell types. Bulk RNA-Seq data consists of average measures of transcripts expressed across many different cell types, while scRNA-Seq data is cell-type resolved.

- Bulk RNA-Seq data may not be able to distinguish between changes in gene expression versus changes in tissue cell composition.

- Bulk RNA-Seq allows for much higher sequencing coverage for each gene and often captures more total genes.

- Bulk RNA-Seq allows for better isoform detection due to the higher sequencing depth and relatively uniform coverage across transcripts (vs. a typical 3’ bias in scRNA-Seq).

- Genes without poly-A tail (e.g. some noncoding RNAs) might not be detected in scRNA-Seq, but can be reliably assessed using bulk RNA-Seq.

https://www.10xgenomics.com/blog/single-cell-rna-seq-an-introductory-overview-and-tools-for-getting-started

https://www.10xgenomics.com/blog/single-cell-rna-seq-an-introductory-overview-and-tools-for-getting-started

Challenge

For each of these scenarios, choose between using bulk RNA-Seq and scRNA-Seq to address your problem.

Differentiation of embryonic stem cells to another cell type

Solution

You would likely find single cell RNA-Seq most powerful in this situation since the cells are differentiating along a continuous transcriptional gradient.

Studying aging with a specific focus on the senescence-involved (e.g. ref) gene Cdkn2a

Solution

Since you are interested in the expression of a single gene (Cdkn2a), bulk RNA-seq may be a better choice because of the greater sequencing depth and the potenial to identify isoforms.

Studying variation in vaccine response by profiling peripheral blood mononuclear cells (PBMCs) –

Solution

Since you are likely interested in gene expression within specific cell types, single cell RNA-seq may be a better choice because you will be able to quantify cell proportions and cell-specific gene expression.

Doing functional genomics in a non-model species

Solution

Non-model organsms may not have a well-developed reference genome or transcript annotation. Thus, you may need to use tools which perform de-novo transcript assembly and then align your reads to that custom transcriptome. De-novo transcript assembly requires greater sequencing depth, which single-cell RNA-Seq may not provide. Therefore we would recommend using bulk RNA-Seq in this situation.

Studying micro RNAs

Solution

MicroRNAs are not currently assayed by most scRNA-Seq technologies. Thus bulk RNA-Seq, with an enrichment for small RNAs, would be the better choice here.

Performing gene expression quantitative trait locus (eQTL) mapping

Solution

You may want good estimates of transcript abundance in your tissue of interest, so bulk RNA-Seq may be a good choice here. However, you may be able to aggregate scRNA-Seq expression for each cell type and perform eQTL mapping. Therefore both technologies could be informative!

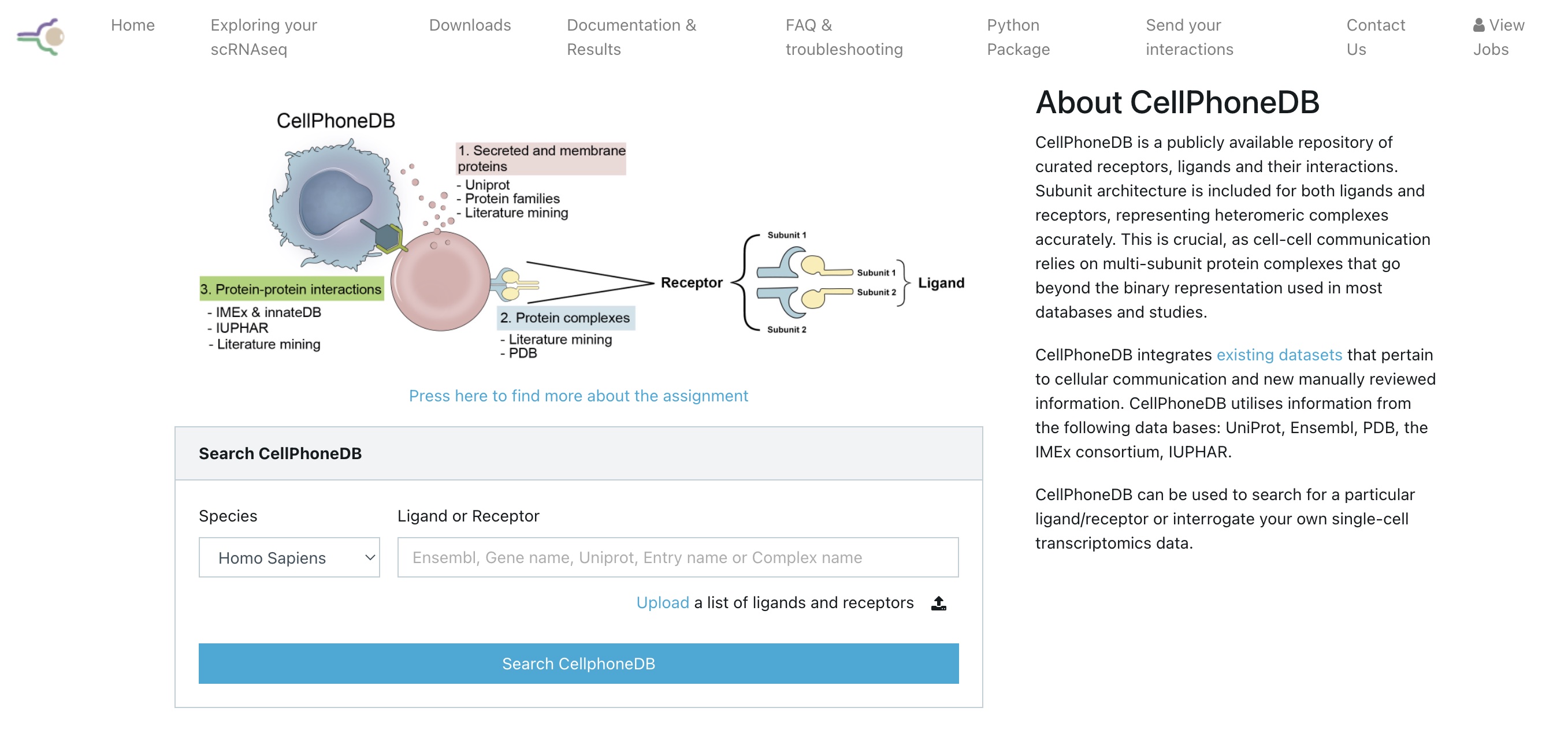

Single cell data modalities

There are several different modalities by which one can gather data on the molecular properties of single cells. 10X Genomics currently offers the following reliable assays:

- RNA-seq - assess gene expression in single cells. A sub-group of single cell gene expression is single nucleus gene expression, which is often used on frozen samples or for tissues where preparing a single cell suspension is difficult or impossible (e.g. brain).

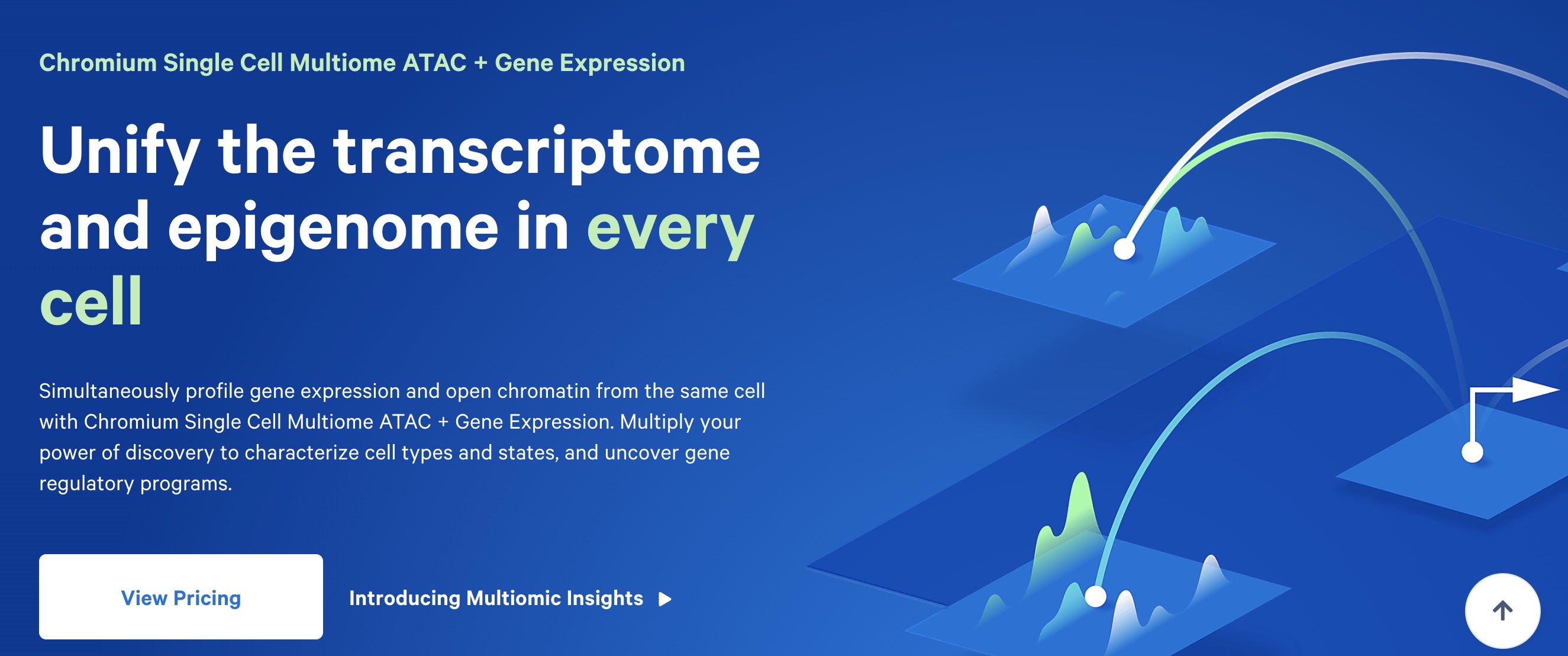

- ATAC-seq - assess chromatin accessibility in single cells

- “Multiome” - RNA + ATAC in the same single cells

- Immune repertoire profiling - assess clonality and antigen specificity of adaptive immune cells (B and T cells)

- CITE-Seq - assess cell surface protein levels

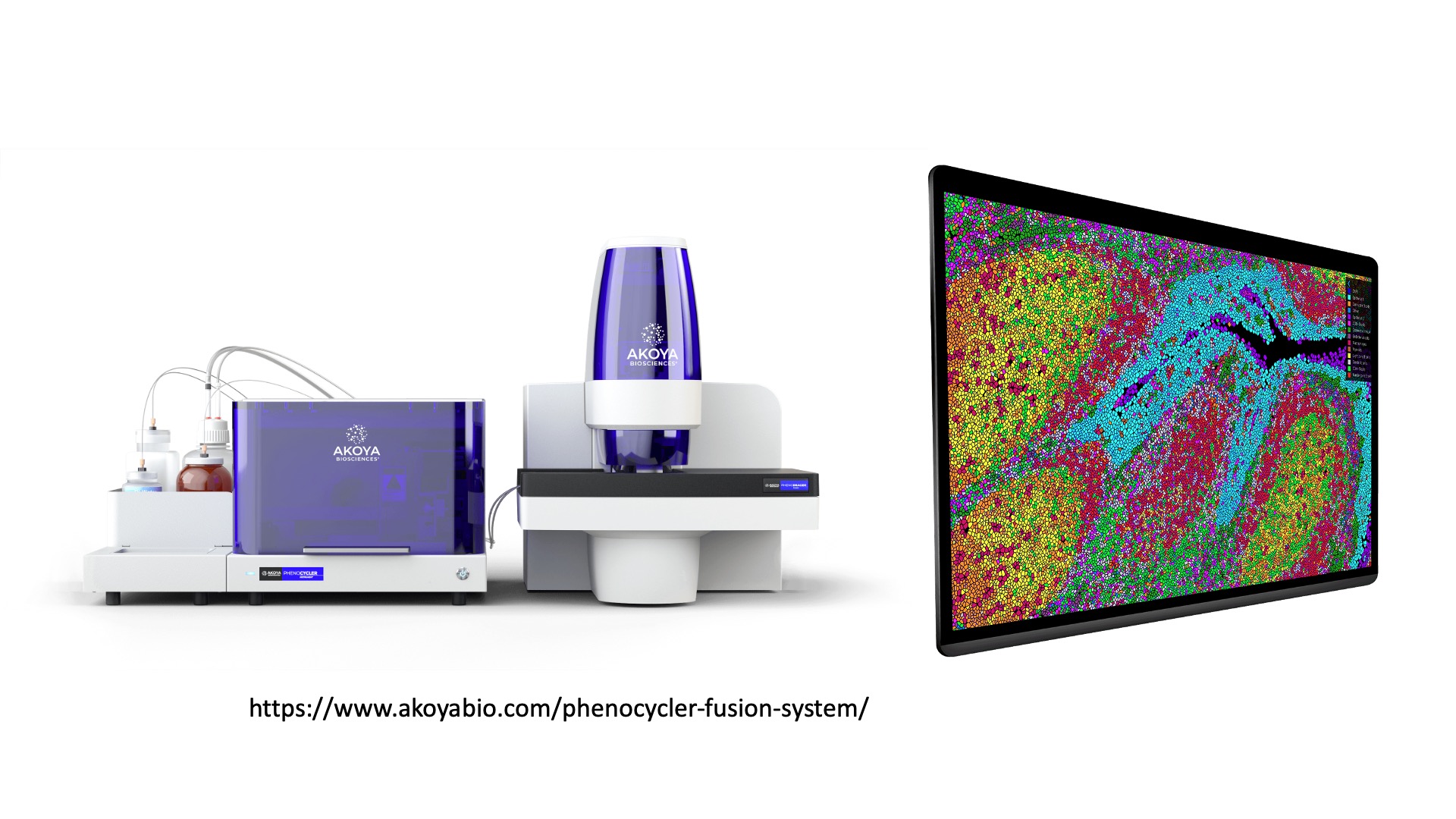

- Spatial transcriptomics/proteomics - assess gene expression and/or protein abundance with near-single cell resolution

Focus of this course: 10X Genomics mouse scRNA-Seq

While there are several commercially available scRNA-seq technologies, this course will focus on data generated by the 10X Genomics platform. This technology is widely used at JAX, and it is flexible, reliable, and relatively cost-efficient. We will focus on a mouse data set, although most of the techniques that you will learn in this workshop apply equally well to other species. Each data modality has its own strengths and weaknesses; in this course we will focus on transcriptomics.

Brief overview of instrumentation and library preparation choices available from 10X Genomics

10X Genomics offers a variety of options for profiling single cells. Here we give a brief overview of some of their offerings to illustrate what is available.

To profile gene expression, users can choose from several options:

- 3’ gene expression – the “usual” option, amplifies from the 3’ end of transcripts

- 5’ gene expression – another option for profiling gene expression that captures from the 5’ end of the transcript. See this link for some information on 3’ vs 5’ gene expression

- “targeted” gene expression – focus on a smaller number of genes that are of particular interest

One can also choose to profile gene expression on different instruments. The “workhorse” 10X Chromium machine is used for profiling gene expression of up to eight samples totaling 25k-100k cells. The newer Chromium X is a higher throughput instrument which can profile up to 16 samples and up to one million cells. There are also variations in the kits that can be purchased to perform library preparation. In general 10X Genomics does a fairly good job of continuously improving the chemistry such that the data quality continues to improve. More information is available from 10X Genomics.

10X Genomics also produces a suite of other instrumentation for applications such as spatial transcriptomics (Visium) or in situ profiling (Xenium), which we will not cover in this course.

Key Points

Single cell methods excel in defining cellular heterogeneity and profiling cells differentiating along a trajectory.

Single cell RNA-Seq data is sparse and cannot be analyzed using standard bulk RNA-Seq approaches.

Bulk transcriptomics provides a more in-depth portrait of tissue gene expression, but scRNA-Seq allows you to distinguish between changes in cell composition vs gene expression.

Different experimental questions can be investigated using different single-cell sequencing modalities.

Experimental Considerations

Overview

Teaching: 45 min

Exercises: 5 minQuestions

How do I design a rigorous and reproducible single cell RNAseq experiment?

Objectives

Understand the importance of biological replication for rigor and reproducibility.

Understand how one could pool biological specimens for scRNA-Seq.

Understand why confounding experimental batch with the variable of interest makes it impossible to disentangle the two.

Understand the different data modalities in single-cell sequencing and be able to select a modality to answer an experimental question.

In this lesson we will discuss several key factors you should keep in mind when designing and executing your single cell study. Single cell transcriptomics is in many ways more customizable than bulk transcriptomics. This can be an advantage but can also complicate study design.

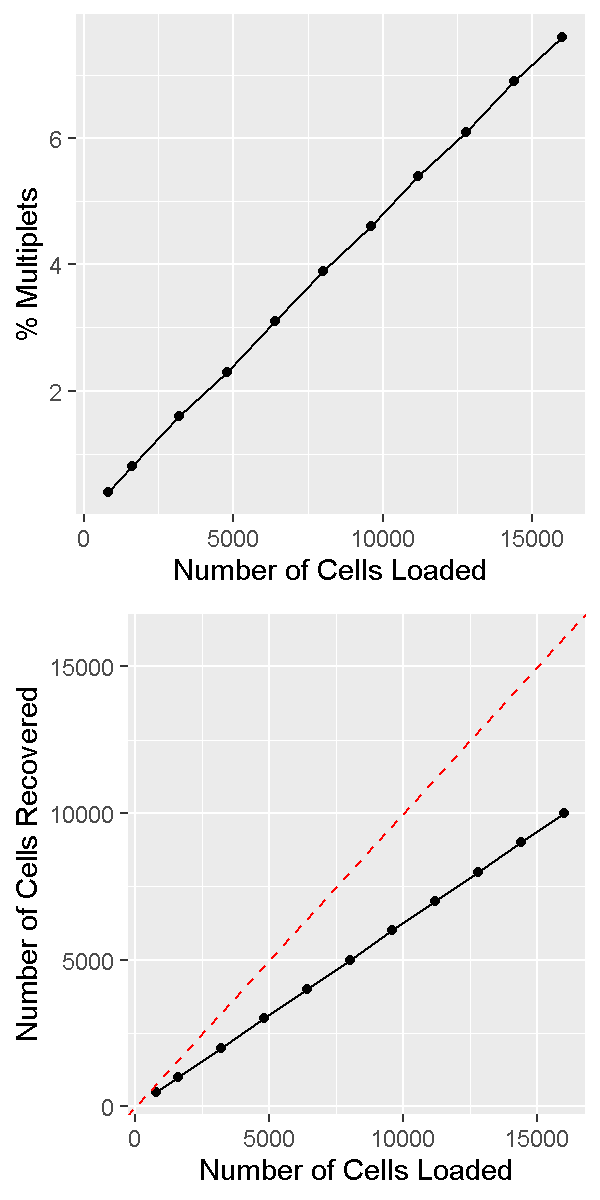

To begin, we note that you should be aware of the distinction between cells loaded vs cells captured. In a 10X scRNA-Seq study you prepare a cell suspension and decide how many cells should be loaded onto the 10X Chromium instrument. The cells flow through the microfluidics into oil-water emulsion droplets, but not all cells are captured. Typically somewhere between 50-70% of cells are captured. 10X provides estimates for the capture rates of samples profiled using their 3’ gene expression kit here.

Doublets/multiplets - a ubiquitous property of droplet single cell assays

In droplet single cell transcriptomics, a key reason the technology “works” is because most droplets contain at most one cell. After all, if droplets contained more than one cell the technology would no longer be profiling single cells! However, due to the nature of the cell loading of droplets (which can be modeled accurately using Poisson statistics), even in a perfect experiment it is inevitable that some droplets will contain more than one cell. These are called multiplets – or doublets, since the vast majority of multiple-cell droplets contain two cells. Contrast with singlets, which contain only one cell.

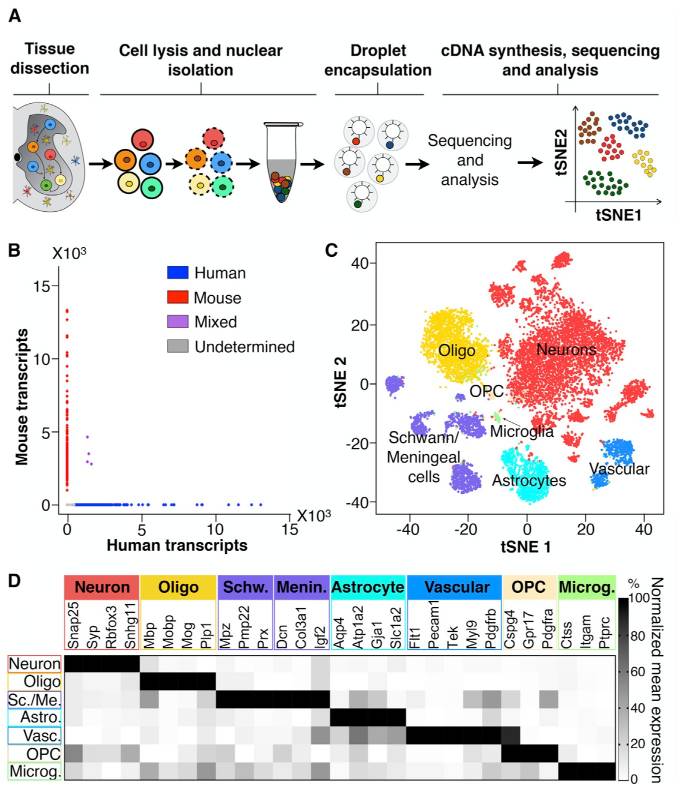

There are two considerations we might keep in mind when thinking about singlets vs. doublets. First, not every droplet contains exactly one gel bead. The initial paper describing the 10X technology, published in 2017, showed the following histogram:

Therefore the large majority of droplets contain zero or one gel beads. This is good. If a droplet contained, say, two gel beads, then even if a single cell was captured in that droplet it would appear to be two cells because the transcripts from that cell would be tagged with one of two cell barcodes.

The second consideration we might keep in mind when thinking about singlets vs. doublets is that it can be hard to obtain a ground truth. If, say, two cells of the same cell type were captured in a single droplet, how would we know there were two? It might be easier if two cells of very different cell types were together, since those cells would likely express very different genes. One way we can look for doublets is to run a so-called “barnyard” experiment where we mix cells from multiple species. For example, Sathyamurthy et al. 2018 link used single nucleus transcriptomics to explore the cellular heterogeneity of the mouse spinal cord. They loaded mouse and human cells together and showed that only a small number of droplets appeared to contain both a human and a mouse cell.

It is important to realize that the doublet rate is not fixed but rather depends on the number of cells loaded into the 10X Chromium machine. The more cells are loaded, the higher the doublet rate. The figure below shows the relationship between the number of cells loaded and the doublet rate. This figure is derived from the 10X User Guide for their Chromium Next GEM Single Cell 3ʹ Reagent Kits v3.1 product, but the basic trends should hold across other versions of this product as well.

In a future lesson we will discuss software options for trying to identify and remove doublets prior to drawing biological conclusions from data. Unfortunately this process is not always straightforward and we are usually forced to accept the reality that we likely cannot remove all doublets from our data.

How to decide on the parameters of the experiment

There are a number of parameters in a typical scRNA-Seq experiment that are customizable according to the particular details of each study.

Number of cells

A typical number of captured cells (target cell number) would be 6,000-8,000 cells on a standard 10X Chromium, or 16,000-20,000 cells on the higher throughput Chromium X. At these cell capture numbers, the doublet rates are expected to stay relatively low (the JAX Single Cell Biology Laboratory estimates empirical doublet rates of 0.9% and 0.4% per 1,000 cells for Chromium and Chromium X, respectively). At these doublet rates we would expect to obtain less than 100 doublets in standard experiments. One may also choose to “overload” or “super load” the Chromium instrument. In this strategy we load more than the typical number of cells, they flow through the instrument more quickly than usual, and more droplets end up with multiple cells. Therefore our data contains more multiplets; however we also obtain more total cells. If we are in a setting where we can tolerate a higher doublet rate this may be an efficient way of profiling more cells.

Sequencing depth

There is no fixed rule for how deeply we should sequence in our scRNA-Seq experiment. Moreover, the depth of sequencing will vary significantly between cells no matter what we choose.

Nevertheless, a reasonable rule of thumb would be to sequence to a depth of approximately 50,000 - 75,000 reads per cell. If we are profiling cells that have relatively few genes expressed, such as lymphocytes, we might target a sequencing depth closer to 25,000 - 50,000 reads per cell. If we are profiling cells that express many genes, such as stem cells, we might go significantly higher, to 100,000 or even 200,000 reads per cell.

Number of samples

It is generally more economical to run at least a few scRNA-Seq samples than to run just one or two. For example, JAX’s Single Cell Biology Laboratory gives a substantial per-library discount for running 3+ samples as opposed to one or two samples. This is because the chip used to run samples through Chromium has 8 (or 16) channels which cannot be reused.

The most efficient way to organize your experiment is to design it to be run in batches of 8.

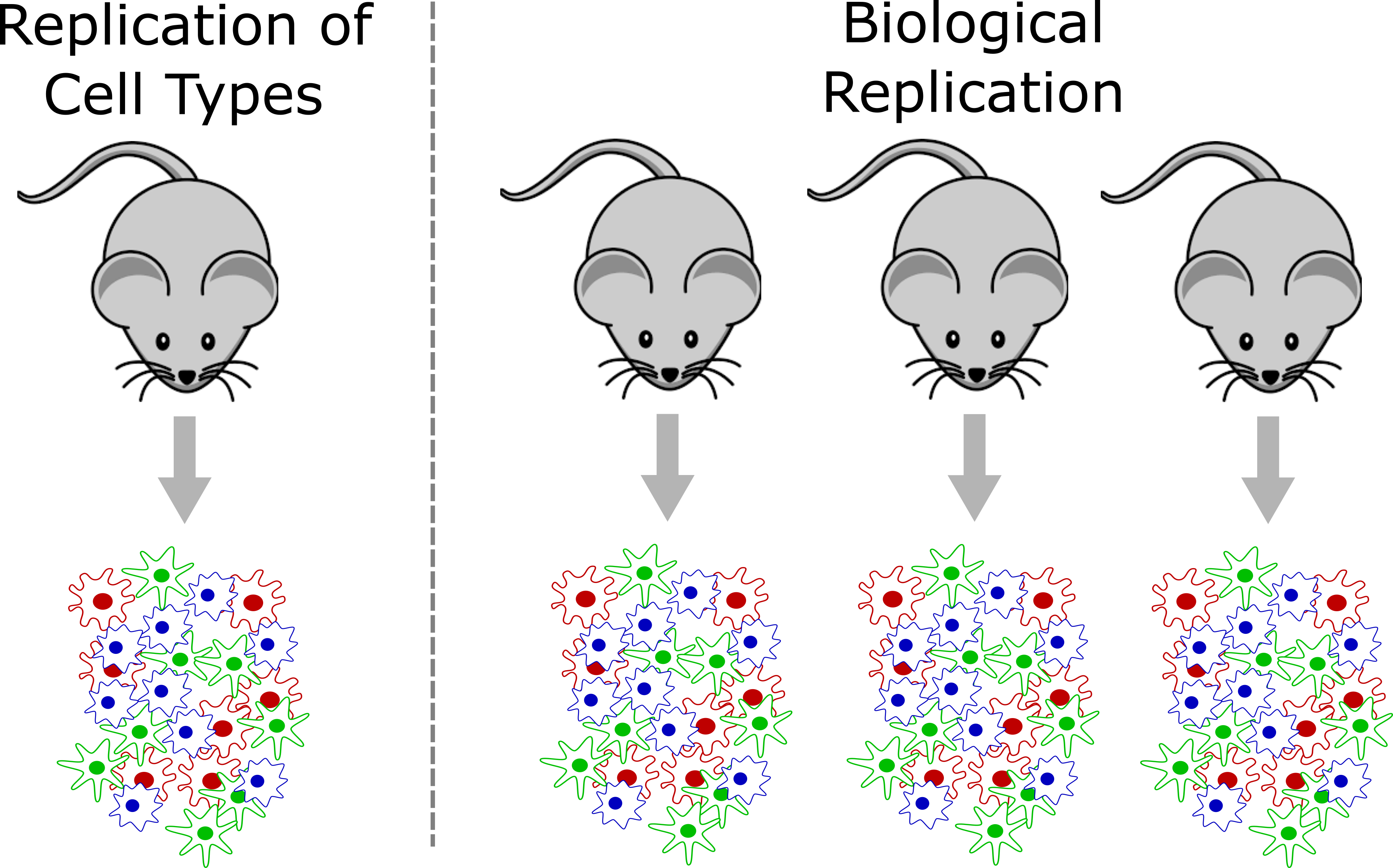

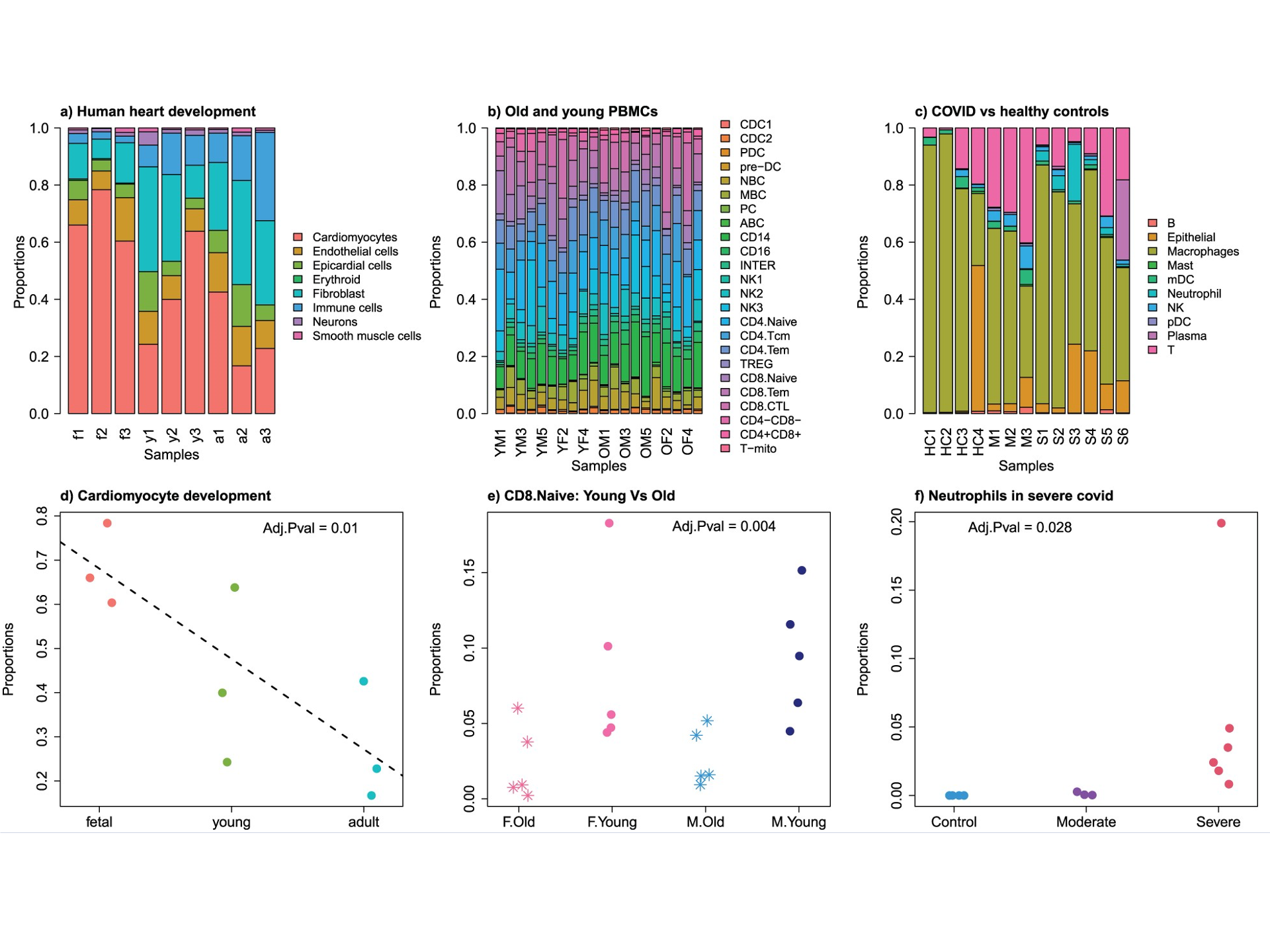

Biological replication

Proper biological replication is the cornerstone of a statistically well-powered experiment. This is recognized across nearly all of biology. In the early days of single cell RNA-Seq, the main focus of many investigations was demonstrating the technology and often biological replication was missing. As scRNA-Seq has matured, it has become increasingly important to include biological replication in order to have confidence that our findings are statistically robust.

Note that the tens of thousands of cells sequenced in a typical sample do not constitute biological replication. In order to show a biologically meaningful change in cell composition, the emergence of a particular cell subset, or difference in gene expression we need samples harvested from multiple individuals.

In a strict sense, biological replication would entail independently rearing multiple genetically identical animals, subjecting them to identical conditions, and harvesting cells in an identical manner. This is one strength of working in the mouse that we can leverage at JAX. However, there are also instances where we can obtain useful biological replication without meeting these strict requirements. For example, a disease study in humans may profile single cells collected from affected and unaffected individuals, and could provide insights as long as the groups are not systematically different. Turning to the mouse, a fine-grained time course may not require biological replication at every time point because – depending on the questions investigators wish to ask – cells from different individual mice collected at different time points might be analyzed together to attain robust results.

How many biological replicates should one collect? This question is difficult to answer with a “one size fits all” approach but we recommend an absolute minimum of three biological replicates per experimental group. More is almost always better, but there are obviously trade offs with respect to budget and the time it takes to harvest samples. There are many studies that have attempted to model the power of single cell RNA-Seq experiments, including differential gene expression and differences in cell composition. We will not discuss these studies in detail in this course, but some may be useful for your own work:

- Schmid et al. 2021. scPower accelerates and optimizes the design of multi-sample single cell transcriptomic studies. https://doi.org/10.1038/s41467-021-26779-7

- Svensson et al. 2017. Power analysis of single-cell RNA-sequencing experiments. https://doi.org/10.1038/nmeth.4220

- Davis et al. 2019. SCOPIT: sample size calculations for single-cell sequencing experiments. https://doi.org/10.1186/s12859-019-3167-9

- Zimmerman et al. 2021. Hierarchicell: an R-package for estimating power for tests of differential expression with single-cell data. https://doi.org/10.1186%2Fs12864-021-07635-w

- Vieth et al. 2017. powsimR: power analysis for bulk and single cell RNA-seq experiments. https://doi.org/10.1093/bioinformatics/btx435j

- Su et al. 2020. Simulation, power evaluation and sample size recommendation for single-cell RNA-seq. https://doi.org/10.1093/bioinformatics/btaa607

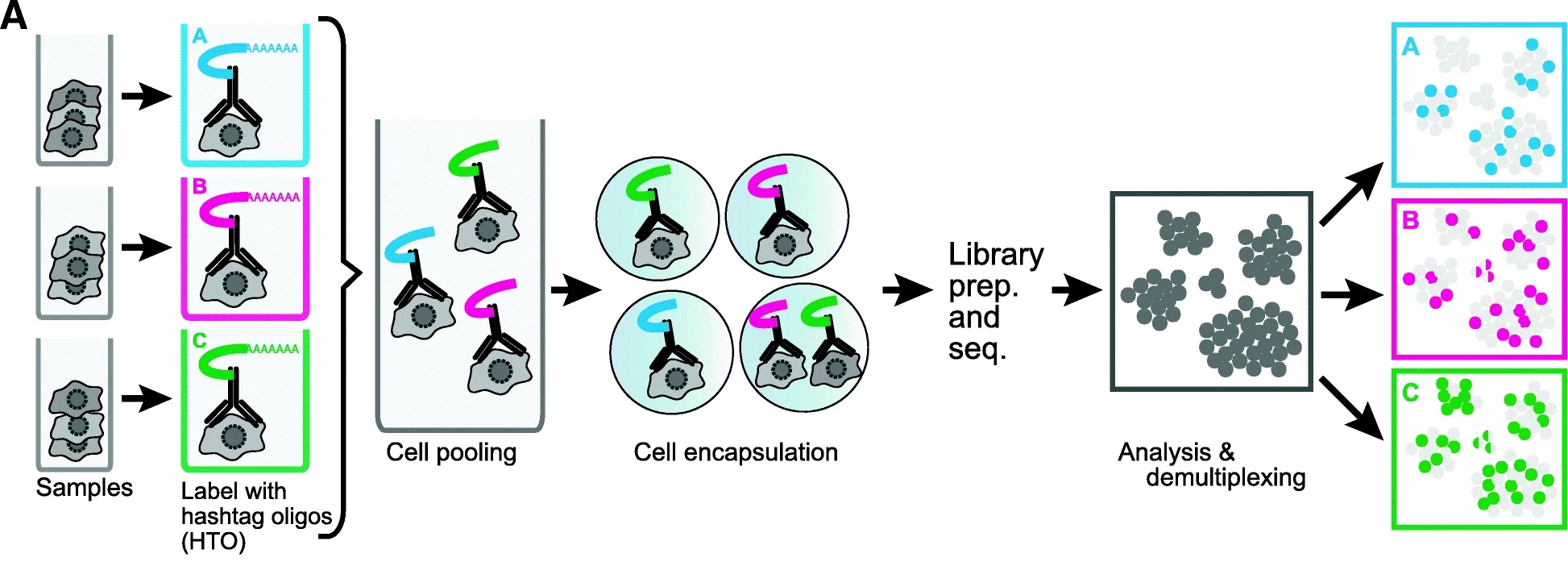

Pooling options

Given the relatively high expense of each scRNA-Seq sample and the importance of proper biological replication laid out above, the opportunity to pool samples and run them together is a powerful tool in the single cell researcher’s toolkit. In pooled single cell genomics, there are two primary methods of tracking the sample to which each individual cell belongs. First, one can attach a unique barcode (“hashtag”) to all cells of each sample, typically using an oligonucleotide-tagged antibody to a ubiquitous cell surface protein or an alternative (e.g. lipid-reactive) reagent.

(Figure from Smibert et al. 2018) This approach was pioneered by Smibert et al. The hashtag is read out in a dedicated library processing step and used to demultiplex samples based on knowledge of the pre-pooling barcoding strategy. This is the basis of the techniques known as “cell hashing”, “lipid hashing”, and the recently introduced 10X Genomics CellPlex multiplexing system. The hashtagging approach is accurate, however it is important to note that the extra experimental steps may impose obstacles. Hashtagged multiplexing requires extra sample preparation steps for barcoding, which can affect the viability and quality of cell preparations, may be less effective for certain tissues and cell types, and the increased sample handling may itself impose a significant burden in large projects.

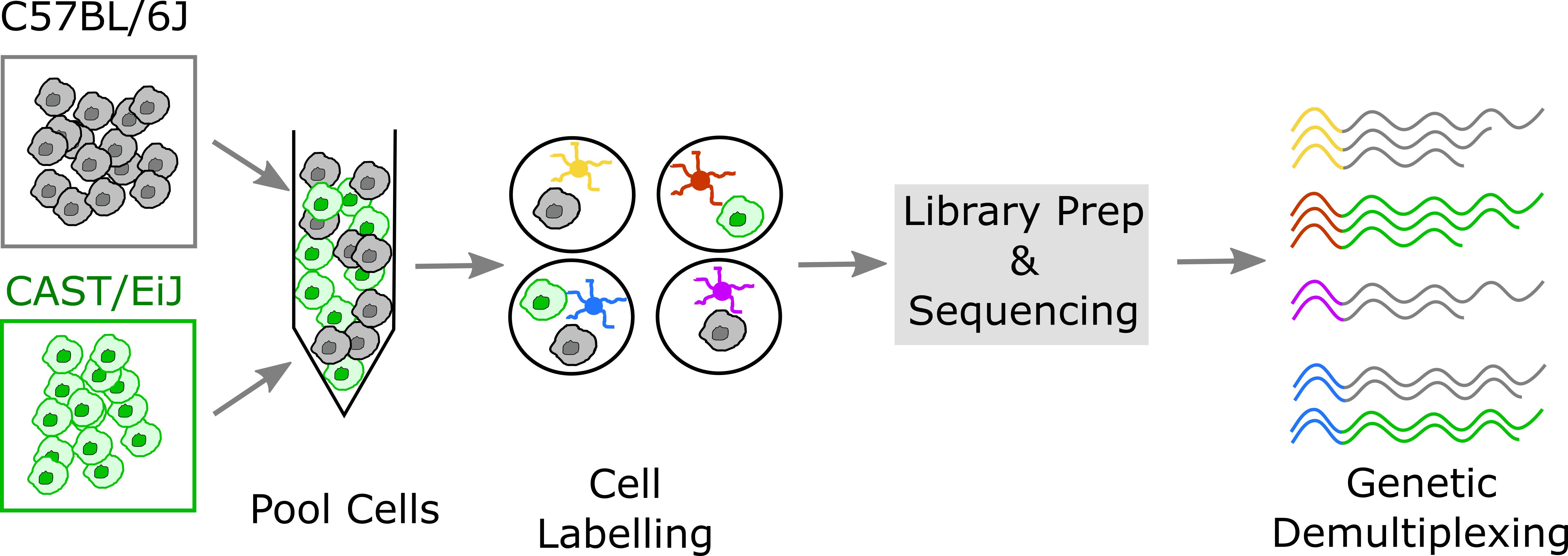

A second, alternative, approach is to use natural genetic variation as a built-in barcode and demultiplex cells using SNVs and indels unique to each sample.

This approach was pioneered by Kang et al.. In contrast to the hashtagging approach, genetic demultiplexing requires no special reagents or additional steps, and can be used for any tissue or cell type. However, the genetic demultiplexing approach requires that the samples being pooled are sufficiently genetically distinct for them to be distinguishable using transcribed genetic variation. This condition is typically met in human studies or in studies of genetically diverse mice. Of course, this approach would not suffice for a study of a single inbred mouse strain profiled under multiple conditions. One advantage of genetic demultiplexing is that we can use the genetic information to filter out any mixed background doublets, allowing us to obtain data that contains fewer doublets than we would otherwise expect.

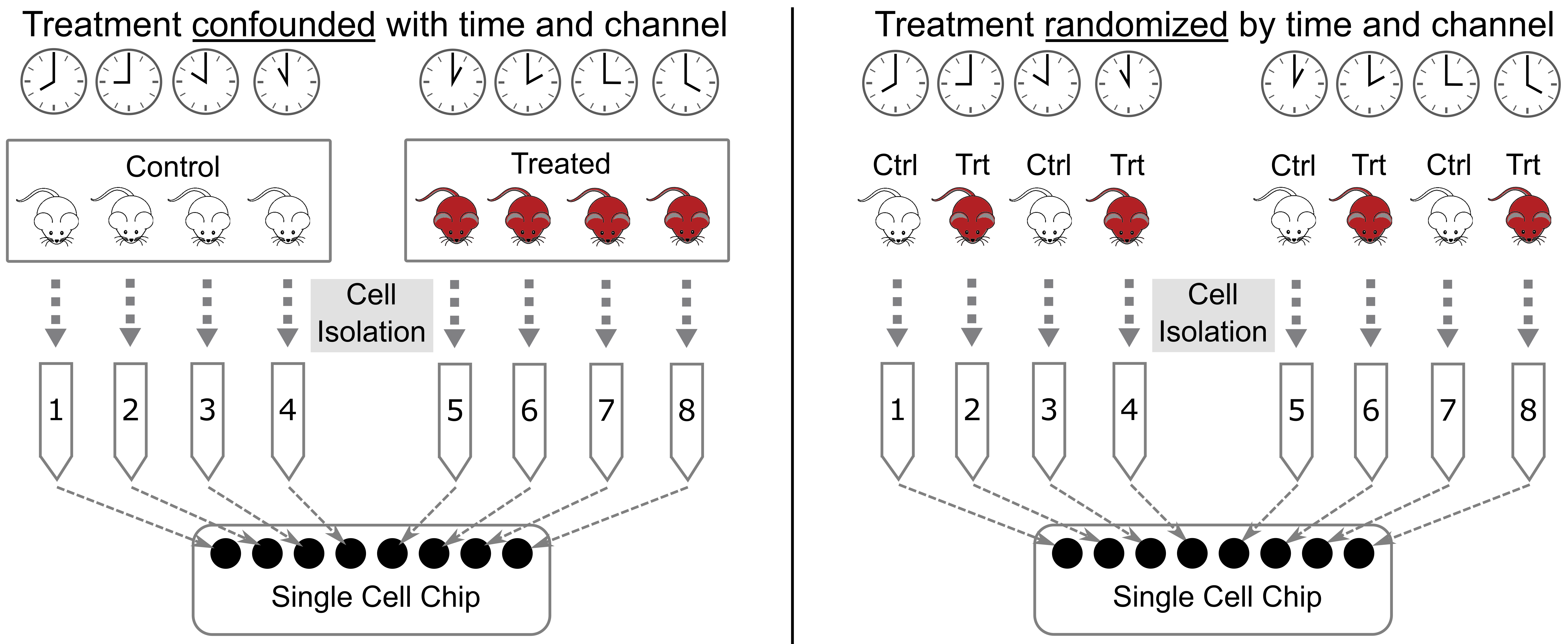

Avoid Confounding Batch with Experimental Variables

When designing an experiment, it is tempting to lay samples out in some order that is easy to remember. If you are performing a dose-response experiment, you might order your samples from the lowest dose to the highest dose. If you are assaying transcript levels in different tissues, you might sort the samples by tissue. However, if you maintain this order, you risk confounding single cell batches with your experimental batches. This is called “confounding” and it makes it impossible to statistically disentangle the batch effect from your experimental question.

Let’s look at an example, illustrated in the figure below. Suppose that you have analyzed cells from control and treated mice, which you keep in separate cages, as shown in the left panel below. At euthanasia, you might isolate cells from the mice in each cage sequentially. If you isolate cells from the control mice first, then those cells will sit longer than the treated cells before being delivered to the single cell core. Also, you might place the control cells in the first set of tubes and the treated cells in the last set of tubes. When you deliver your tubes to the core, they may be placed on the 10X instrument in the same order. This will confound holding time and instrument channel with the control and treated groups. A better design is shown in the right panel, in which treatment is not confounded with time and chip channel.

Recommended Reading

Luecken MD, Theis FJ (2019) Current best practices in single-cell RNA-seq analysis: a tutorial. Mol Syst Biol link

Andrews TS, Kiselev VY, McCarthy D, Hemberg M (2021) Tutorial: guidelines for the computational analysis of single-cell RNA sequencing data. Nat Protoc link

Key Points

Due to the high variance in single-cell data sets, a well-powered study with adequate biological replication is essential for rigor & reproducibility.

Increasing the number of cells also increases the multiplet rate.

Pooling cells using hashtagging is a useful way to reduce costs, but also stresses cells and may affect cell viability.

Pooling cells from genetically diverse individuals may allow cells to be demultiplexed using genetic variants that differ between samples.

Do not confound experimental batch with any technical aspect of the experiment, i.e. sample pooling or flow cell assignment.

Overview of scRNA-seq Data

Overview

Teaching: 90 min

Exercises: 30 minQuestions

What does single cell RNA-Seq data look like?

Objectives

Understand the types of files provided by CellRanger.

Understand the structure of files provided by CellRanger.

Describe a sparse matrix and explain why it is useful.

Read in a count matrix using Seurat.

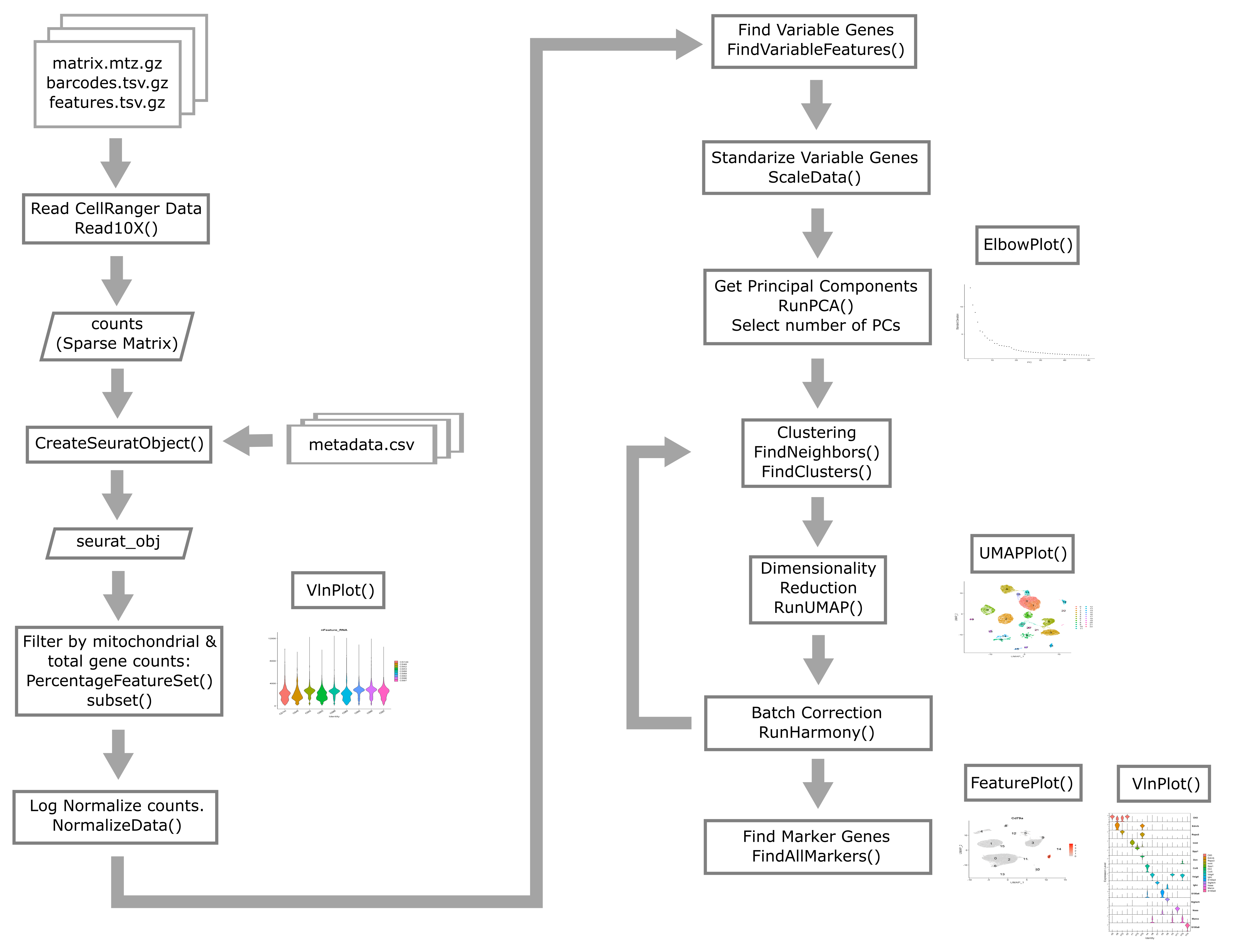

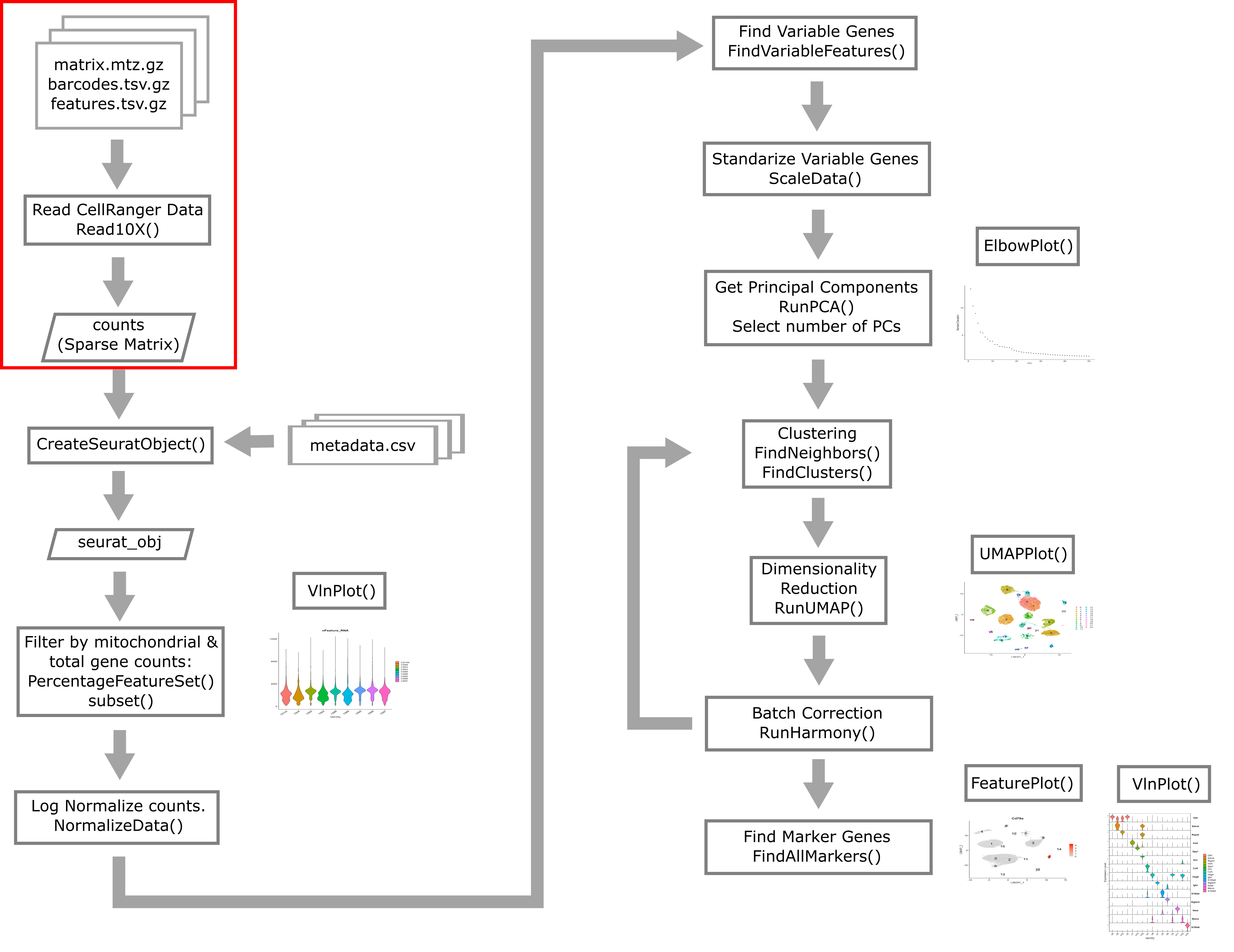

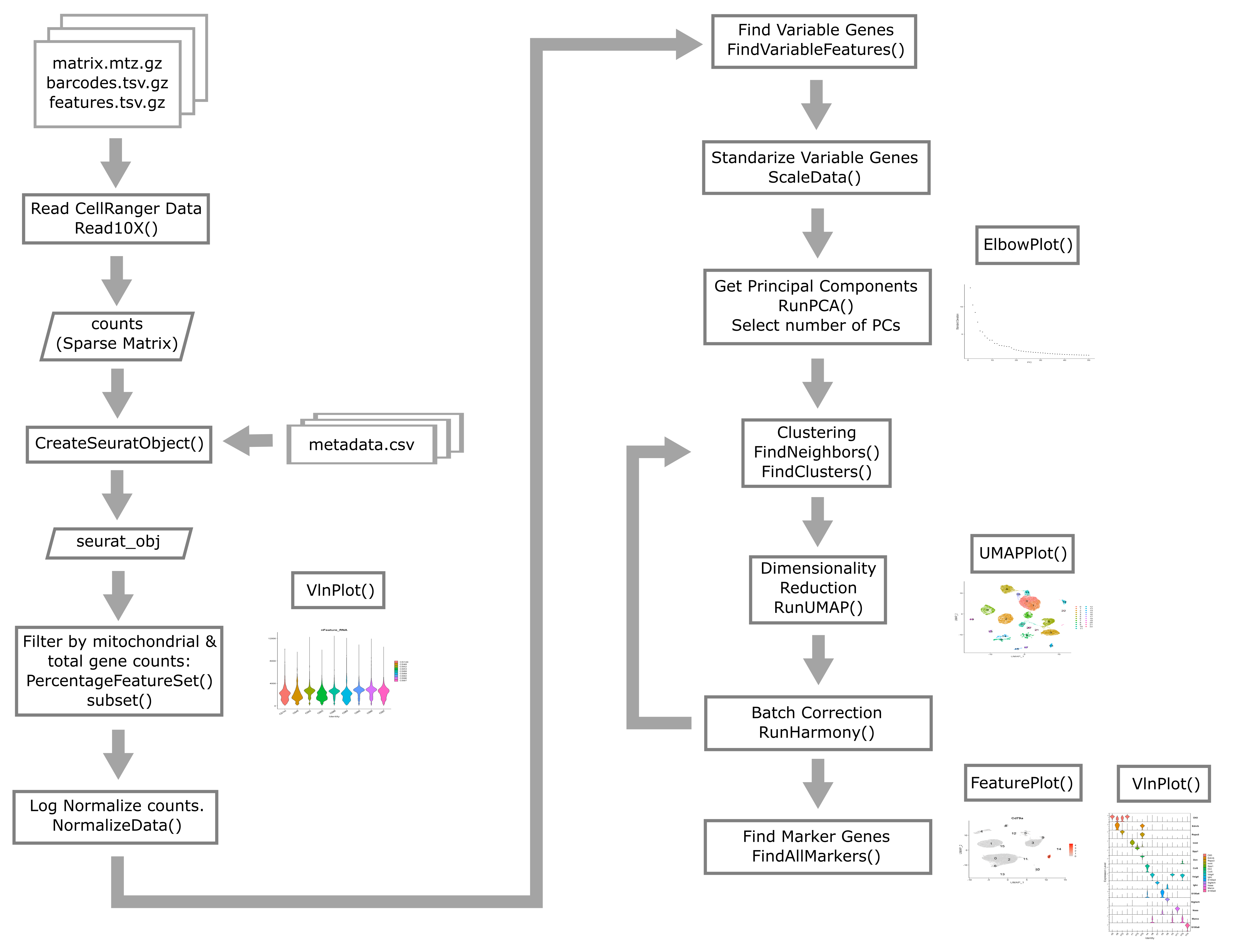

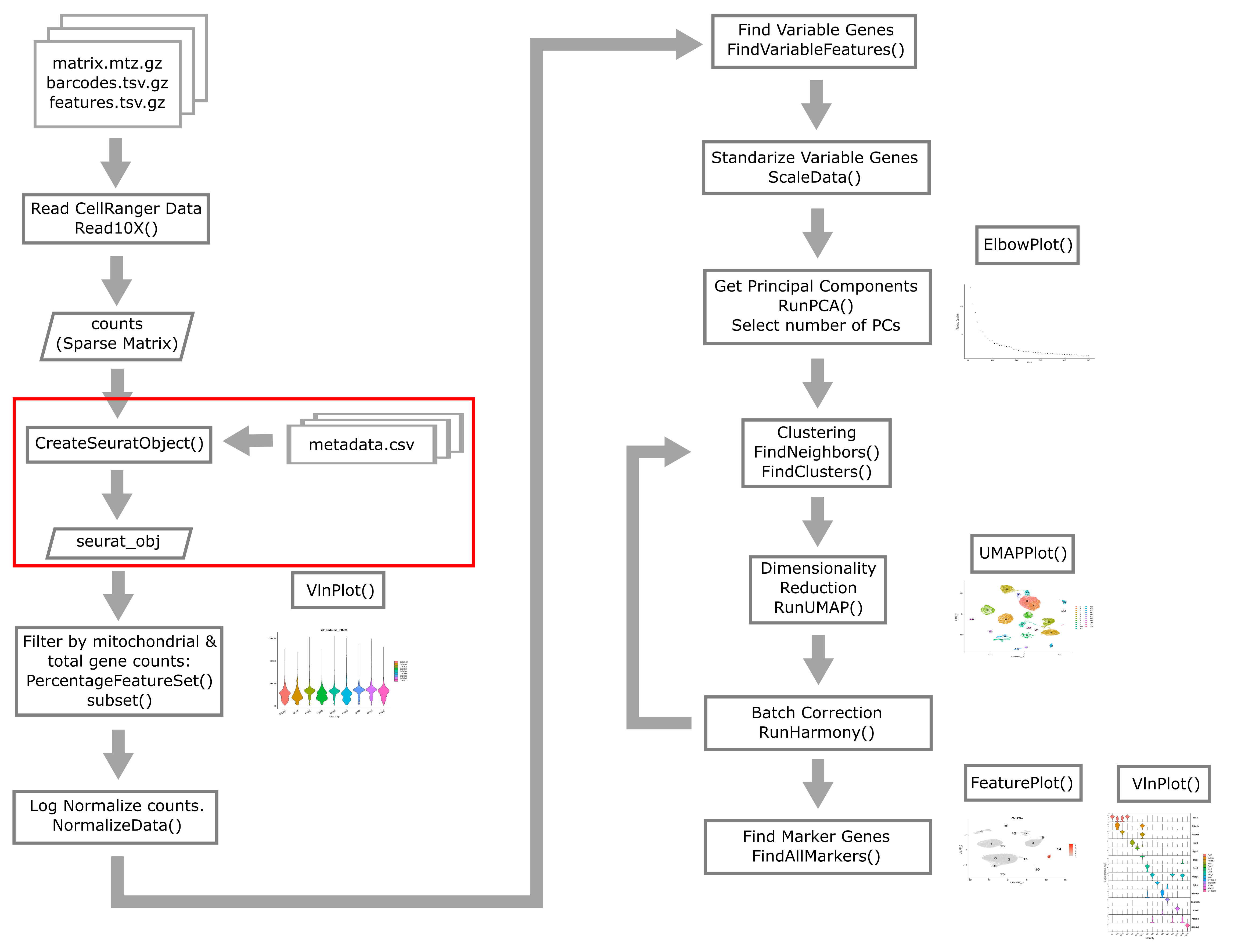

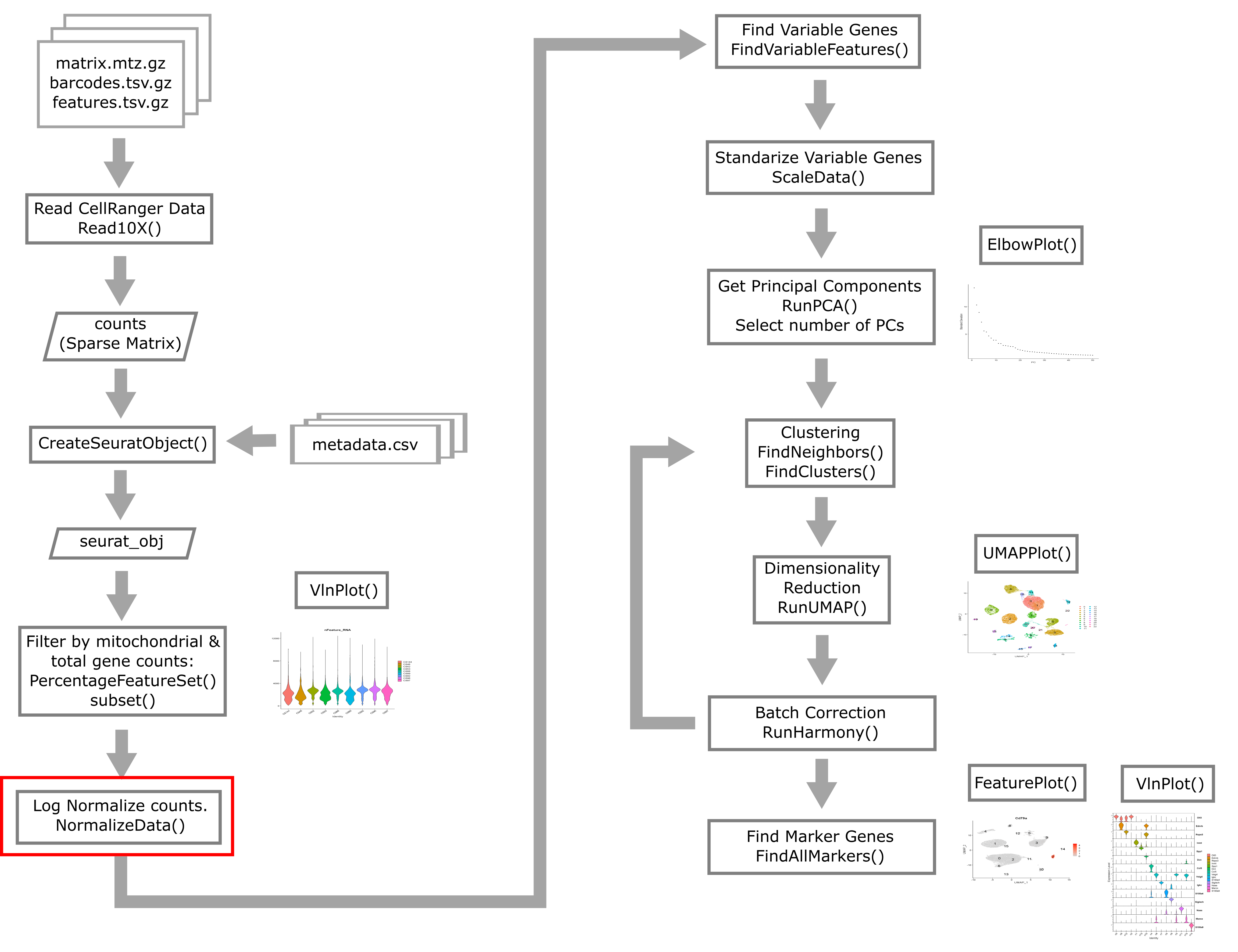

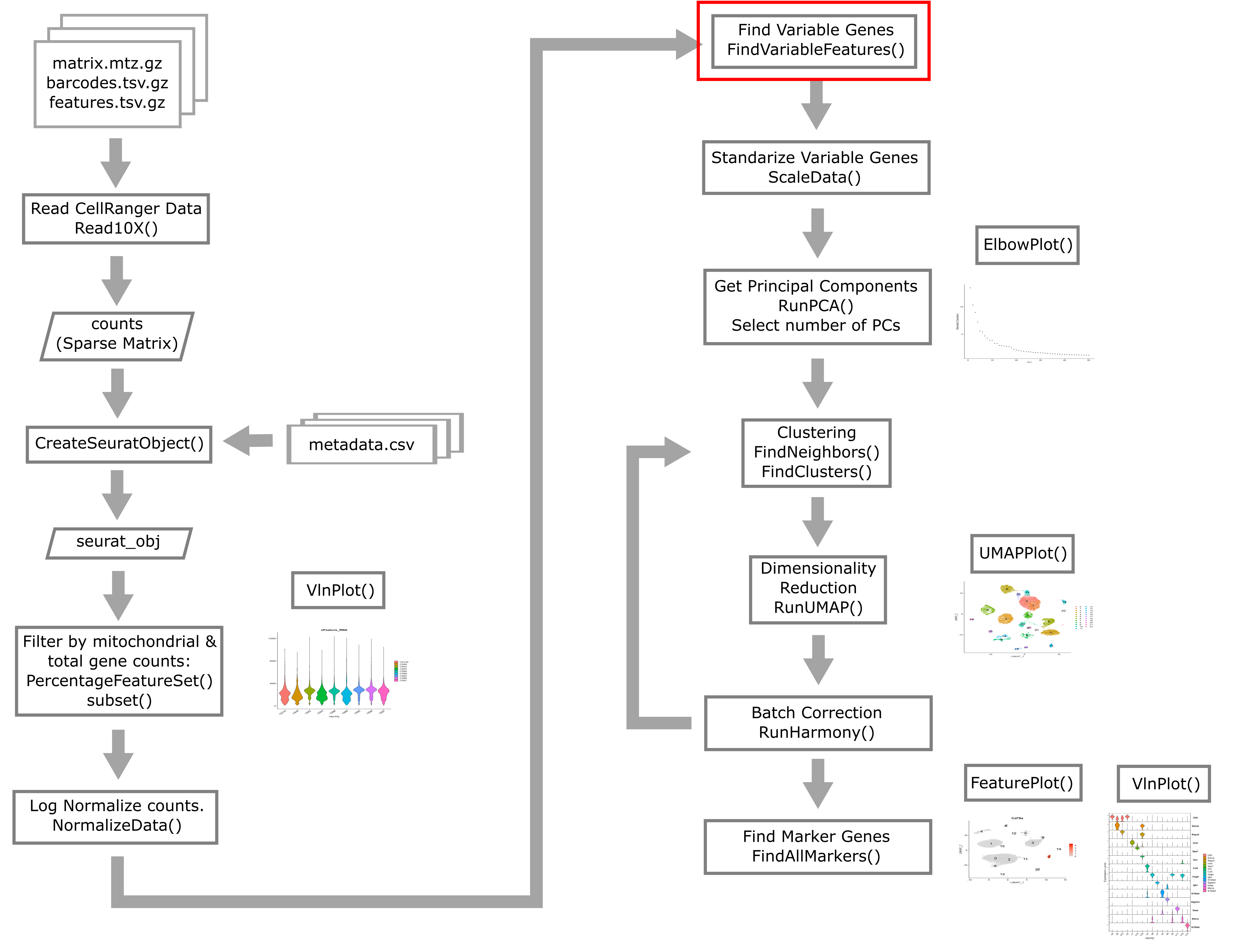

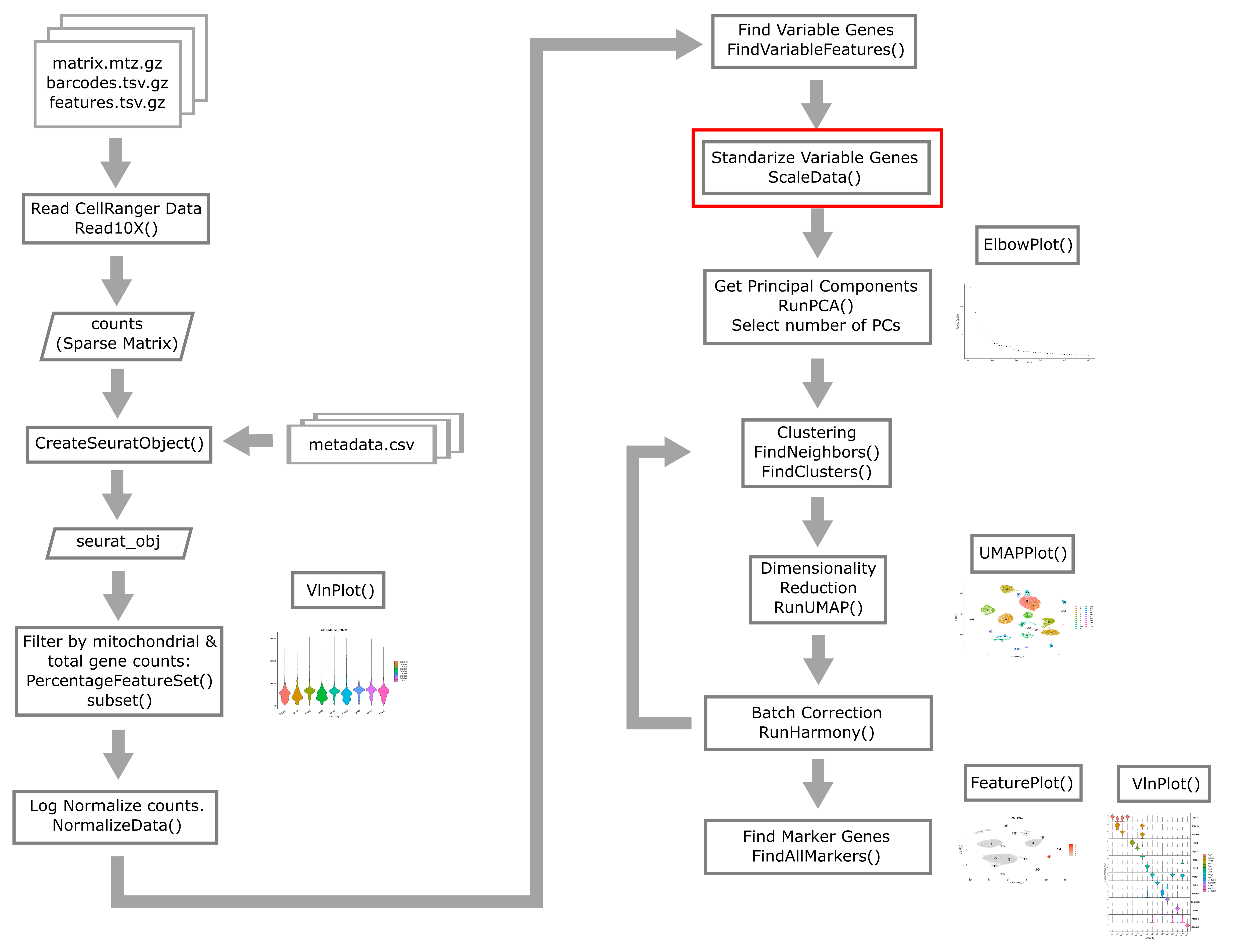

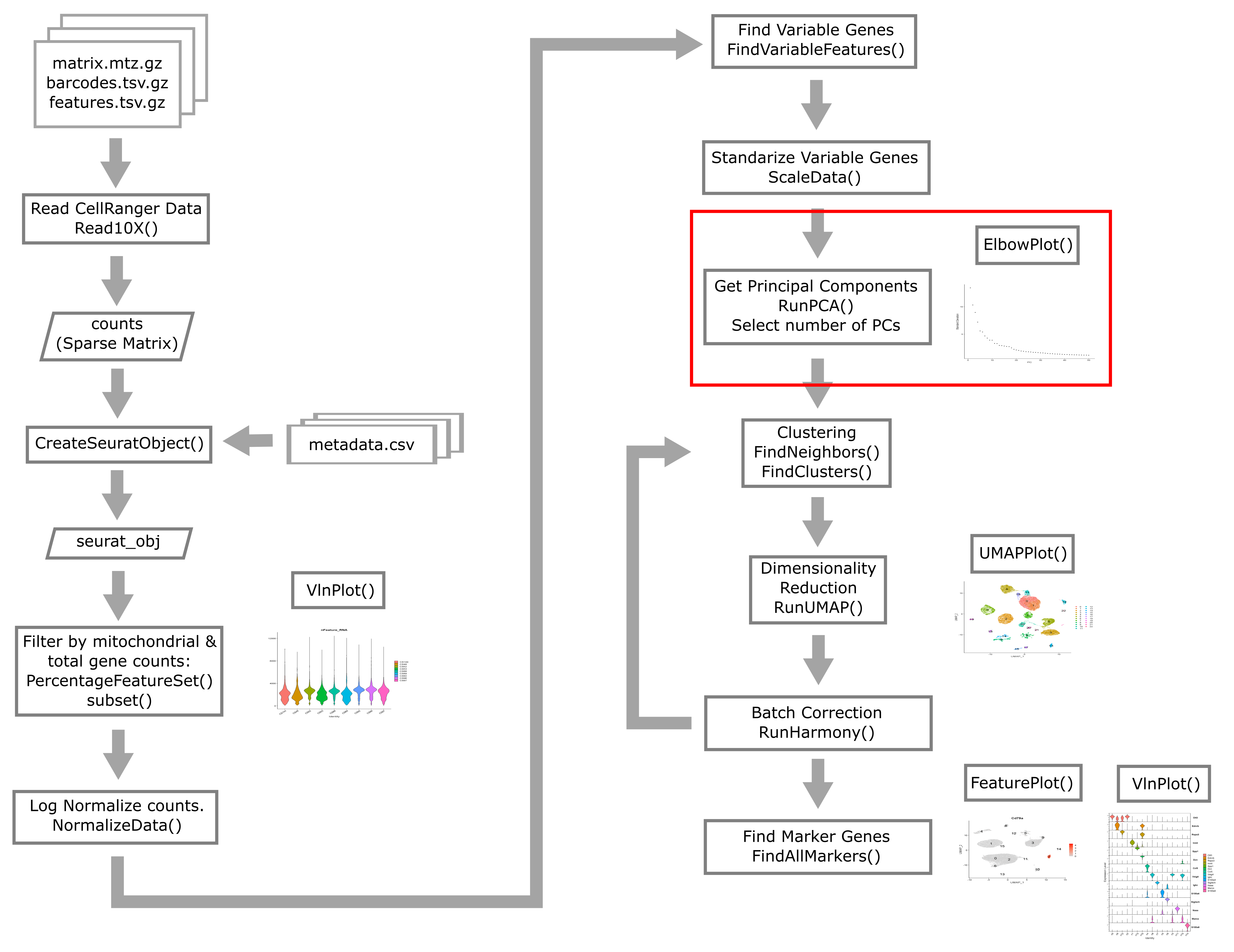

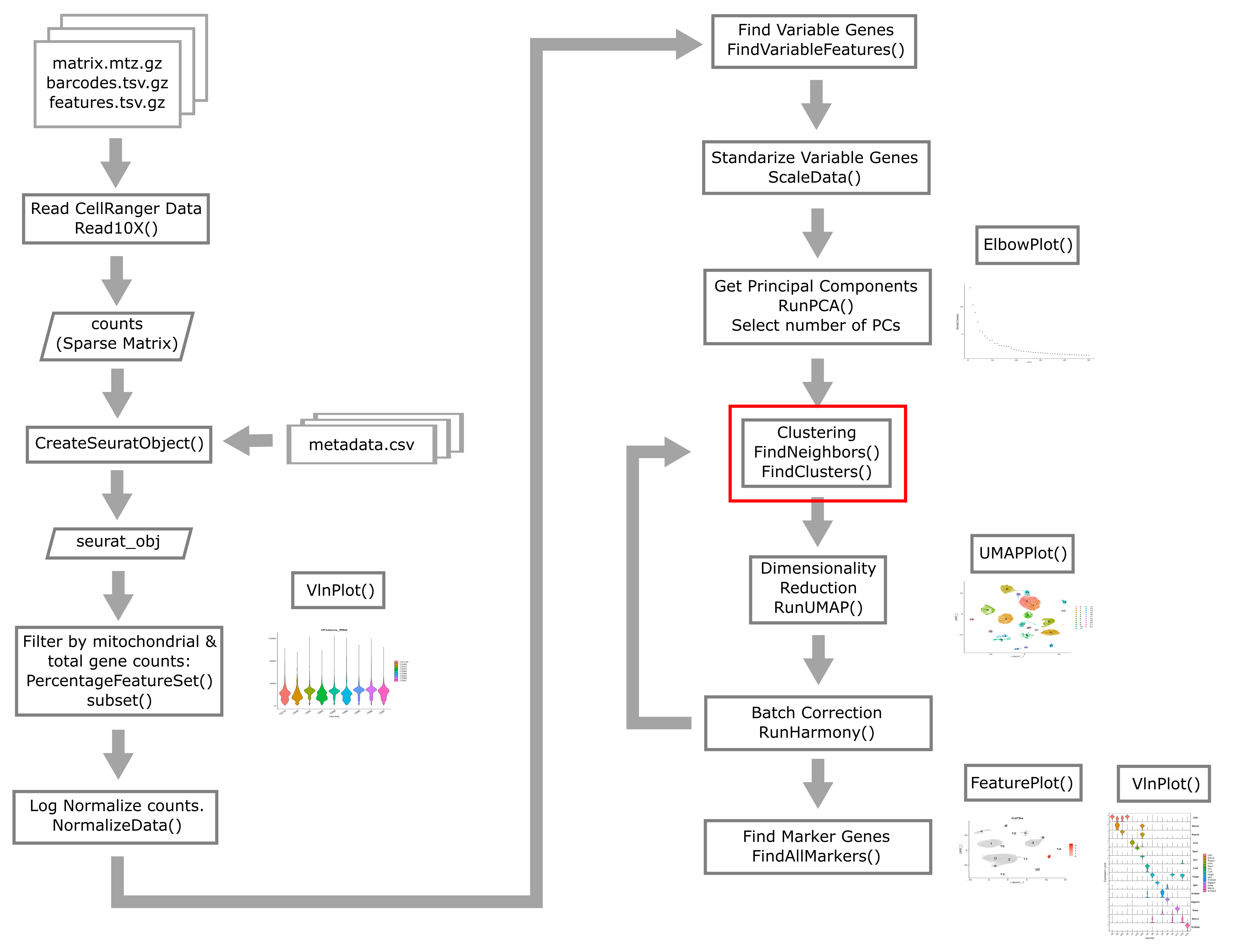

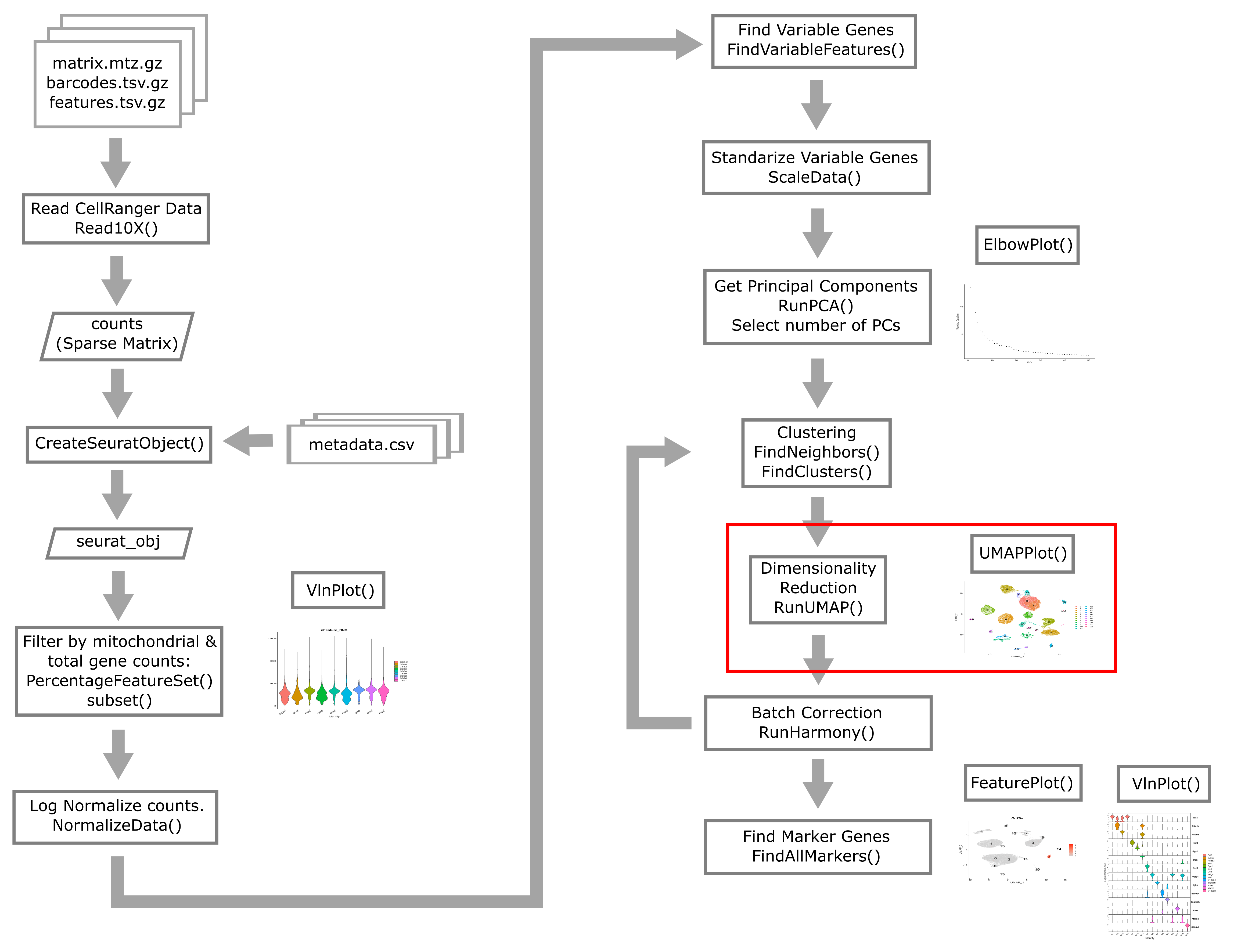

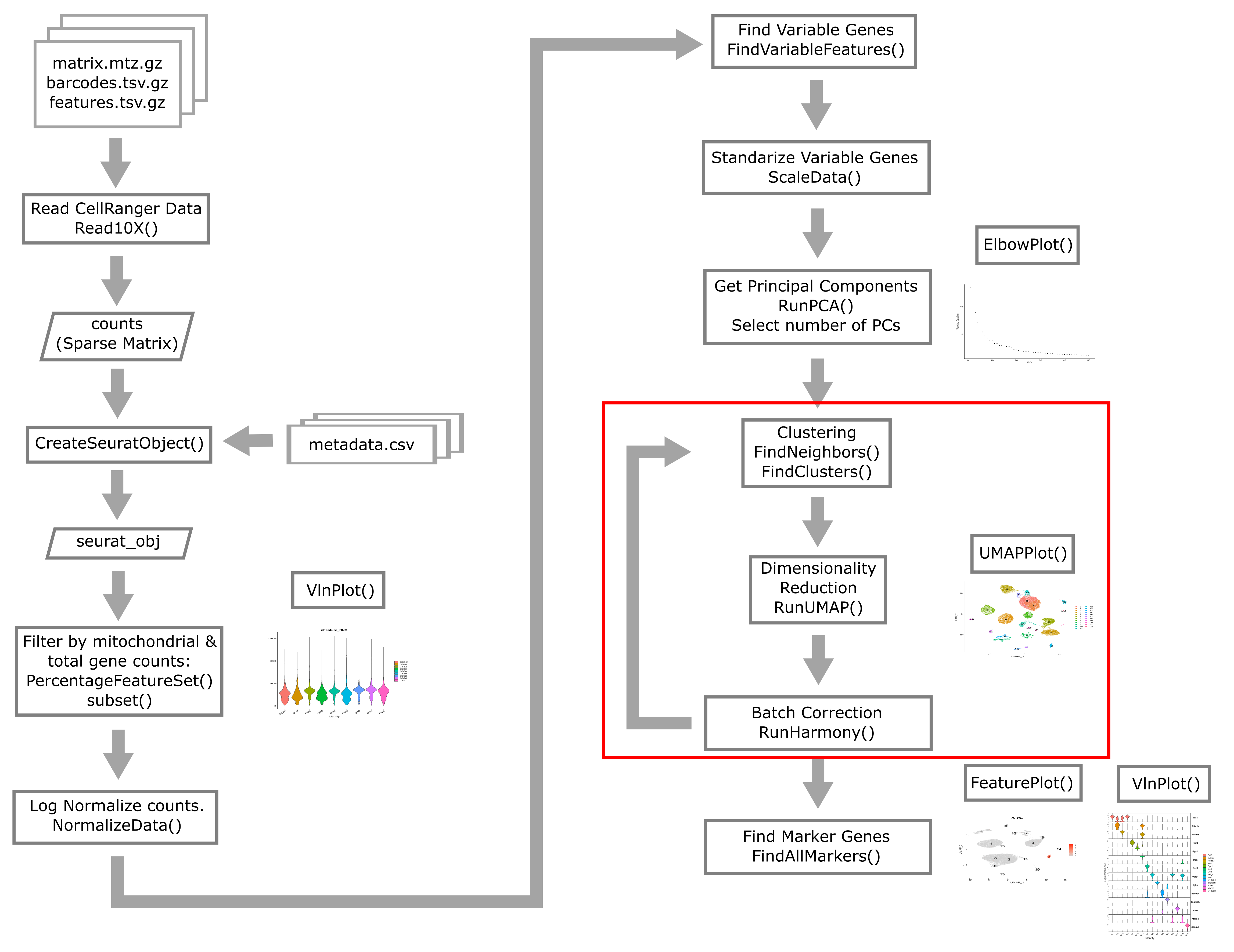

Overview of Single Cell Analysis Process

Open Project File

In the Setup section of this workshop, you created an RStudio Project. Open this project now, by:

- selecting File –> Open Project… from the Menu

- choosing “scRNA.Rproj”

- opening the project file.

What do raw scRNA-Seq data look like?

The raw data for an scRNA-Seq experiment typically consists of two FASTQ files. One file contains the sequences of the cell barcode and molecular barcode (UMI), while the other file contains the sequences derived from the transcript. The reads in file one are approximately 28bp long (16bp cell barcode, 12bp UMI), while the reads in file two are approximately 90bp long.

The Single Cell Biology Laboratory at JAX additionally provides output of running the 10X CellRanger pipeline (see below).

Typical pre-processing pipeline

10X CellRanger

10X CellRanger is “a set of analysis pipelines that process Chromium single cell data to align reads, generate feature-barcode matrices” and perform various other downstream analyses. In this course we will work with data that has been preprocessed using CellRanger. All you need to remember is that we used CellRanger to obtain gene expression counts for each gene within each cell.

CellRanger alternatives

There are several alternatives to CellRanger. Each of these alternatives has appealing properties that we encourage you to read about but do not have the time to discuss in this course. Alternatives include:

alevinSrivastava et al. 2019, from the developers of thesalmonalignerkallisto | bustoolsMelsted et al. 2021, from the developers of thekallistoalignerSTARsoloKaminow et al 2021, from the developers of theSTARaligner

While you should be aware that these alternatives exist and in some cases there may be very compelling reasons to use them, broadly speaking CellRanger is the most widely used tool for processing 10X Chromium scRNA-Seq data.

Introduction to two major single cell analysis ecosystems:

At the time that this workshop was created, there were many different software packages designed for analyzing scRNA-seq data in a variety of scenarios. The two scRNA-seq software “ecosystems” that were most widely in use were:

- R/Seurat : The Seurat ecosystem is the tool of choice for this workshop. The

biggest strength of Seurat is its straightforward vignettes and ease of

visualization/exploration.

- Seurat was released in 2015 by the Regev lab.

- The first author, Rahul Satija, now has a faculty position and has maintained and improved Seurat.

- Currently at version 4.

- Source code available on Github.

- Each version of Seurat adds new functionality:

- Seurat v1: Infers cellular localization by integrating scRNA-seq with in situ hybridization.

- Seurat v2: Integrates multiple scRNA-seq data sets using shared correlation structure.

- Seurat v3: Integrates data from multiple technologies, i.e. scRNA-seq, scATAC-seq, proteomics, in situ hybridization.

- Seurat v4: Integrative multimodal analysis and mapping of user data sets to cell identity reference database.

- Python/scanpy and anndata

- Scanpy is a python toolkit for analyzing single-cell gene expression data.

- Scanpy is built jointly with anndata, which is a file format specification and accompanying API for efficiently storing and accessing single cell data.

- Like Seurat, scanpy is under active development as well. Scanpy has an advantage of being a somewhat larger and more diverse community than Seurat, where developement is centered around a single lab group.

- This software has been used in a very large number of single cell projects. We encourage you to check it out and consider using it for your own work.

For this course we will not use scanpy because we view R/Seurat as having a slight edge over scanpy when it comes to visualization and interactive exploration of single cell data.

Reading in CellRanger Data

As described above, CellRanger is software which preprocesses Chromium single cell data to align reads, generate feature-bar code matrices, and perform other downstream analyses. We will not be using any of CellRanger’s downstream analyses, but we will be using the feature-barcode matrix produced by CellRanger. A feature-barcode matrix – in the context of scRNA-Seq – is a matrix that gives gene expression counts for each gene in each single cell. In a feature-barcode matrix, the genes (rows) are the features, and the cells (columns) are each identified by a barcode. The name feature-barcode matrix is a generalized term for the gene expression matrix. For example, feature-barcode could also refer to a matrix of single cell protein expression or single cell chromatin accessibility. In this workshop, we will read in the feature-barcode matrix produced by CellRanger and will perform the downstream analysis using Seurat.

Liver Atlas

Cell Ranger Files

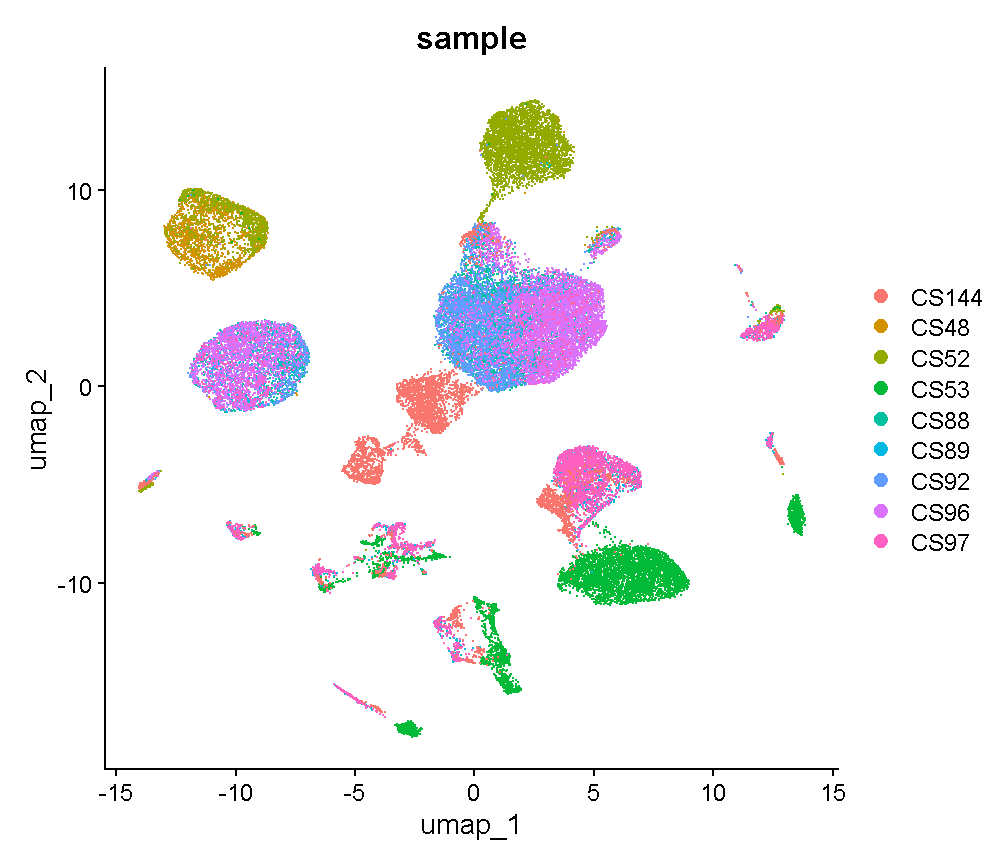

In this lesson, we will read in a subset of data from the Liver Atlas, which is described in their Cell paper. Briefly, the authors performed scRNASeq on liver cells from mice and humans, identified cell types, clustered them, and made the data publicly available. We will be working with a subset of the mouse liver data. We split the data into two sets, one to use in the lesson and one for you to work with independently as a challenge.

Before the workshop, you should have downloaded the data from

Box and placed it in your data directory.

Go to the Setup page for instructions on how to download the data

files.

Open a file browser and look in the data subdirectory mouseStSt_invivo and

you should see three files. Each file ends with ‘gz’, which indicates that it

has been compressed (or ‘zipped’) using

gzip. You don’t need to unzip them;

the software that we use will uncompress the files as it reads them in. The

files are:

- matrix.mtx.gz: The feature-barcode matrix, i.e. a two-dimensional

matrix containing the counts for each gene in each cell.

- Genes are in rows and cells are in columns.

- This file is in a special sparse matrix format which reduces disk space and memory usage.

- barcodes.tsv.gz: DNA barcodes for each cell. Used as column names in counts matrix.

- features.tsv.gz: Gene symbols for each gene. Used as row names in counts matrix.

Challenge 1

1). R has a function called file.size. Look at the help for this function and get the size of each of the files in the

mouseStSt_invivodirectory. Which one is the largest?Solution to Challenge 1

1).

file.size(file.path(data_dir, 'mouseStSt_invivo', 'barcodes.tsv.gz'))

584346 bytes

file.size(file.path(data_dir, 'mouseStSt_invivo', 'features.tsv.gz'))

113733 bytes

file.size(file.path(data_dir, 'mouseStSt_invivo', 'matrix.mtx.gz'))

603248953 bytes

‘matrix.mtx.gz’ is the largest file.

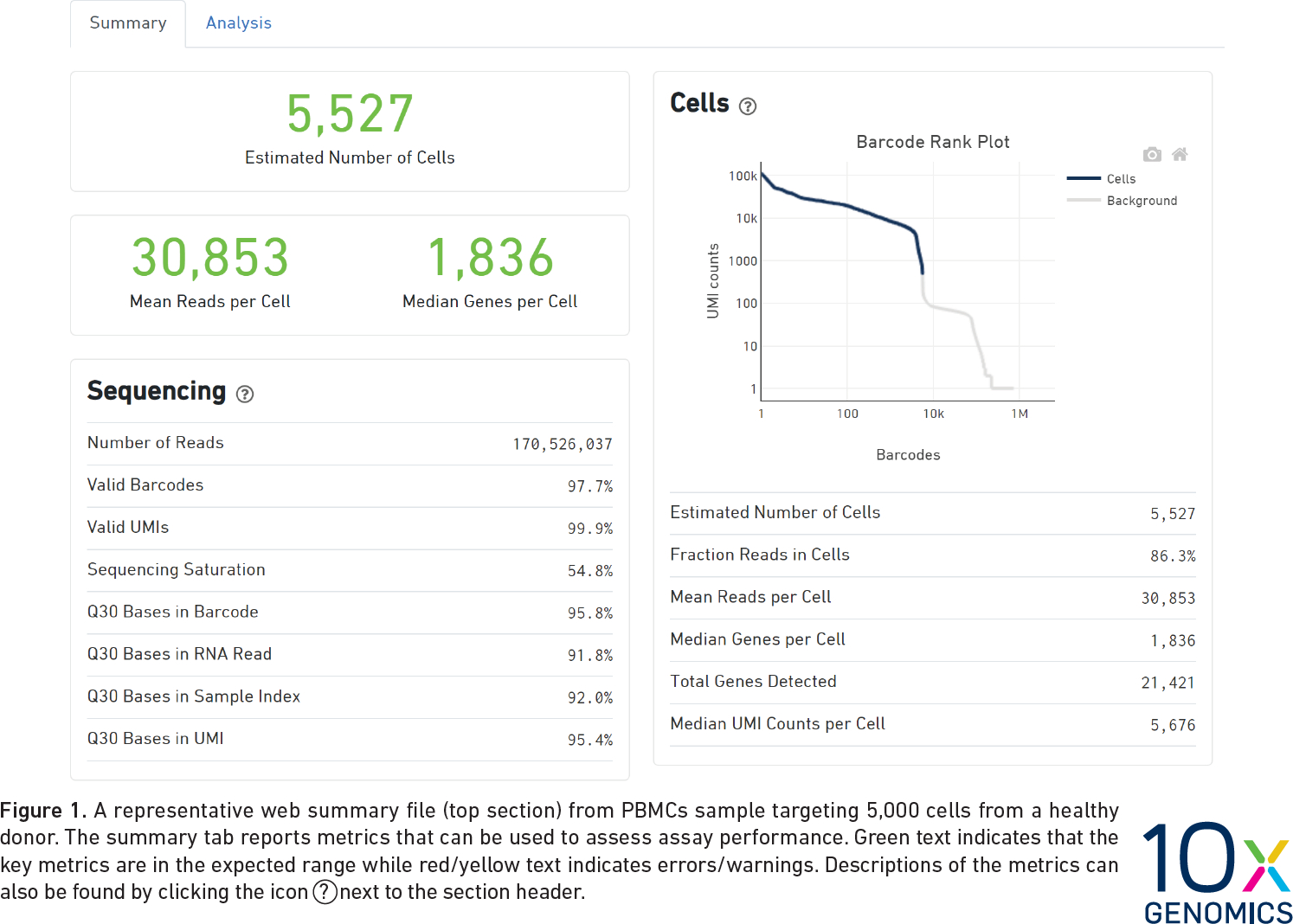

CellRanger Quality Control Report

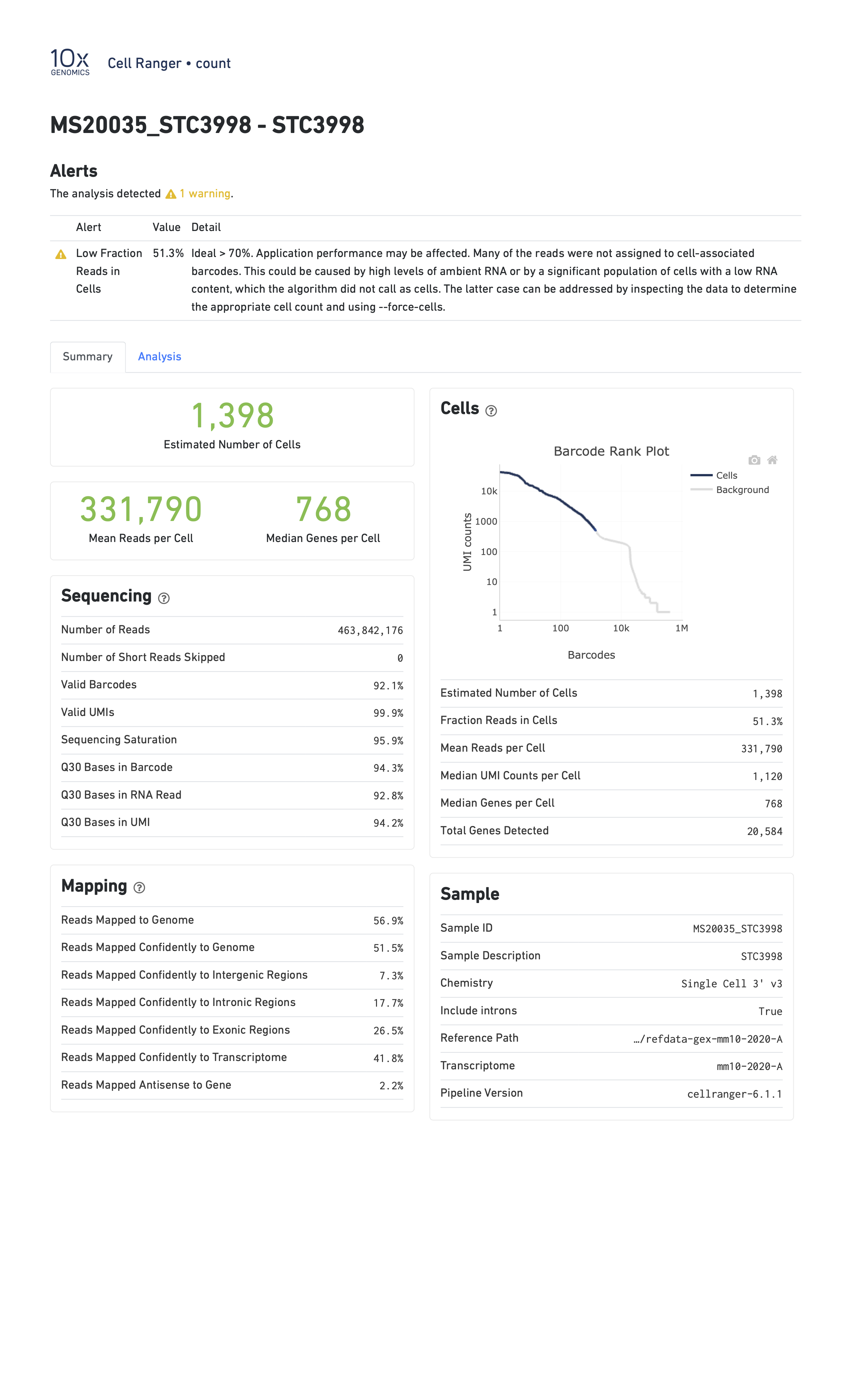

CellRanger also produces a Quality Control (QC) report as an HTML document. It produces one report for each sample we run (each channel of the 10X chip). We do not have the QC report from the Liver Atlas study, but the figure below shows an example report. The report highlights three numbers:

- Estimated Number of Cells: This indicates the number of cells recovered in your experiment. As we previously discussed this will be less than the number of cells you loaded. The number of cells recovered will almost never be the exact number of cells you had hoped to recover, but we might like to see a number within approximately +/-20% of your goal.

- Mean Reads per Cell: This indicates the number of reads in each cell. This will be a function of how deeply you choose to sequence your library.

- Median Genes per Cell: This indicates the median number of genes detected in each cell. Note that this is much lower than in bulk RNA-Seq. This number will also be lower for single nucleus than for single cell RNA-Seq, and is also likely to vary between cell types.

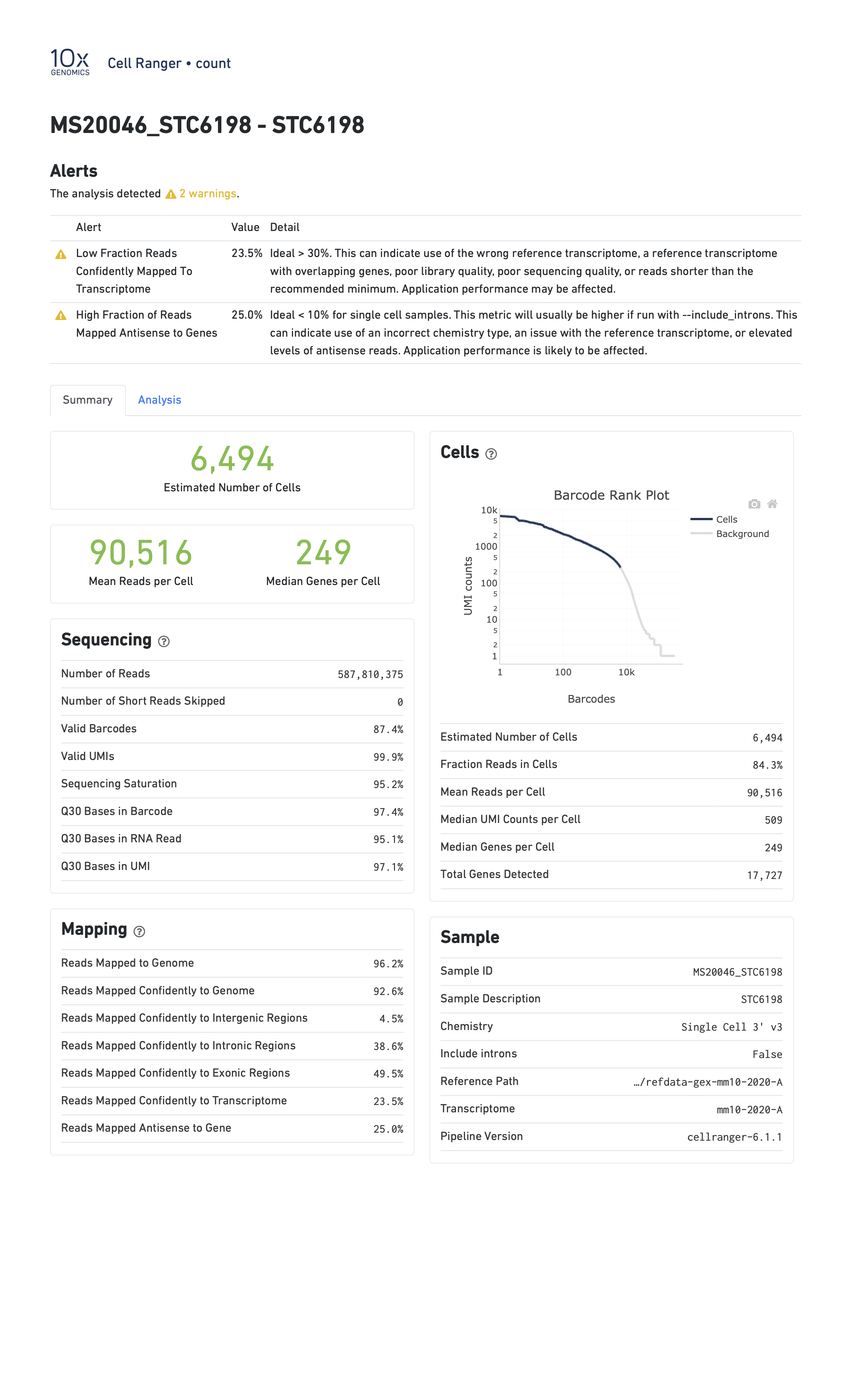

When you run a sample that has a problem of some kind, the CellRanger report might be able to detect something anomalous about your data and present you with a warning. Here are two examples of reports with warning flags highlighted.

In the report below, CellRanger notes that a low fraction of reads are within cells. This might be caused by, for example, very high levels of ambient RNA.

In the report below, CellRanger notes that a low fraction of reads are confidently mapped to the transcriptome, and a high fraction of reads map antisense to genes. Note that in this sample we are seeing only 249 genes per cell despite a mean of over 90,000 reads per cell. This likely indicates a poor quality library.

Reading a CellRanger Gene Expression Count Matrix using Seurat

In order to read these files into memory, we will use the Seurat::Read10X() function. This function searches for the three files mentioned above in the directory that you pass in. Once it verifies that all three files are present, it reads them in to create a counts matrix with genes in rows and cells in columns.

library(Seurat)

data_dir <- 'data'

We will use the gene.column = 1 argument to tell Seurat to use the first

column in ‘features.tsv.gz’ as the gene identifier.

Run the following command. This may take up to three minutes to complete.

# uses the Seurat function Read10X()

counts <- Read10X(file.path(data_dir, 'mouseStSt_invivo'), gene.column = 1)

counts now contains the sequencing read counts for each gene and cell.

How many rows and columns are there in counts?

dim(counts)

[1] 31053 47743

In the counts matrix, genes are in rows and cells are in columns. Let’s look

at the first few gene names.

head(rownames(counts), n = 10)

[1] "Xkr4" "Gm1992" "Gm37381" "Rp1" "Sox17" "Gm37323" "Mrpl15"

[8] "Lypla1" "Gm37988" "Tcea1"

As you can see, the gene names are gene symbols. There is some risk that these may not be unique. Let’s check whether any of the gene symbols are duplicated. We will sum the number of duplicated gene symbols.

sum(duplicated(rownames(counts)))

[1] 0

The sum equals zero, so there are no duplicated gene symbols, which is good. As it turns out, the reference genome/annotation files that are prepared for use by CellRanger have already been filtered to ensure no duplicated gene symbols.

Let’s look at the cell identifiers in the column names.

head(colnames(counts), n = 10)

[1] "AAACGAATCCACTTCG-2" "AAAGGTACAGGAAGTC-2" "AACTTCTGTCATGGCC-2"

[4] "AATGGCTCAACGGTAG-2" "ACACTGAAGTGCAGGT-2" "ACCACAACAGTCTCTC-2"

[7] "ACGATGTAGTGGTTCT-2" "ACGCACGCACTAACCA-2" "ACTGCAATCAACTCTT-2"

[10] "ACTGCAATCGTCACCT-2"

Each of these barcodes identifies one cell. They should all be unique. Once again, let’s verify this.

sum(duplicated(colnames(counts)))

[1] 0

The sum of duplicated values equals zero, so all of the barcodes are unique. The barcode sequence is the actual sequence of the oligonucleotide tag that was attached to the GEM (barcoded bead) that went into each droplet. In early versions of 10X technology there were approximately 750,000 barcodes per run while in the current chemistry there are >3 million barcodes. CellRanger attempts to correct sequencing errors in the barcodes and uses a “whitelist” of known barcodes (in the 10X chemistry) to help.

Next, let’s look at the values in counts.

counts[1:10, 1:20]

10 x 20 sparse Matrix of class "dgCMatrix"

[[ suppressing 20 column names 'AAACGAATCCACTTCG-2', 'AAAGGTACAGGAAGTC-2', 'AACTTCTGTCATGGCC-2' ... ]]

Xkr4 . . . . . . . . . . . . . . . . . . . .

Gm1992 . . . . . . . . . . . . . . . . . . . .

Gm37381 . . . . . . . . . . . . . . . . . . . .

Rp1 . . . . . . . . . . . . . . . . . . . .

Sox17 . . 2 4 . . . 1 . 1 1 . . 2 . . 1 8 1 .

Gm37323 . . . . . . . . . . . . . . . . . . . .

Mrpl15 . . . 1 1 . . . 1 . 2 . . . . 1 . 1 1 .

Lypla1 . . 2 1 . 1 1 . . . 1 1 2 . 1 1 1 . . .

Gm37988 . . . . . . . . . . . . . . . . . . . .

Tcea1 . . 2 . 2 2 . . 1 2 . 2 2 . . 2 1 1 2 .

We can see the gene symbols in rows along the left. The barcodes are not shown to make the values easier to read. Each of the periods represents a zero. The ‘1’ values represent a single read for a gene in one cell.

Although counts looks like a matrix and you can use many matrix functions on

it, counts is actually a different type of object. In scRNASeq, the read

depth in each cell is quite low. So you many only get counts for a small number

of genes in each cell. The counts matrix has 31053 rows and

47743 columns, and includes 1.4825634 × 109

entries. However, most of these entries

(92.4930544%) are

zeros because every gene is not detected in every cell. It would be wasteful

to store all of these zeros in memory. It would also make it difficult to

store all of the data in memory. So counts is a ‘sparse matrix’, which only

stores the positions of non-zero values in memory.

Look at the structure of the counts matrix using str.

str(counts)

Formal class 'dgCMatrix' [package "Matrix"] with 6 slots

..@ i : int [1:111295227] 15 19 36 38 40 61 66 67 70 93 ...

..@ p : int [1:47744] 0 3264 6449 9729 13446 16990 20054 23142 26419 29563 ...

..@ Dim : int [1:2] 31053 47743

..@ Dimnames:List of 2

.. ..$ : chr [1:31053] "Xkr4" "Gm1992" "Gm37381" "Rp1" ...

.. ..$ : chr [1:47743] "AAACGAATCCACTTCG-2" "AAAGGTACAGGAAGTC-2" "AACTTCTGTCATGGCC-2" "AATGGCTCAACGGTAG-2" ...

..@ x : num [1:111295227] 1 1 1 2 1 6 1 1 2 1 ...

..@ factors : list()

We can see that the formal class name is a “dgCMatrix”. There are two long vectors of integers which encode the positions of non-zero values. The gene names and cell barcodes are stored in character vectors and the non-zero values are an integer vector. This class saves space by not allocating memory to store all of the zero values.

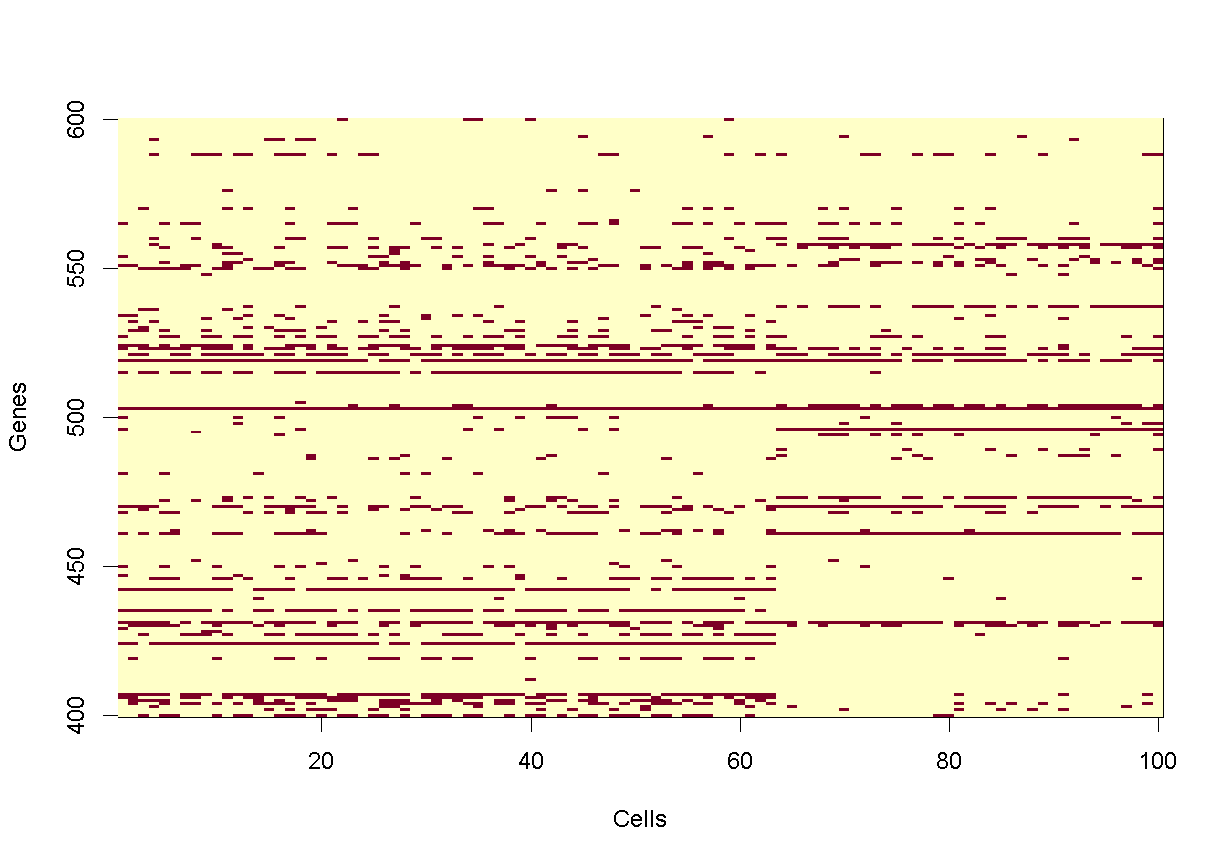

Let’s look at small portion of counts. We will create a tile plot indicating

which values are non-zero for the first 100 cells and genes in rows 400 to 600.

For historical reasons, R plots the rows along the X-axis and columns along the

Y-axis. We will transpose the matrix so that genes are on the Y-axis, which

reflects the way in which we normally look at this matrix.

image(1:100, 400:600, t(as.matrix(counts[400:600,1:100]) > 0),

xlab = 'Cells', ylab = 'Genes')

plot of chunk counts_image

In the tile plot above, each row represents one gene and each column represents one cell. Red indicates non-zero values and yellow indicates zero values. As you can see, most of the matrix consists of zeros (yellow tiles) and hence is called ‘sparse’. You can also see that some genes are expressed in most cells, indicated by the horizontal red lines, and that some genes are expressed in very few cells.

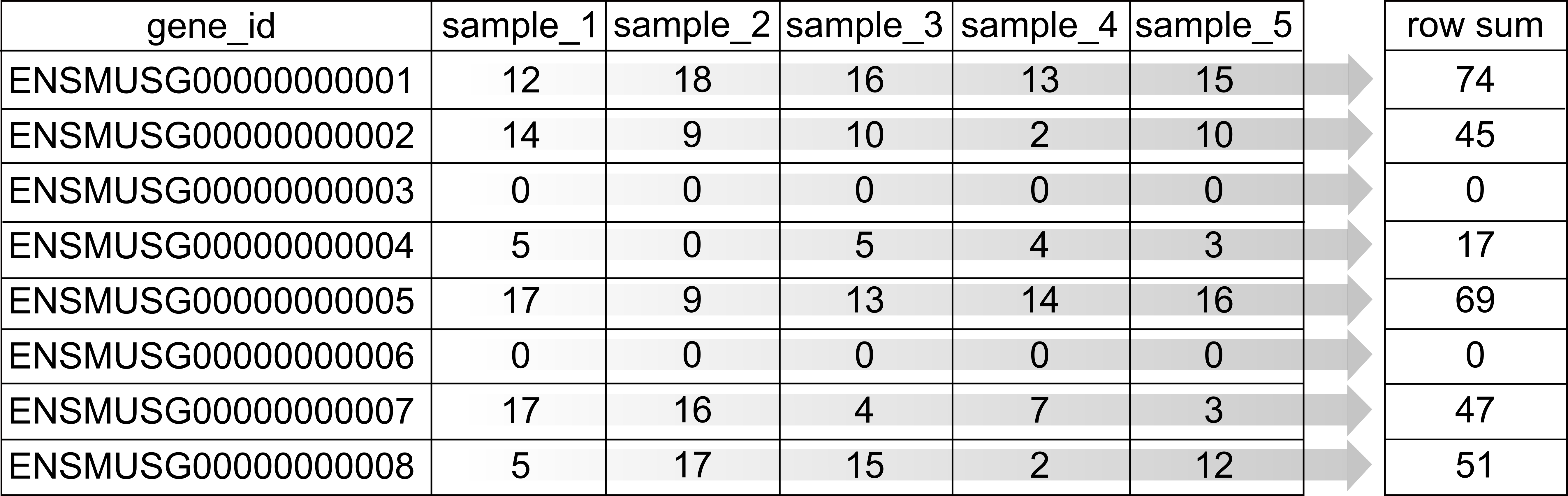

What proportion of genes have zero counts in all samples?

gene_sums <- data.frame(gene_id = rownames(counts),

sums = Matrix::rowSums(counts))

sum(gene_sums$sums == 0)

[1] 7322

We can see that 7322 (24%) genes have no reads at all associated with them. In the next lesson, we will remove genes that have no counts in any cells.

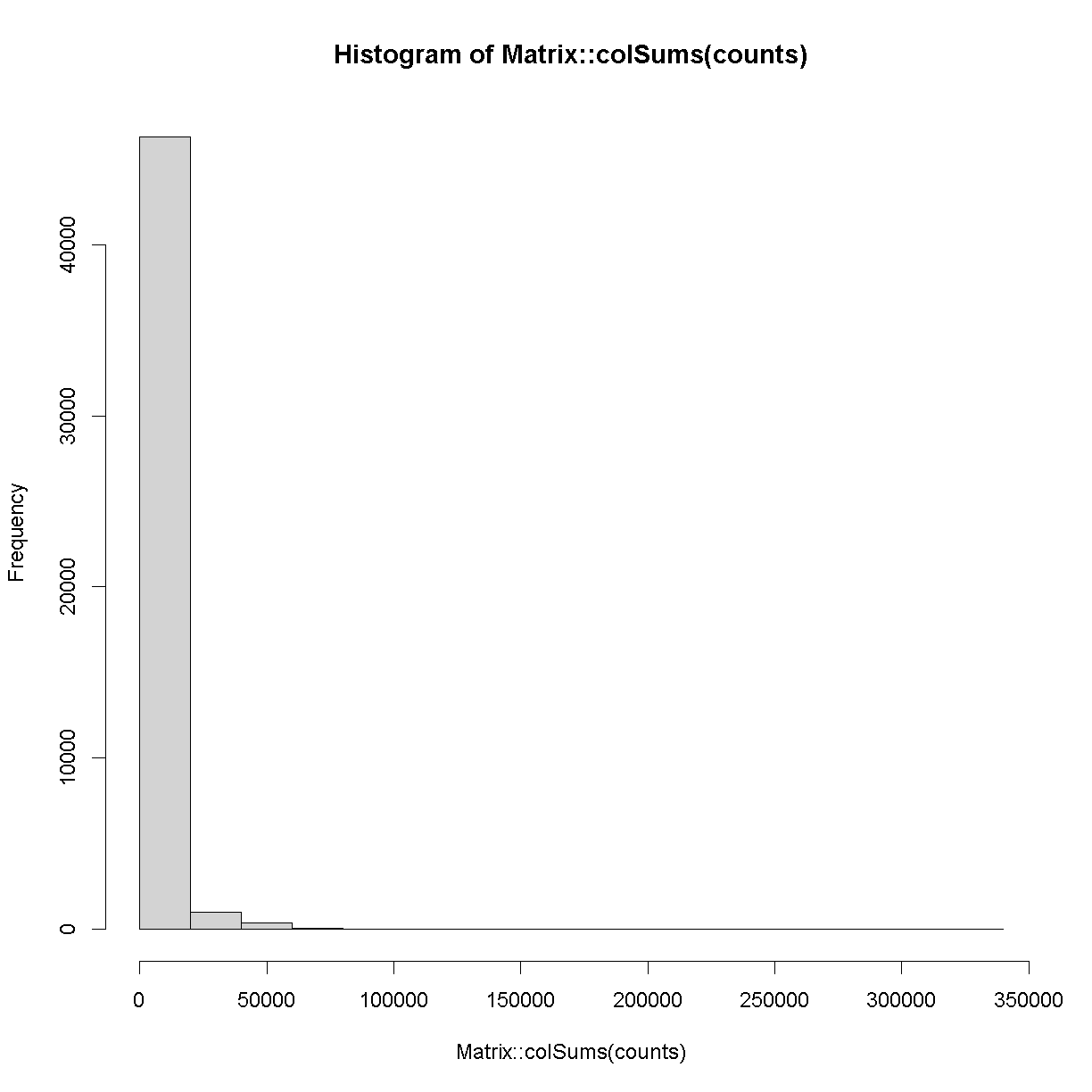

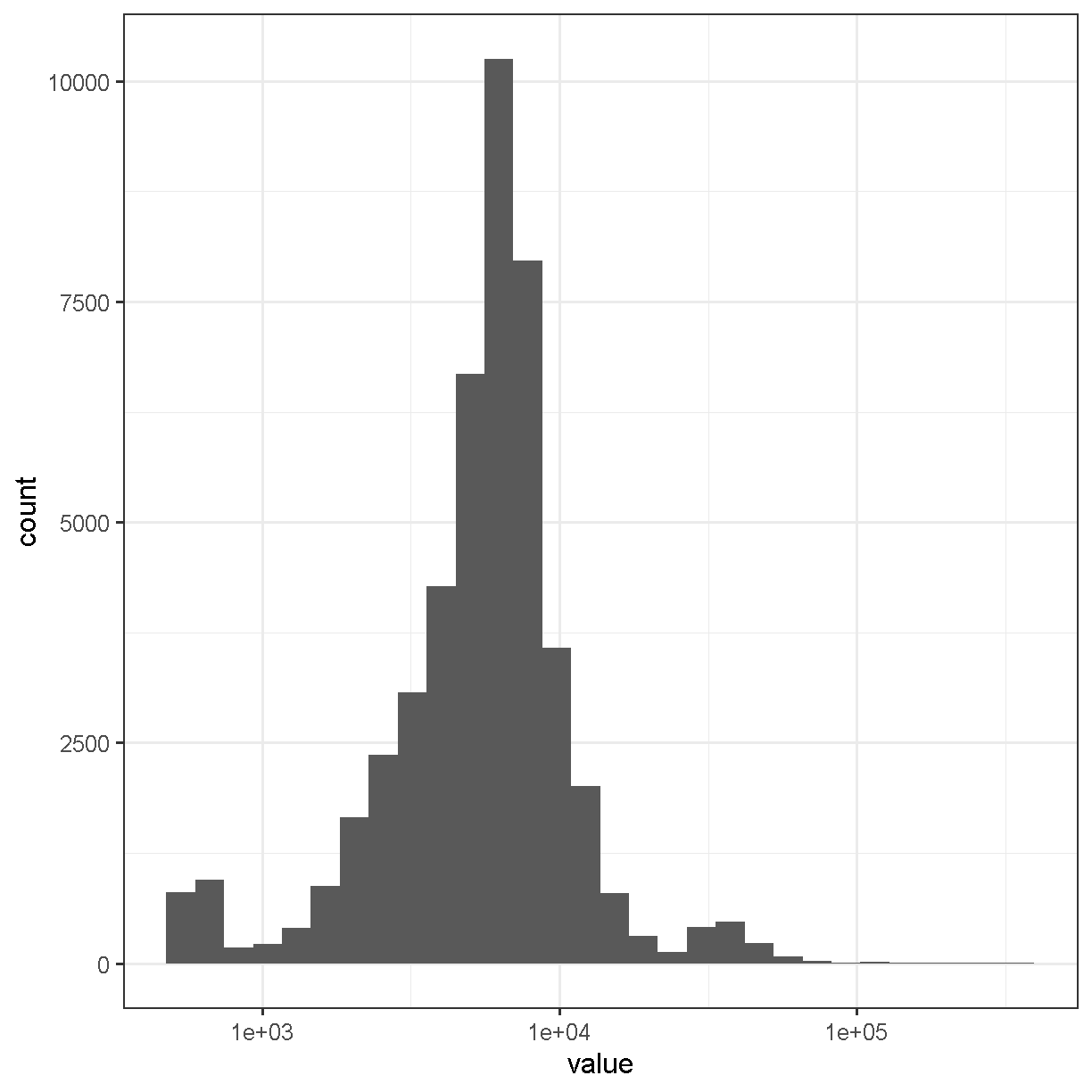

Next, let’s look at the number of counts in each cell.

hist(Matrix::colSums(counts))

plot of chunk cell_counts

Matrix::colSums(counts) %>%

enframe() %>%

ggplot(aes(value)) +

geom_histogram(bins = 30) +

scale_x_log10() +

theme_bw(base_size = 16)

plot of chunk cell_counts

The range of counts covers several orders of magnitude, from 500 to 3.32592 × 105. We will need to normalize for this large difference in sequencing depth, which we will cover in the next lesson.

Sample Metadata

Sample metadata refers to information about your samples that is not the “data”, i.e. the gene counts. This might include information such as sex, tissue, or treatment. In the case of the liver atlas data, the authors provided a metadata file for their samples.

The sample metadata file is a comma-separated variable (CSV) file, We will read it in using the readr read_csv function.

metadata <- read_csv(file.path(data_dir, 'mouseStSt_invivo', 'annot_metadata_first.csv'))

Rows: 47743 Columns: 4

── Column specification ────────────────────────────────────────────────────────────────────────────────────────────────────────

Delimiter: ","

chr (4): sample, cell, digest, typeSample

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

Let’s look at the top of the metadata.

head(metadata)

# A tibble: 6 × 4

sample cell digest typeSample

<chr> <chr> <chr> <chr>

1 CS48 AAACGAATCCACTTCG-2 inVivo scRnaSeq

2 CS48 AAAGGTACAGGAAGTC-2 inVivo scRnaSeq

3 CS48 AACTTCTGTCATGGCC-2 inVivo scRnaSeq

4 CS48 AATGGCTCAACGGTAG-2 inVivo scRnaSeq

5 CS48 ACACTGAAGTGCAGGT-2 inVivo scRnaSeq

6 CS48 ACCACAACAGTCTCTC-2 inVivo scRnaSeq

In the table above, you can see that there are four columns:

- sample: mouse identifier from which cell was derived;

- cell: the DNA bar code used to identify the cell;

- digest: cells for this liver atlas were harvested using either an in vivo or an ex vivo procedure. In this subset of the data we are looking only at in vivo samples;

- typeSample: the type of library preparation protocol, either single cell RNA-seq (scRnaSeq) or nuclear sequencing (nucSeq). In this subset of the data we are looking only at scRnaSeq samples.

Let’s confirm that we are only looking at scRnaSeq samples from in vivo digest cells:

dplyr::count(metadata, digest, typeSample)

# A tibble: 1 × 3

digest typeSample n

<chr> <chr> <int>

1 inVivo scRnaSeq 47743

We’re going to explore the data further using a series of Challenges. You will be asked to look at the contents of some of the columns to see how the data are distributed.

Challenge 2

How many mice were used to produce this data? The “sample” column contains the mouse identifier for each cell.

Solution to Challenge 2

count(metadata, sample) %>% summarize(total = n())

Challenge 3

How many cells are there from each mouse?

Solution to Challenge 3

count(metadata, sample)

In this workshop, we will attempt to reproduce some of the results of the Liver Atlas using Seurat. We will analyze the in-vivo single cell RNA-Seq together.

Save Data for Next Lesson

We will use the in-vivo data in the next lesson. If you plan to keep your RStudio open, we will simply continue to the next lesson. If you wanted to save the data you could execute a command like:

save(counts, metadata, file = file.path(data_dir, 'lesson03.Rdata'))

Challenge 5

In the lesson above, you read in the scRNASeq data. There is another dataset which was created using an ex vivo digest in the

mouseStSt_exvivodirectory. Delete thecountsandmetadataobjects from your environment. Then read in the counts and metadata from themouseStSt_exvivodirectory and save them to a file called ‘lesson03_challenge.Rdata’.Solution to Challenge 5

# Remove exising counts and metadata.

rm(counts, metadata)# Read in new counts.

counts <- Seurat::Read10X(file.path(data_dir, 'mouseStSt_exvivo'), gene.column = 1)

# Read in new metadata.

metadata <- read_csv(file.path(data_dir, 'mouseStSt_exvivo', 'annot_metadata.csv'))

# Save data for next lesson.

save(counts, metadata, file = file.path(data_dir, 'lesson03_challenge.Rdata'))

Session Info

sessionInfo()

R version 4.4.0 (2024-04-24 ucrt)

Platform: x86_64-w64-mingw32/x64

Running under: Windows 10 x64 (build 19045)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.utf8

[2] LC_CTYPE=English_United States.utf8

[3] LC_MONETARY=English_United States.utf8

[4] LC_NUMERIC=C

[5] LC_TIME=English_United States.utf8

time zone: America/New_York

tzcode source: internal

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] Seurat_5.1.0 SeuratObject_5.0.2 sp_2.1-4 lubridate_1.9.3

[5] forcats_1.0.0 stringr_1.5.1 dplyr_1.1.4 purrr_1.0.2

[9] readr_2.1.5 tidyr_1.3.1 tibble_3.2.1 ggplot2_3.5.1

[13] tidyverse_2.0.0 knitr_1.48

loaded via a namespace (and not attached):

[1] deldir_2.0-4 pbapply_1.7-2 gridExtra_2.3

[4] rlang_1.1.4 magrittr_2.0.3 RcppAnnoy_0.0.22

[7] spatstat.geom_3.3-3 matrixStats_1.4.1 ggridges_0.5.6

[10] compiler_4.4.0 png_0.1-8 vctrs_0.6.5

[13] reshape2_1.4.4 crayon_1.5.3 pkgconfig_2.0.3

[16] fastmap_1.2.0 labeling_0.4.3 utf8_1.2.4

[19] promises_1.3.0 tzdb_0.4.0 bit_4.5.0

[22] xfun_0.44 jsonlite_1.8.9 goftest_1.2-3

[25] highr_0.11 later_1.3.2 spatstat.utils_3.1-0

[28] irlba_2.3.5.1 parallel_4.4.0 cluster_2.1.6

[31] R6_2.5.1 ica_1.0-3 spatstat.data_3.1-2

[34] stringi_1.8.4 RColorBrewer_1.1-3 reticulate_1.39.0

[37] spatstat.univar_3.0-1 parallelly_1.38.0 lmtest_0.9-40

[40] scattermore_1.2 Rcpp_1.0.13 tensor_1.5

[43] future.apply_1.11.2 zoo_1.8-12 R.utils_2.12.3

[46] sctransform_0.4.1 httpuv_1.6.15 Matrix_1.7-0

[49] splines_4.4.0 igraph_2.0.3 timechange_0.3.0

[52] tidyselect_1.2.1 abind_1.4-8 rstudioapi_0.16.0

[55] spatstat.random_3.3-2 codetools_0.2-20 miniUI_0.1.1.1

[58] spatstat.explore_3.3-2 listenv_0.9.1 lattice_0.22-6

[61] plyr_1.8.9 shiny_1.9.1 withr_3.0.1

[64] ROCR_1.0-11 evaluate_1.0.1 Rtsne_0.17

[67] future_1.34.0 fastDummies_1.7.4 survival_3.7-0

[70] polyclip_1.10-7 fitdistrplus_1.2-1 pillar_1.9.0

[73] KernSmooth_2.23-24 plotly_4.10.4 generics_0.1.3

[76] vroom_1.6.5 RcppHNSW_0.6.0 hms_1.1.3

[79] munsell_0.5.1 scales_1.3.0 globals_0.16.3

[82] xtable_1.8-4 glue_1.8.0 lazyeval_0.2.2

[85] tools_4.4.0 data.table_1.16.2 RSpectra_0.16-2

[88] RANN_2.6.2 leiden_0.4.3.1 dotCall64_1.2

[91] cowplot_1.1.3 grid_4.4.0 colorspace_2.1-1

[94] nlme_3.1-165 patchwork_1.3.0 cli_3.6.3

[97] spatstat.sparse_3.1-0 spam_2.11-0 fansi_1.0.6

[100] viridisLite_0.4.2 uwot_0.2.2 gtable_0.3.5

[103] R.methodsS3_1.8.2 digest_0.6.35 progressr_0.14.0

[106] ggrepel_0.9.6 htmlwidgets_1.6.4 farver_2.1.2

[109] R.oo_1.26.0 htmltools_0.5.8.1 lifecycle_1.0.4

[112] httr_1.4.7 mime_0.12 bit64_4.5.2

[115] MASS_7.3-61

Key Points

CellRanger produces a gene expression count matrix that can be read in using Seurat.

The count matrix is stored as a sparse matrix with features in rows and cells in columns.

Quality Control of scRNA-Seq Data

Overview

Teaching: 90 min

Exercises: 30 minQuestions

How do I determine if my single cell RNA-seq experiment data is high quality?

What are the common quality control metrics that I should check in my scRNA-seq data?

Objectives

Critically examine scRNA-seq data to identify potential technical issues.

Apply filters to remove cells that are largely poor quality/dead cells.

Understand the implications of different filtering steps on the data.

suppressPackageStartupMessages(library(tidyverse))

suppressPackageStartupMessages(library(Matrix))

suppressPackageStartupMessages(library(SingleCellExperiment))

suppressPackageStartupMessages(library(scds))

suppressPackageStartupMessages(library(Seurat))

data_dir <- '../data'

dir(data_dir)

[1] "lesson03.Rdata"

[2] "lesson03_challenge.Rdata"

[3] "lesson04.rds"

[4] "lesson05.Rdata"

[5] "lesson05.rds"

[6] "mouseStSt_exvivo"

[7] "mouseStSt_exvivo.zip"

[8] "mouseStSt_invivo"

[9] "mouseStSt_invivo.zip"

[10] "regev_lab_cell_cycle_genes_mm.fixed.txt"

[11] "regev_lab_cell_cycle_genes_mm.fixed.txt_README"

Quality control in scRNA-seq

There are many technical reasons why cells produced by an scRNA-seq protocol might not be of high quality. The goal of the quality control steps are to assure that only single, live cells are included in the final data set. Ultimately some multiplets and poor quality cells will likely escape your detection and make it into your final dataset; however, these quality control steps aim to reduce the chance of this happening. Failure to undertake quality control is likely to adversely impact cell type identification, clustering, and interpretation of the data.

Some technical questions that you might ask include:

- Why is mitochondrial gene expression high in some cells?

- What is a unique molecular identifier (UMI), and why do we check numbers of UMI?

- What happens to make gene counts low in a cell?

Doublet detection

We will begin by discussing doublets. We have already discussed the concept of the doublet. Now we will try running one computational doublet-detection approach and track predictions of doublets.

We will use the scds method. scds contains two methods for predicting doublets. Method cxds is based on co-expression of gene pairs, while method bcds uses the full count information and a binary classification approach using in silico doublets. Method cxds_bcds_hybrid combines both approaches. We will use the combined approach. See Bais and Kostka 2020 for more details.

Because this doublet prediction method takes some time and is a bit memory-intensive, we will run it only on cells from one mouse. We will return to the doublet predictions later in this lesson.

cell_ids <- filter(metadata, sample == 'CS52') %>% pull(cell)

sce <- SingleCellExperiment(list(counts = counts[, cell_ids]))

sce <- cxds_bcds_hybrid(sce)

doublet_preds <- colData(sce)

used (Mb) gc trigger (Mb) max used (Mb)

Ncells 8111953 433.3 14168290 756.7 11252398 601.0

Vcells 181810210 1387.2 436715070 3331.9 436711315 3331.9

High-level overview of quality control and filtering

First we will walk through some of the typical quantities one examines when conducting quality control of scRNA-Seq data.

Filtering Genes by Counts

As mentioned in an earlier lesson, the counts matrix is sparse and may contain rows (genes) or columns (cells) with low overall counts. In the case of genes, we likely wish to exclude genes with zeros counts in most cells. Let’s see how many genes have zeros counts across all cells. Note that the Matrix package has a special implementation of rowSums which works with sparse matrices.

gene_counts <- Matrix::rowSums(counts)

sum(gene_counts == 0)

[1] 7322

Of the 31053 genes, 7322 have zero counts across all cells. These genes do not inform us about the mean, variance, or covariance of any of the other genes and we will remove them before proceeding with further analysis.

counts <- counts[gene_counts > 0,]

This leaves 23731 genes in the counts matrix.

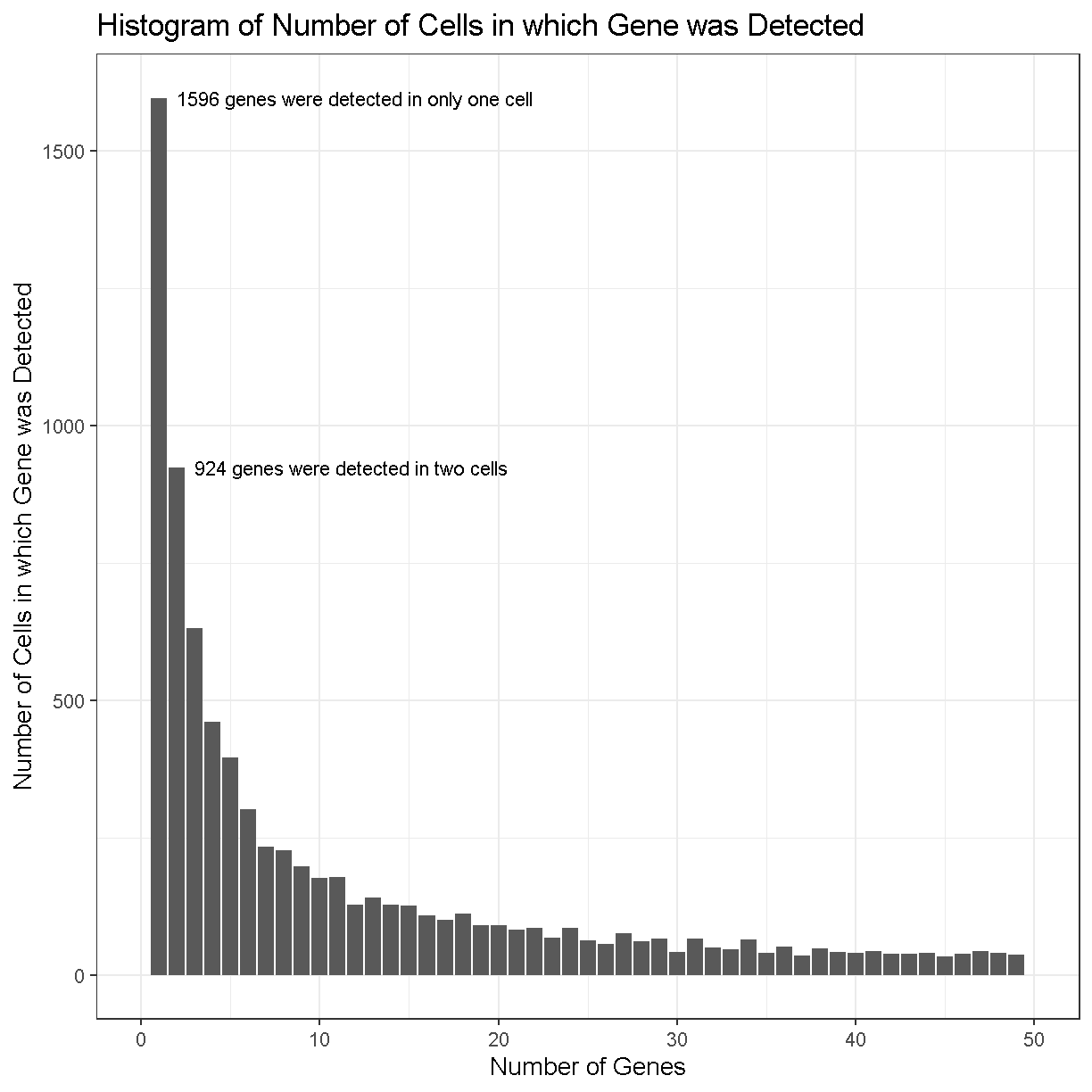

We could also set some other threshold for filtering genes. Perhaps we should

look at the number of genes that have different numbers of counts. We will use

a histogram to look at the distribution of overall gene counts. Note that, since

we just resized the counts matrix, we need to recalculate gene_counts.

We will count the number of cells in which each gene was detected. Because

counts is a sparse matrix, we have to be careful not to perform operations

that would convert the entire matrix into a non-sparse matrix. This might

happen if we wrote code like:

gene_counts <- rowSums(counts > 0)

The expression counts > 0 would create a logical matrix that takes up much

more memory than the sparse matrix. We might be tempted to try

rowSums(counts == 0), but this would also result in a non-sparse matrix

because most of the values would be TRUE. However, if we evaluate

rowSums(counts != 0), then most of the values would be FALSE, which can be

stored as 0 and so the matrix would still be sparse. The Matrix package has

an implementation of ‘rowSums()’ that is efficient, but you may have to specify

that you want to used the Matrix version of ‘rowSums()’ explicitly.

The number of cells in which each gene is detected spans several orders of magnitude and this makes it difficult to interpret the plot. Some genes are detected in all cells while others are detected in only one cell. Let’s zoom in on the part with lower gene counts.

gene_counts <- tibble(counts = Matrix::rowSums(counts > 0))

gene_counts %>%

dplyr::count(counts) %>%

ggplot(aes(counts, n)) +

geom_col() +

labs(title = 'Histogram of Number of Cells in which Gene was Detected',

x = 'Number of Genes',

y = 'Number of Cells in which Gene was Detected') +

lims(x = c(0, 50)) +

theme_bw(base_size = 14) +

annotate('text', x = 2, y = 1596, hjust = 0,

label = str_c(sum(gene_counts == 1), ' genes were detected in only one cell')) +

annotate('text', x = 3, y = 924, hjust = 0,

label = str_c(sum(gene_counts == 2), ' genes were detected in two cells'))

Warning: Removed 9335 rows containing missing values or values outside the

scale range (`geom_col()`).

plot of chunk gene_count_hist_2

In the plot above, we can see that there are 1596 genes that were detected in only one cell, 924 genes detected in two cells, etc.

Making a decision to keep or remove a gene based on its expression being detected in a certain number of cells can be tricky. If you retain all genes, you may consume more computational resources and add noise to your analysis. If you discard too many genes, you may miss rare but important cell types.

Consider this example: You have a total of 10,000 cells in your scRNA-seq results. There is a rare cell population consisting of 100 cells that expresses 20 genes which are not expressed in any other cell type. If you only retain genes that are detected in more than 100 cells, you will miss this cell population.

Challenge 1

How would filtering genes too strictly affect your results? How would this affect your ability to discriminate between cell types?

Solution to Challenge 1

Filtering too strictly would make it more difficult to distinguish between cell types. The degree to which this problem affects your analyses depends on the degree of strictness of your filtering. Let’s take the situation to its logical extreme – what if we keep only genes expressed in at least 95% of cells. If we did this, we would end up with only 41 genes! By definition these genes will be highly expressed in all cell types, therefore eliminating our ability to clearly distinguish between cell types.

Challenge 2

What total count threshold would you choose to filter genes? Remember that there are 47,743 cells.

Solution to Challenge 2

This is a question that has a somewhat imprecise answer. Following from challenge one, we do not want to be too strict in our filtering. However, we do want to remove genes that will not provide much information about gene expression variability among the cells in our dataset. Our recommendation would be to filter genes expressed in < 5 cells, but one could reasonably justify a threshold between, say, 3 and 20 cells.

Filtering Cells by Counts

Next we will look at the number of genes expressed in each cell. If a cell lyses and leaks RNA,the total number of reads in a cell may be low, which leads to lower gene counts. Furthermore, each single cell suspension likely contains some amount of so-called “ambient” RNA from damaged/dead/dying cells. This ambient RNA comes along for the ride in every droplet. Therefore even droplets that do not contain cells (empty droplets) can have some reads mapping to transcripts that look like gene expression. Filtering out these kinds of cells is a quality control step that should improve your final results.

We will explicitly use the Matrix package’s implementation of ‘colSums()’.

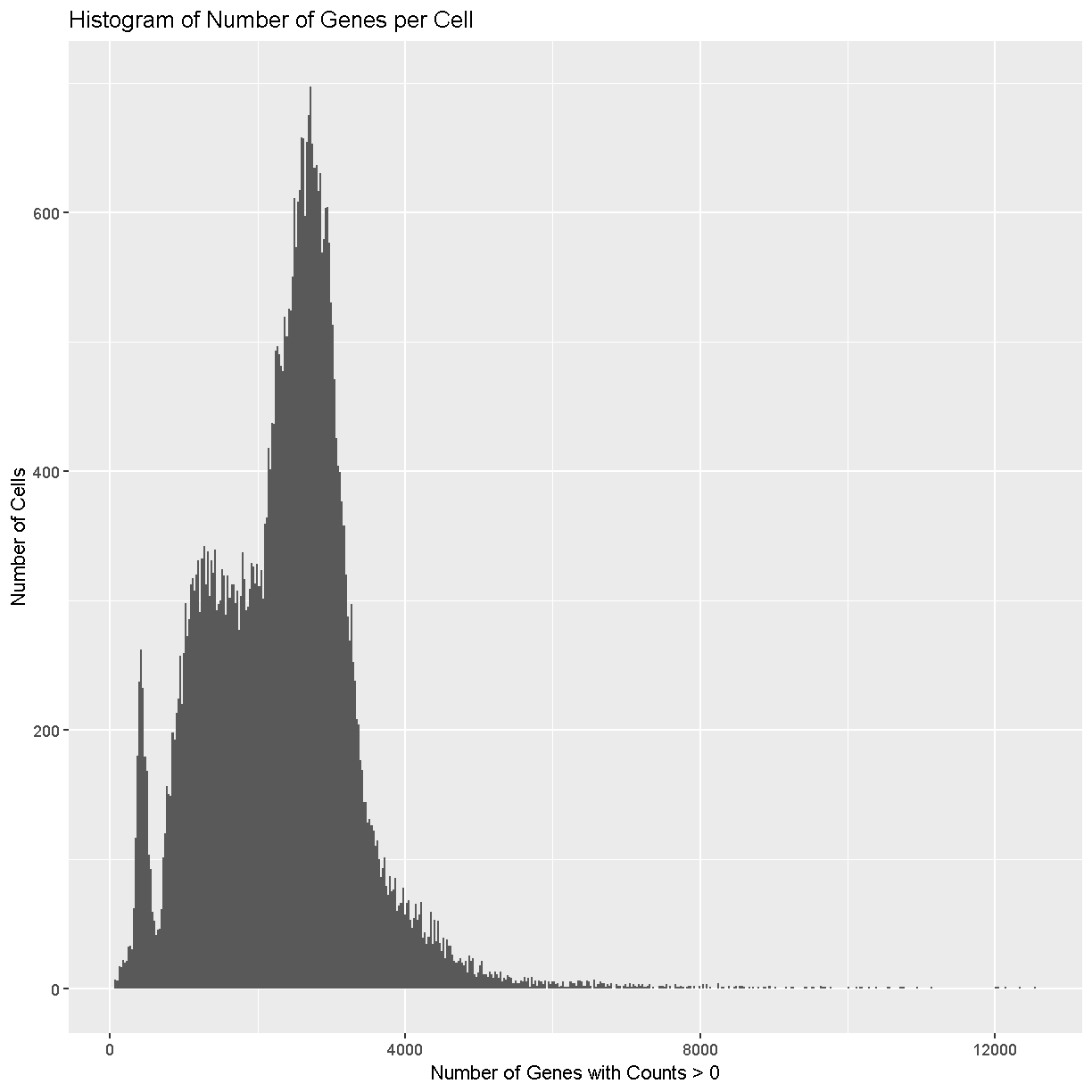

tibble(counts = Matrix::colSums(counts > 0)) %>%

ggplot(aes(counts)) +

geom_histogram(bins = 500) +

labs(title = 'Histogram of Number of Genes per Cell',

x = 'Number of Genes with Counts > 0',

y = 'Number of Cells')

plot of chunk sum_cell_counts

Cells with way more genes expressed than the typical cell might be doublets/multiplets and should also be removed.

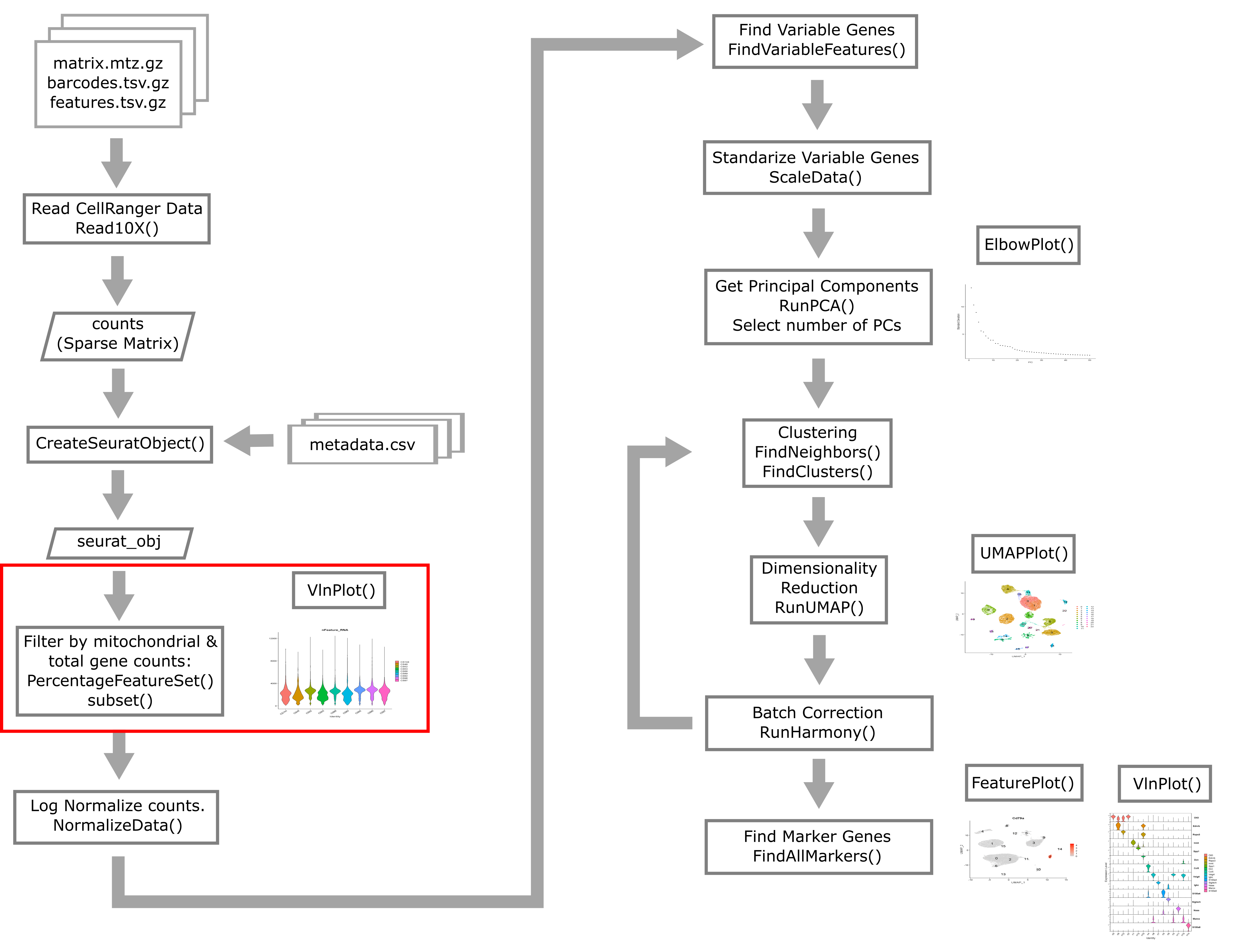

Creating the Seurat Object

In order to use Seurat, we must take the sample metadata and gene counts and create a Seurat Object. This is a data structure which organizes the data and metadata and will store aspects of the analysis as we progress through the workshop.

Below, we will create a Seurat object for the liver data. We must first convert the cell metadata into a data.frame and place the barcodes in rownames. The we will pass the counts and metadata into the CreateSeuratObject function to create the Seurat object.

In the section above, we examined the counts across genes and cells and proposed filtering using thresholds. The CreateSeuratObject function contains two arguments, ‘min.cells’ and ‘min.features’, that allow us to filter the genes and cells by counts. Although we might use these arguments for convenience in a typical analysis, for this course we will look more closely at these quantities on a per-library basis to decide on our filtering thresholds. We will us the ‘min.cells’ argument to filter out genes that occur in less than 5 cells.

# set a seed for reproducibility in case any randomness used below

set.seed(1418)

metadata <- as.data.frame(metadata) %>%

column_to_rownames('cell')

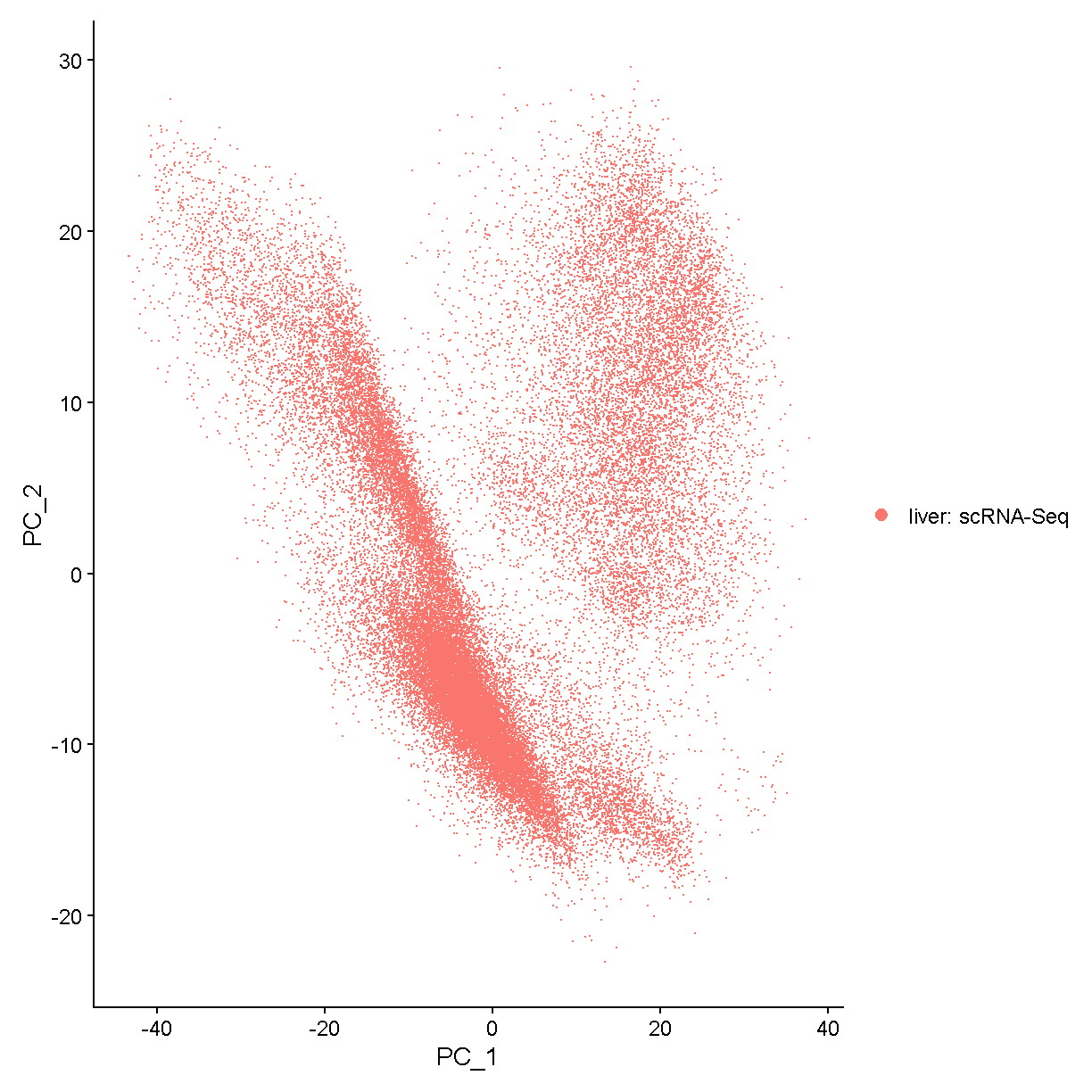

liver <- CreateSeuratObject(counts = counts,

project = 'liver: scRNA-Seq',

meta.data = metadata,

min.cells = 5)

We now have a Seurat object with 20,120 genes and 47,743 cells.

We will remove the counts object to save some memory because it is now stored inside of the Seurat object.

rm(counts)

gc()

used (Mb) gc trigger (Mb) max used (Mb)

Ncells 8269380 441.7 14168290 756.7 12310063 657.5

Vcells 182530194 1392.6 596761865 4553.0 629553222 4803.2

Add on doublet predictions that we did earlier in this lesson.

liver <- AddMetaData(liver, as.data.frame(doublet_preds))

Let’s briefly look at the structure of the Seurat object. The counts are stored

as an assay, which we can query using the Assays() function.

Seurat::Assays(liver)

[1] "RNA"

The output of this function tells us that we have data in an “RNA assay. We can access this using the GetAssayData function.

tmp <- GetAssayData(object = liver, layer = 'counts')

tmp[1:5,1:5]

5 x 5 sparse Matrix of class "dgCMatrix"

AAACGAATCCACTTCG-2 AAAGGTACAGGAAGTC-2 AACTTCTGTCATGGCC-2

Xkr4 . . .

Rp1 . . .

Sox17 . . 2

Mrpl15 . . .

Lypla1 . . 2

AATGGCTCAACGGTAG-2 ACACTGAAGTGCAGGT-2

Xkr4 . .

Rp1 . .

Sox17 4 .

Mrpl15 1 1

Lypla1 1 .

As you can see the data that we retrieved is a sparse matrix, just like the counts that we provided to the Seurat object.

What about the metadata? We can access the metadata as follows:

head(liver)

orig.ident nCount_RNA nFeature_RNA sample digest

AAACGAATCCACTTCG-2 liver: scRNA-Seq 8476 3264 CS48 inVivo

AAAGGTACAGGAAGTC-2 liver: scRNA-Seq 8150 3185 CS48 inVivo

AACTTCTGTCATGGCC-2 liver: scRNA-Seq 8139 3280 CS48 inVivo

AATGGCTCAACGGTAG-2 liver: scRNA-Seq 10083 3716 CS48 inVivo

ACACTGAAGTGCAGGT-2 liver: scRNA-Seq 9517 3543 CS48 inVivo

ACCACAACAGTCTCTC-2 liver: scRNA-Seq 7189 3064 CS48 inVivo

ACGATGTAGTGGTTCT-2 liver: scRNA-Seq 7437 3088 CS48 inVivo

ACGCACGCACTAACCA-2 liver: scRNA-Seq 8162 3277 CS48 inVivo

ACTGCAATCAACTCTT-2 liver: scRNA-Seq 7278 3144 CS48 inVivo

ACTGCAATCGTCACCT-2 liver: scRNA-Seq 9584 3511 CS48 inVivo

typeSample cxds_score bcds_score hybrid_score

AAACGAATCCACTTCG-2 scRnaSeq NA NA NA

AAAGGTACAGGAAGTC-2 scRnaSeq NA NA NA

AACTTCTGTCATGGCC-2 scRnaSeq NA NA NA

AATGGCTCAACGGTAG-2 scRnaSeq NA NA NA

ACACTGAAGTGCAGGT-2 scRnaSeq NA NA NA

ACCACAACAGTCTCTC-2 scRnaSeq NA NA NA

ACGATGTAGTGGTTCT-2 scRnaSeq NA NA NA

ACGCACGCACTAACCA-2 scRnaSeq NA NA NA

ACTGCAATCAACTCTT-2 scRnaSeq NA NA NA

ACTGCAATCGTCACCT-2 scRnaSeq NA NA NA

Notice that there are some columns that were not in our original metadata file; specifically the ‘nCount_RNA’ and ‘nFeature_RNA’ columns.

- nCount_RNA is the total counts for each cell.

- nFeature_RNA is the number of genes with counts > 0 in each cell.

These were calculated by Seurat when the Seurat object was created. We will use these later in the lesson.

Typical filters for cell quality

Here we briefly review these filters and decide what thresholds we will use for these data.

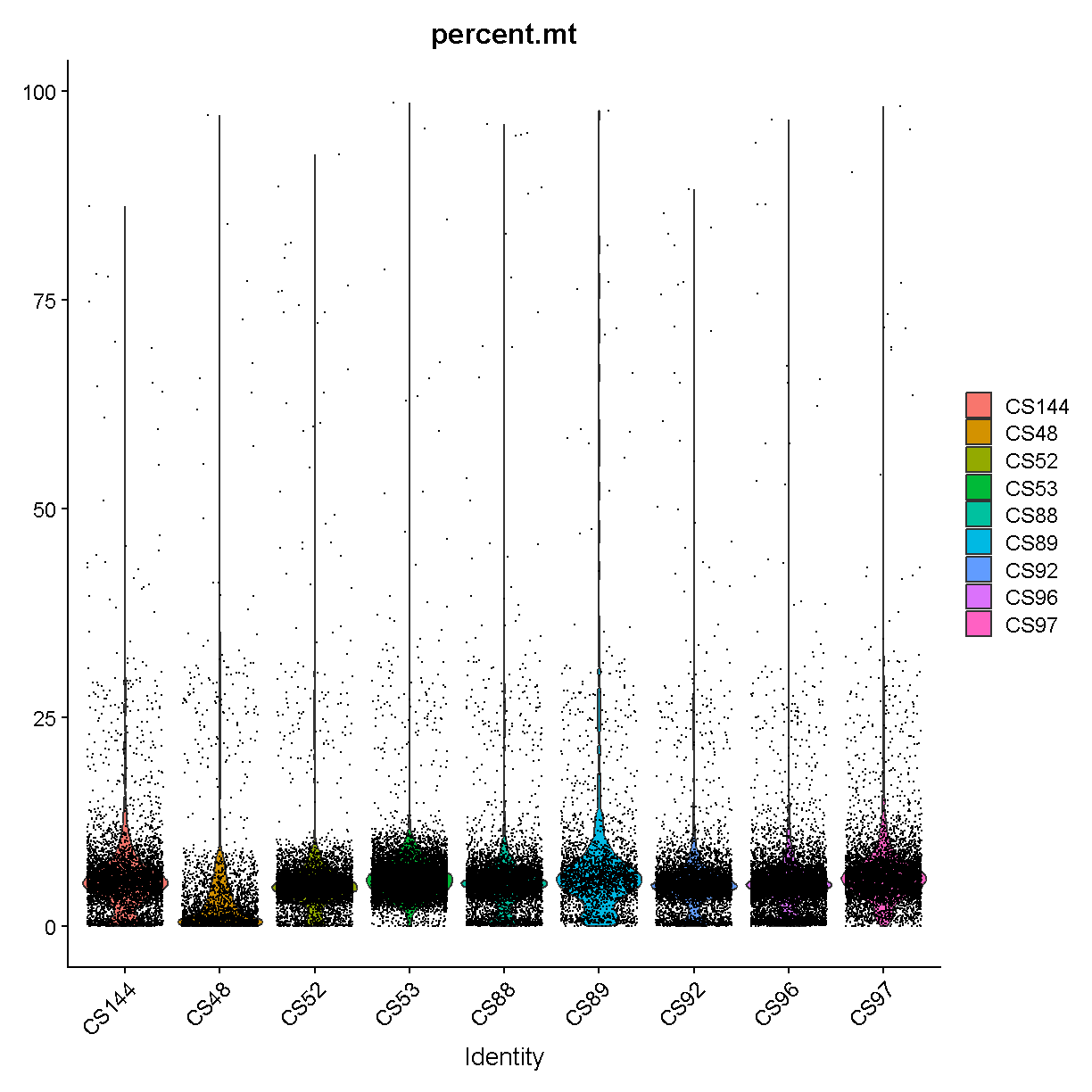

Filtering by Mitochondrial Gene Content

During apoptosis, the cell membrane may break and release transcripts into the surrounding media. However, the mitochondrial transcripts may remain inside of the mitochondria. This will lead to an apparent, but spurious, increase in mitochondrial gene expression. As a result, we use the percentage of mitochondrial-encoded reads to filter out cells that were not healthy during sample processing. See this link from 10X Genomics for additional information.

First we compute the percentage mitochondrial gene expression in each cell.

liver <- liver %>%

PercentageFeatureSet(pattern = "^mt-", col.name = "percent.mt")

Different cell types may have different levels of mitochondrial RNA content. Therefore we must use our knowledge of the particular biological system that we are profiling in order to choose an appropriate threshold. If we are profiling single nuclei instead of single cells we might consider a very low threshold for MT content. If we are profiling a tissue where we anticipate broad variability in levels of mitochondrial RNA content between cell types, we might use a very lenient threshold to start and then return to filter out additional cells after we obtain tentative cell type labels that we have obtained by carrying out normalization and clustering. In this course we will filter only once

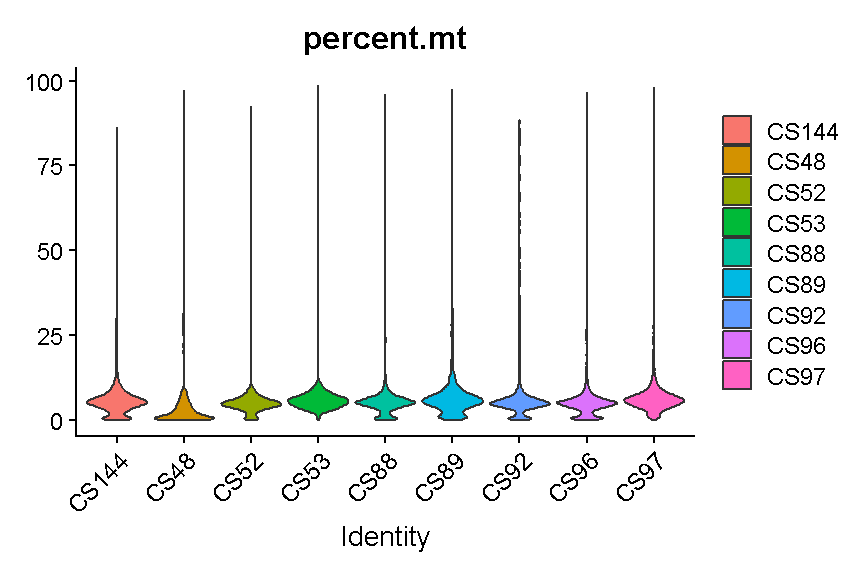

VlnPlot(liver, features = "percent.mt", group.by = 'sample')

Warning: Default search for "data" layer in "RNA" assay yielded no results;

utilizing "counts" layer instead.

plot of chunk seurat_counts_plots

It is hard to see with so many dots! Let’s try another version where we just plot the violins:

VlnPlot(liver, features = "percent.mt", group.by = 'sample', pt.size = 0)

Warning: Default search for "data" layer in "RNA" assay yielded no results;

utilizing "counts" layer instead.

plot of chunk seurat_counts_plots2

Library “CS89” (and maybe CS144) have a “long tail” of cells with high mitochondrial gene expression. We may wish to monitor these libraries throughout QC and decide whether it has problems worth ditching the sample.

In most cases it would be ideal to determine separate filtering thresholds on each scRNA-Seq sample. This would account for the fact that the characteristics of each sample might vary (for many possible reasons) even if the same tissue is profiled. However, in this course we will see if we can find a single threshold that works decently well across all samples. As you can see, the samples we are examining do not look drastically different so this may not be such an unrealistic simplification.

We will use a threshold of 14% mitochondrial gene expression which will

remove the “long tail” of cells with high percent.mt values. We could

also perhaps justify going as low as 10% to be more conservative,

but we likely would not want to go down to 5%, which would

remove around half the cells.

# Don't run yet, we will filter based on several criteria below

#liver <- subset(liver, subset = percent.mt < 14)

Filtering Cells by Total Gene Counts

Let’s look at how many genes are expressed in each cell. Again we’ll split by the mouse ID so we can see if there are particular samples that are very different from the rest. Again we will show only the violins for clarity.

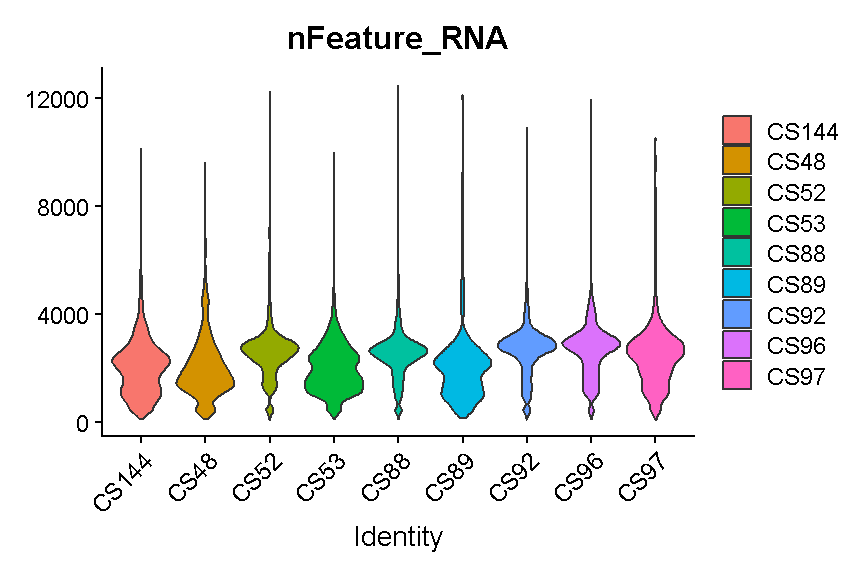

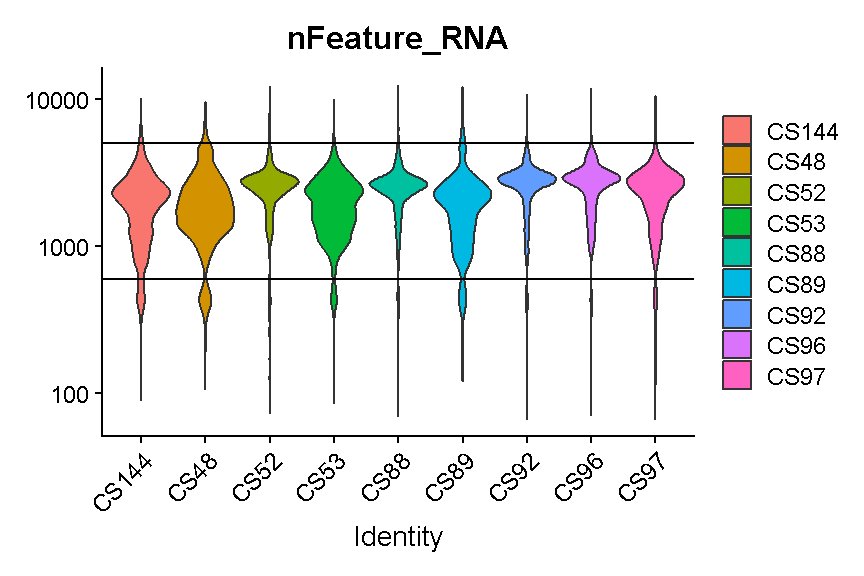

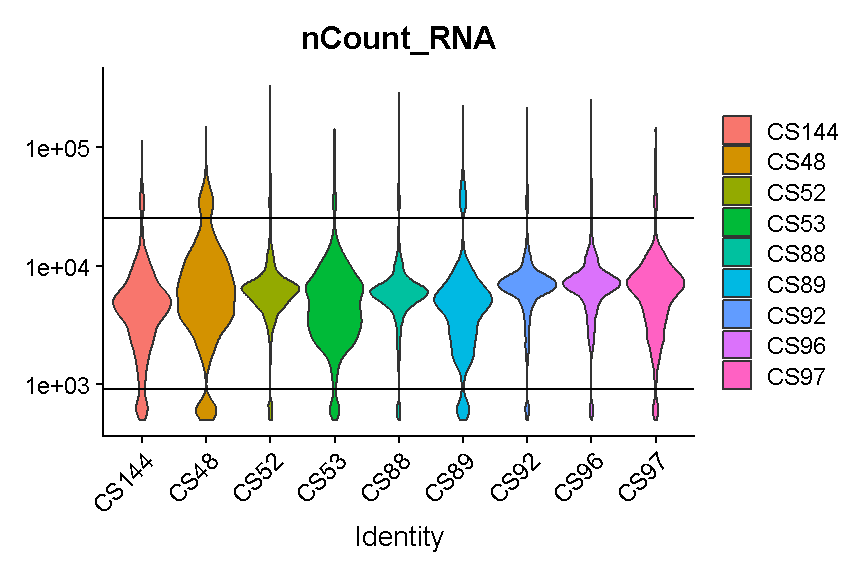

VlnPlot(liver, 'nFeature_RNA', group.by = 'sample', pt.size = 0)

Warning: Default search for "data" layer in "RNA" assay yielded no results;

utilizing "counts" layer instead.

plot of chunk filter_gene_counts

Like with the mitochondrial expression percentage, we will strive to find a threshold that works reasonably well across all samples. For the number of genes expressed we will want to filter out both cells that express to few genes and cells that express too many genes. As noted above, damaged or dying cells may leak RNA, resulting in a low number of genes expressed, and we want to filter out these cells to ignore their “damaged” transcriptomes. On the other hand, cells with way more genes expressed than the typical cell might be doublets/multiplets and should also be removed.

It looks like filtering out cells that express less than 400 or greater than 5,000 genes is a reasonable compromise across our samples. (Note the log scale in this plot, which is necessary for seeing the violin densities at low numbers of genes expressed).

VlnPlot(liver, 'nFeature_RNA', group.by = 'sample', pt.size = 0) +

scale_y_log10() +

geom_hline(yintercept = 600) +

geom_hline(yintercept = 5000)

Warning: Default search for "data" layer in "RNA" assay yielded no results;

utilizing "counts" layer instead.

Scale for y is already present.

Adding another scale for y, which will replace the existing scale.

plot of chunk filter_gene_counts_5k

#liver <- subset(liver, nFeature_RNA > 600 & nFeature_RNA < 5000)

Filtering Cells by UMI

A UMI – unique molecular identifier – is like a molecular barcode for each RNA molecule in the cell. UMIs are short, distinct oligonucleotides attached during the initial preparation of cDNA from RNA. Therefore each UMI is unique to a single RNA molecule.

Why are UMI useful? The amount of RNA in a single cell is quite low (approximately 10-30pg according to this link). Thus single cell transcriptomics profiling usually includes a PCR amplification step. PCR amplification is fairly “noisy” because small stochastic sampling differences can propagate through exponential amplification. Using UMIs, we can throw out all copies of the molecule except one (the copies we throw out are called “PCR duplicates”).

Several papers (e.g. Islam et al) have demonstrated that UMIs reduce amplification noise in single cell transcriptomics and thereby increase data fidelity. The only downside of UMIs is that they cause us to throw away a lot of our data (perhaps as high as 90% of our sequenced reads). Nevertheless, we don’t want those reads if they are not giving us new information about gene expression, so we tolerate this inefficiency.

CellRanger will automatically process your UMIs and the feature-barcode matrix it produces will be free from PCR duplicates. Thus, we can think of the number of UMIs as the sequencing depth of each cell.

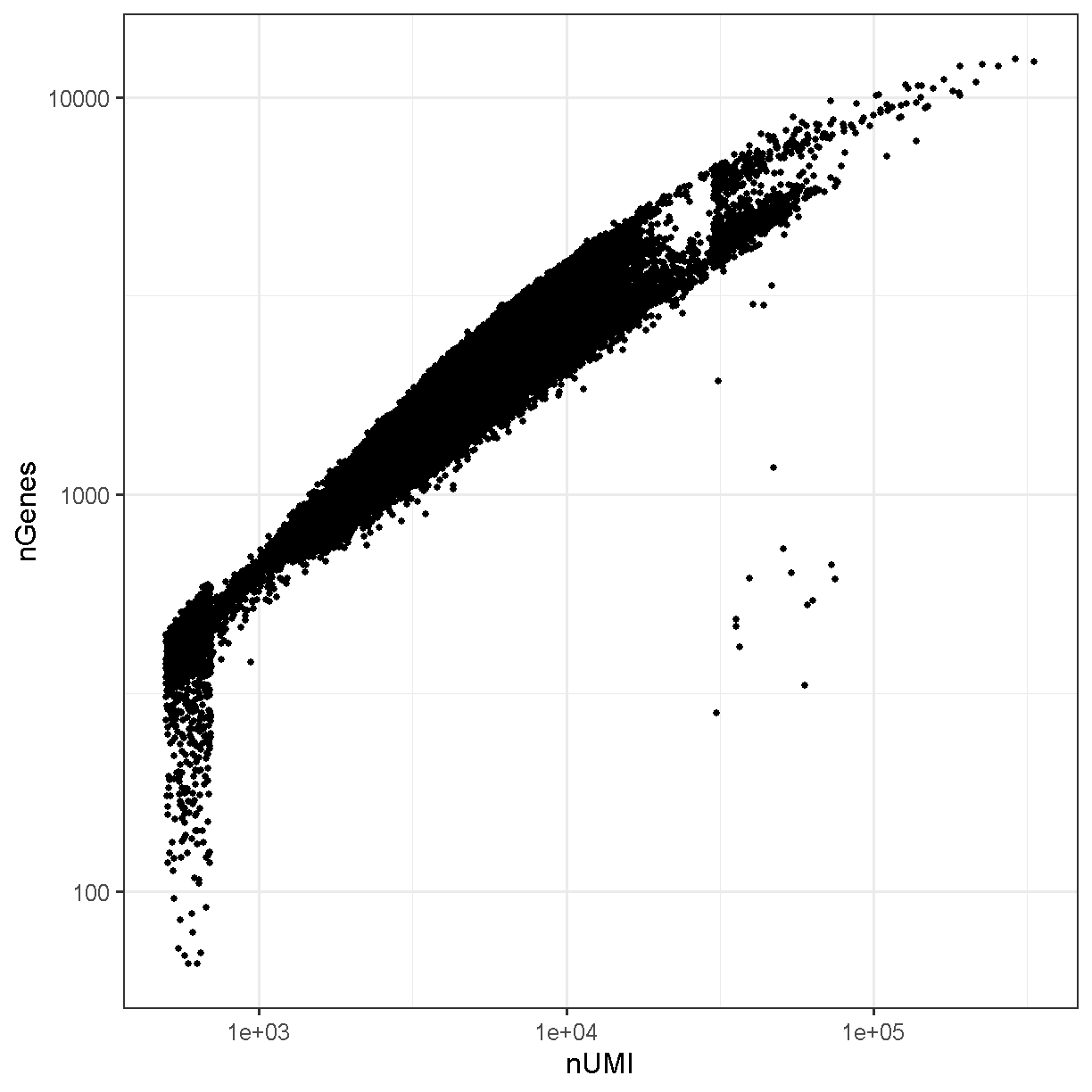

Typically the number of genes and number of UMI are highly correlated and this is mostly the case in our liver dataset:

ggplot(liver@meta.data, aes(x = nCount_RNA, y = nFeature_RNA)) +

geom_point() +

theme_bw(base_size = 16) +

xlab("nUMI") + ylab("nGenes") +

scale_x_log10() + scale_y_log10()

plot of chunk genes_umi

VlnPlot(liver, 'nCount_RNA', group.by = 'sample', pt.size = 0) +

scale_y_log10() +

geom_hline(yintercept = 900) +

geom_hline(yintercept = 25000)

Warning: Default search for "data" layer in "RNA" assay yielded no results;

utilizing "counts" layer instead.

Scale for y is already present.

Adding another scale for y, which will replace the existing scale.

plot of chunk filter_umi

# Don't run yet, we will filter based on several criteria below

#liver <- subset(liver, nCount_RNA > 900 & nCount_RNA < 25000)

Again we try to select thresholds that remove most of the strongest outliers in all samples.

Challenge 2

List two technical issues that can lead to poor scRNA-seq data quality and which filters we use to detect each one.

Solution to Challenge 2

1 ). Cell membranes may rupture during the disassociation protocol, which is indicated by high mitochondrial gene expression because the mitochondrial transcripts are contained within the mitochondria, while other transcripts in the cytoplasm may leak out. Use the mitochondrial percentage filter to try to remove these cells.

2 ). Cells may be doublets of two different cell types. In this case they might express many more genes than either cell type alone. Use the “number of genes expressed” filter to try to remove these cells.

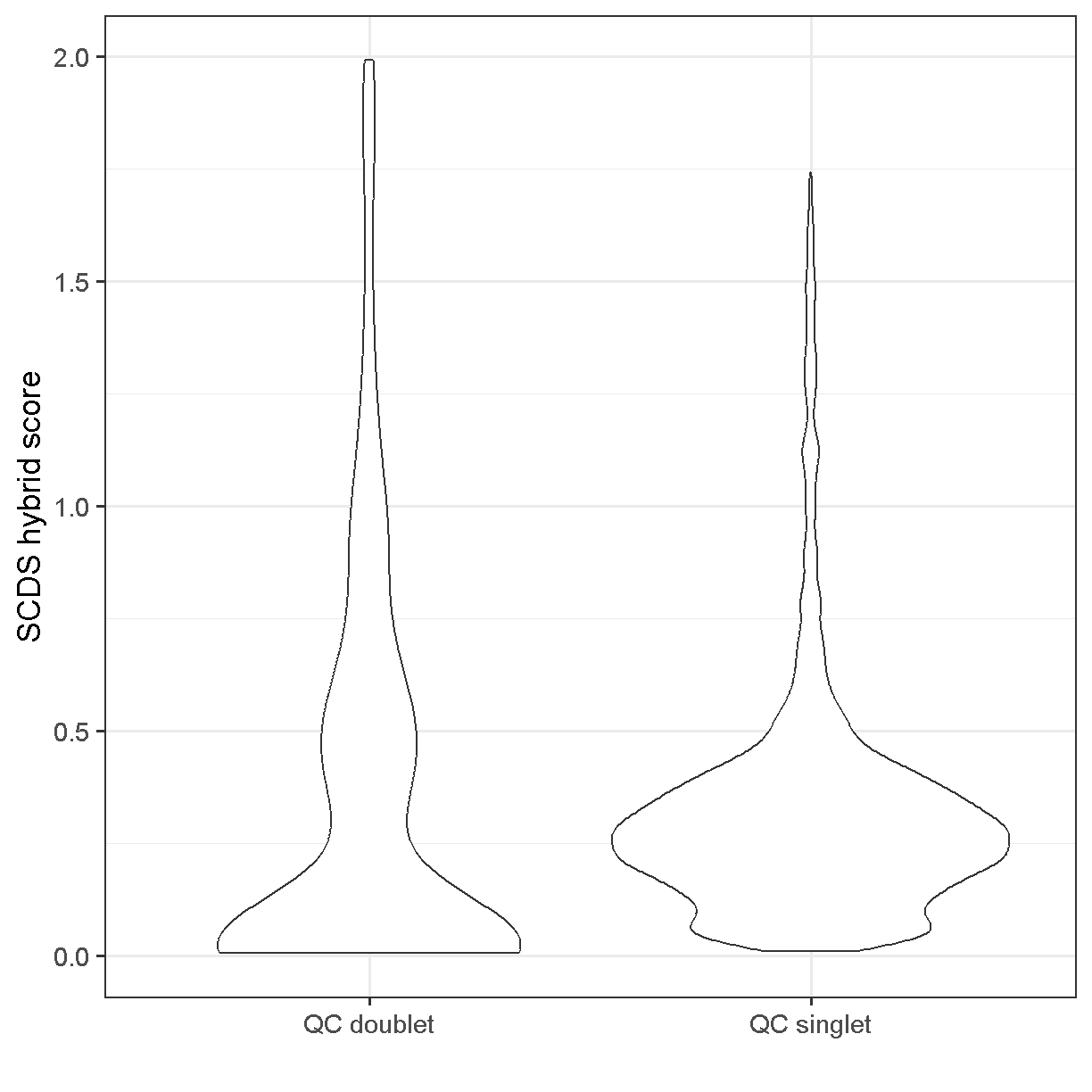

Doublet detection revisited

Let’s go back to our doublet predictions. How many of the cells that are going to be filtered out of our data were predicted to be doublets by scds?

liver$keep <- liver$percent.mt < 14 &

liver$nFeature_RNA > 600 &

liver$nFeature_RNA < 5000 &

liver$nCount_RNA > 900 &

liver$nCount_RNA < 25000

Using the scds hybrid_score method, the scores range between 0 and 2. Higher scores should be more likely to be doublets.

ggplot(mutate(liver[[]], class = ifelse(keep, 'QC singlet', 'QC doublet')),

aes(x = class, y = hybrid_score)) +

geom_violin() +

theme_bw(base_size = 18) +

xlab("") +

ylab("SCDS hybrid score")

Warning: Removed 42388 rows containing non-finite outside the scale range

(`stat_ydensity()`).

plot of chunk doublet_plot

Somewhat unsatisfyingly, the scds hybrid scores aren’t wildly different between the cells we’ve used QC thresholds to call as doublets vs singlets. There does seem to be an enrichment of cells with score >0.75 among the QC doublets. If we had run scds doublet prediction on all cells we might compare results with no scds score filtering to those with an scds score cutoff of, say, 1.0. One characteristic of the presence of doublet cells is a cell cluster located between two large and well-defined clusters that expresses markers of both of them (don’t worry, we will learn how to cluster and visualize data soon). Returning to the scds doublet scores, we could cluster our cells with and without doublet score filtering, and see if we note any putative doublet clusters.

Subset based on %MT, number of genes, and number of UMI thresholds

liver <- subset(liver, subset = percent.mt < 14 &

nFeature_RNA > 600 &

nFeature_RNA < 5000 &

nCount_RNA > 900 &

nCount_RNA < 25000)

Batch correction

We might want to correct for batch effects. This can be difficult to do because batch effects are complicated (in general), and may affect different cell types in different ways. Although correcting for batch effects is an important aspect of quality control, we will discuss this procedure in lesson 06 with some biological context.

Challenge 3

Delete the existing counts and metadata objects. Read in the ex-vivo data that you saved at the end of Lesson 03 (lesson03_challenge.Rdata) and create a Seurat object called ‘liver_2’. Look at the filtering quantities and decide whether you can use the same cell and feature filters that were used to create the Seurat object above.

Solution to Challenge 3

# Remove the existing counts and metadata.

rm(counts, metadata)

# Read in citeseq counts & metadata.

load(file = file.path(data_dir, 'lesson03_challenge.Rdata'))

# Create Seurat object.

metadata = as.data.frame(metadata) %>%

` column_to_rownames(‘cell’)liver_2 = CreateSeuratObject(count = counts, `

` project = ‘liver: ex-vivo’,meta.data = metadata)`

Challenge 4

Estimate the proportion of mitochondrial genes. Create plots of the proportion of features, cells, and mitochondrial genes. Filter the Seurat object by mitochondrial gene expression.

Solution to Challenge 4

liver_2 = liver_2 %>%` PercentageFeatureSet(pattern = “^mt-“, col.name = “percent.mt”)VlnPlot(liver_2, features = c(“nFeature_RNA”, “nCount_RNA”, “percent.mt”), ncol = 3)liver_2 = subset(liver_2, subset = percent.mt < 10)`

Session Info

sessionInfo()

R version 4.4.0 (2024-04-24 ucrt)

Platform: x86_64-w64-mingw32/x64

Running under: Windows 10 x64 (build 19045)

Matrix products: default

locale:

[1] LC_COLLATE=English_United States.utf8

[2] LC_CTYPE=English_United States.utf8

[3] LC_MONETARY=English_United States.utf8

[4] LC_NUMERIC=C

[5] LC_TIME=English_United States.utf8

time zone: America/New_York

tzcode source: internal

attached base packages:

[1] stats4 stats graphics grDevices utils datasets methods

[8] base

other attached packages:

[1] Seurat_5.1.0 SeuratObject_5.0.2

[3] sp_2.1-4 scds_1.20.0

[5] SingleCellExperiment_1.26.0 SummarizedExperiment_1.34.0

[7] Biobase_2.64.0 GenomicRanges_1.56.2

[9] GenomeInfoDb_1.40.1 IRanges_2.38.1

[11] S4Vectors_0.42.1 BiocGenerics_0.50.0

[13] MatrixGenerics_1.16.0 matrixStats_1.4.1

[15] Matrix_1.7-0 lubridate_1.9.3

[17] forcats_1.0.0 stringr_1.5.1

[19] dplyr_1.1.4 purrr_1.0.2

[21] readr_2.1.5 tidyr_1.3.1

[23] tibble_3.2.1 ggplot2_3.5.1

[25] tidyverse_2.0.0 knitr_1.48

loaded via a namespace (and not attached):

[1] RColorBrewer_1.1-3 rstudioapi_0.16.0 jsonlite_1.8.9

[4] magrittr_2.0.3 ggbeeswarm_0.7.2 spatstat.utils_3.1-0

[7] farver_2.1.2 zlibbioc_1.50.0 vctrs_0.6.5

[10] ROCR_1.0-11 spatstat.explore_3.3-2 htmltools_0.5.8.1

[13] S4Arrays_1.4.1 xgboost_1.7.8.1 SparseArray_1.4.8

[16] pROC_1.18.5 sctransform_0.4.1 parallelly_1.38.0

[19] KernSmooth_2.23-24 htmlwidgets_1.6.4 ica_1.0-3

[22] plyr_1.8.9 plotly_4.10.4 zoo_1.8-12

[25] igraph_2.0.3 mime_0.12 lifecycle_1.0.4

[28] pkgconfig_2.0.3 R6_2.5.1 fastmap_1.2.0

[31] GenomeInfoDbData_1.2.12 fitdistrplus_1.2-1 future_1.34.0

[34] shiny_1.9.1 digest_0.6.35 colorspace_2.1-1

[37] patchwork_1.3.0 tensor_1.5 RSpectra_0.16-2

[40] irlba_2.3.5.1 labeling_0.4.3 progressr_0.14.0

[43] spatstat.sparse_3.1-0 fansi_1.0.6 timechange_0.3.0

[46] polyclip_1.10-7 httr_1.4.7 abind_1.4-8

[49] compiler_4.4.0 withr_3.0.1 fastDummies_1.7.4

[52] highr_0.11 MASS_7.3-61 DelayedArray_0.30.1

[55] tools_4.4.0 vipor_0.4.7 lmtest_0.9-40

[58] beeswarm_0.4.0 httpuv_1.6.15 future.apply_1.11.2

[61] goftest_1.2-3 glue_1.8.0 nlme_3.1-165

[64] promises_1.3.0 grid_4.4.0 Rtsne_0.17

[67] cluster_2.1.6 reshape2_1.4.4 generics_0.1.3

[70] spatstat.data_3.1-2 gtable_0.3.5 tzdb_0.4.0

[73] data.table_1.16.2 hms_1.1.3 utf8_1.2.4

[76] XVector_0.44.0 spatstat.geom_3.3-3 RcppAnnoy_0.0.22

[79] ggrepel_0.9.6 RANN_2.6.2 pillar_1.9.0

[82] spam_2.11-0 RcppHNSW_0.6.0 later_1.3.2

[85] splines_4.4.0 lattice_0.22-6 deldir_2.0-4

[88] survival_3.7-0 tidyselect_1.2.1 miniUI_0.1.1.1

[91] pbapply_1.7-2 gridExtra_2.3 scattermore_1.2

[94] xfun_0.44 stringi_1.8.4 UCSC.utils_1.0.0

[97] lazyeval_0.2.2 evaluate_1.0.1 codetools_0.2-20

[100] cli_3.6.3 uwot_0.2.2 xtable_1.8-4

[103] reticulate_1.39.0 munsell_0.5.1 Rcpp_1.0.13

[106] spatstat.random_3.3-2 globals_0.16.3 png_0.1-8

[109] ggrastr_1.0.2 spatstat.univar_3.0-1 parallel_4.4.0

[112] dotCall64_1.2 listenv_0.9.1 viridisLite_0.4.2

[115] scales_1.3.0 ggridges_0.5.6 leiden_0.4.3.1

[118] crayon_1.5.3 rlang_1.1.4 cowplot_1.1.3

Key Points

It is essential to filter based on criteria including mitochondrial gene expression and number of genes expressed in a cell.

Determining your filtering thresholds should be done separately for each experiment, and these values can vary dramatically in different settings.

Common Analyses

Overview

Teaching: 120 min

Exercises: 10 minQuestions

What are the most common single cell RNA-Seq analyses?

Objectives

Understand the form of common Seurat commands and how to chain them together.

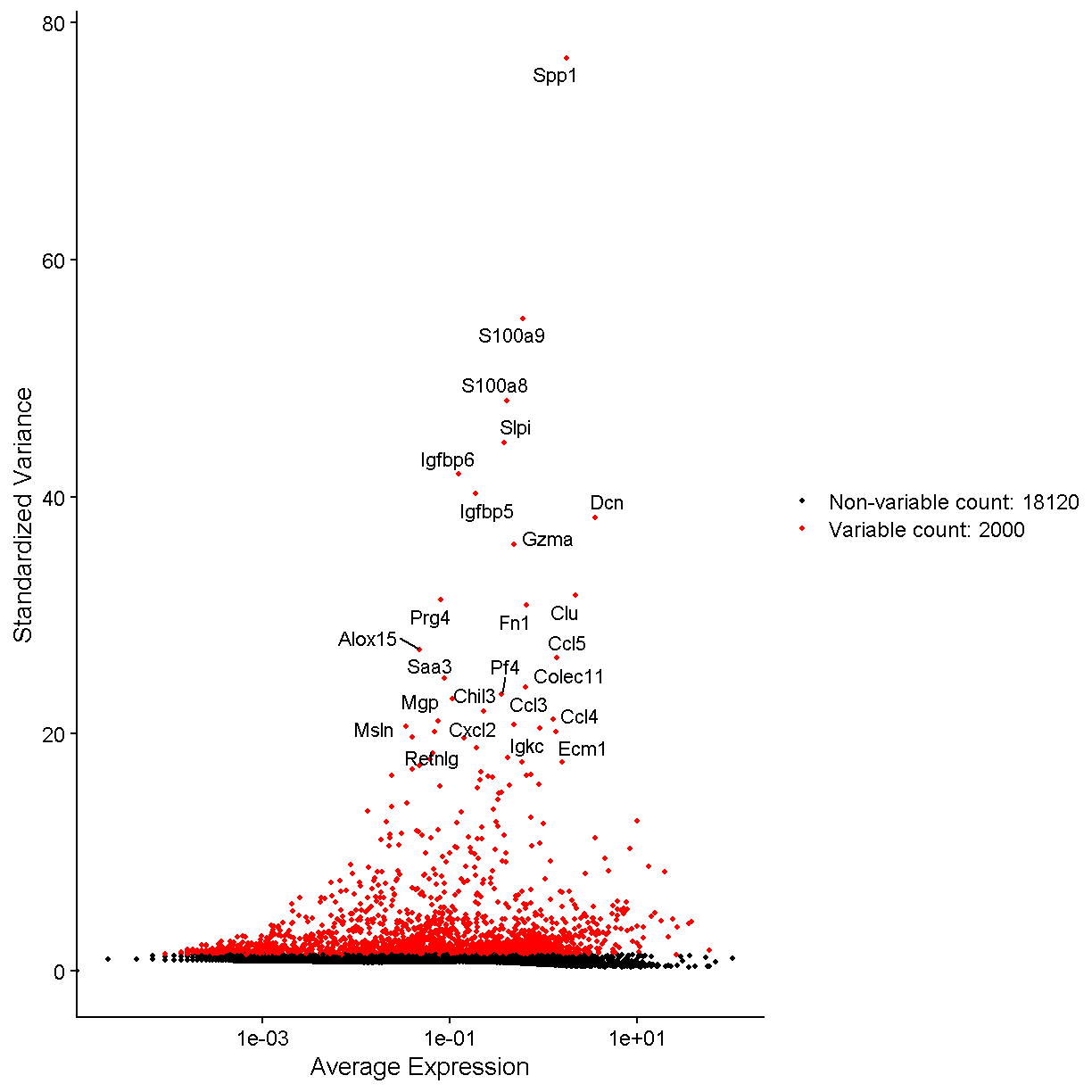

Learn how to normalize, find variable features, reduce dimensionality, and cluster your scRNA-Seq data.

suppressPackageStartupMessages(library(tidyverse))

suppressPackageStartupMessages(library(Seurat))

data_dir <- 'data'

# set a seed for reproducibility in case any randomness used below

set.seed(1418)

A Note on Seurat Functions

The Seurat package is set up so that we primarily work with a

Seurat object containing our single cell data and metadata.

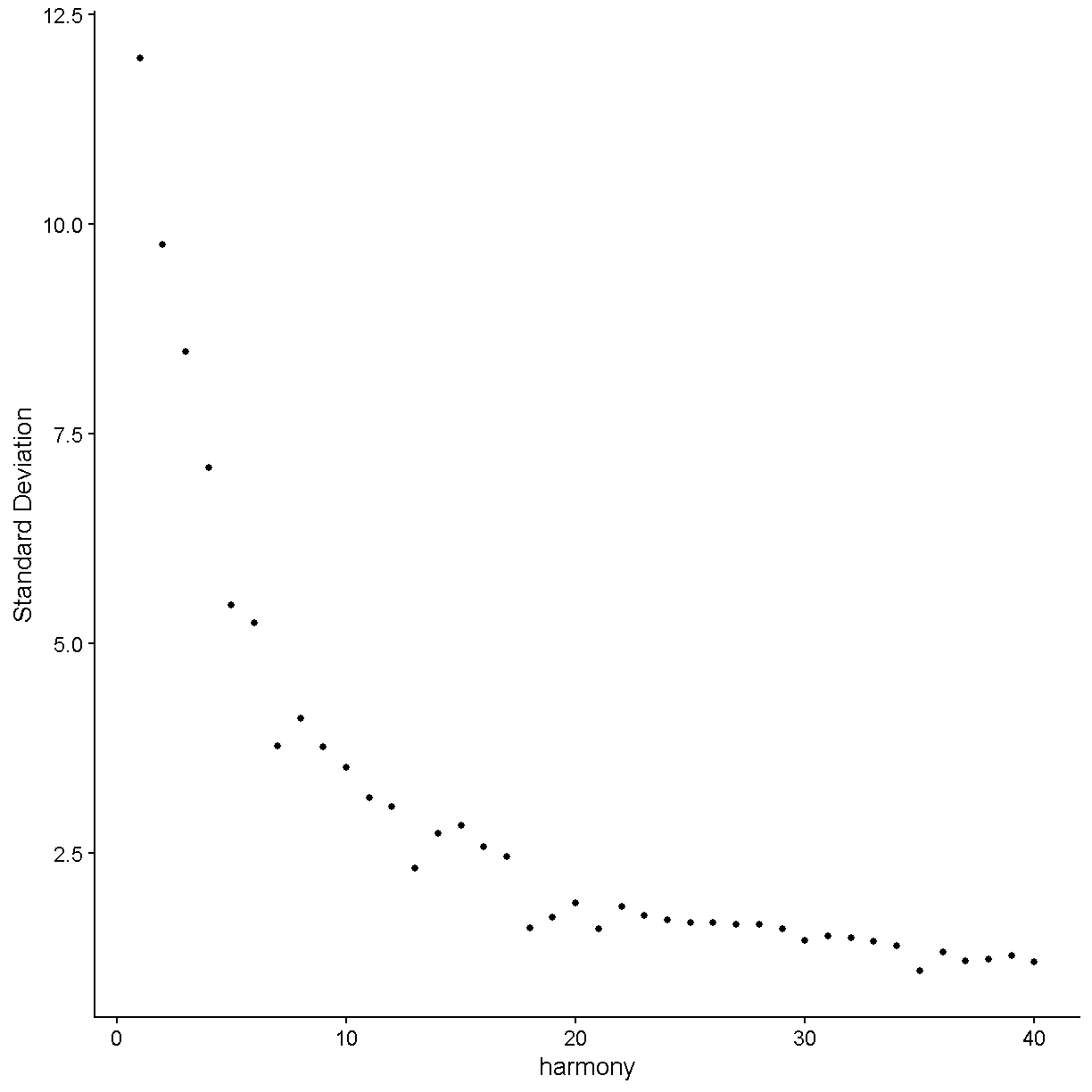

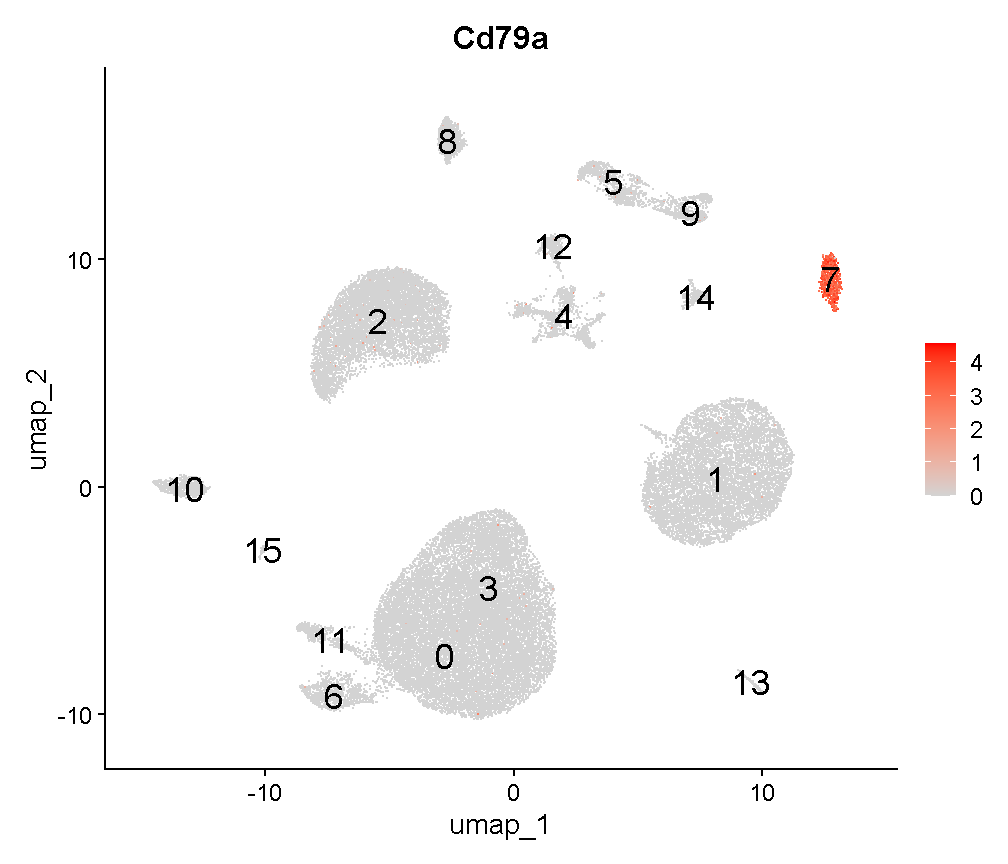

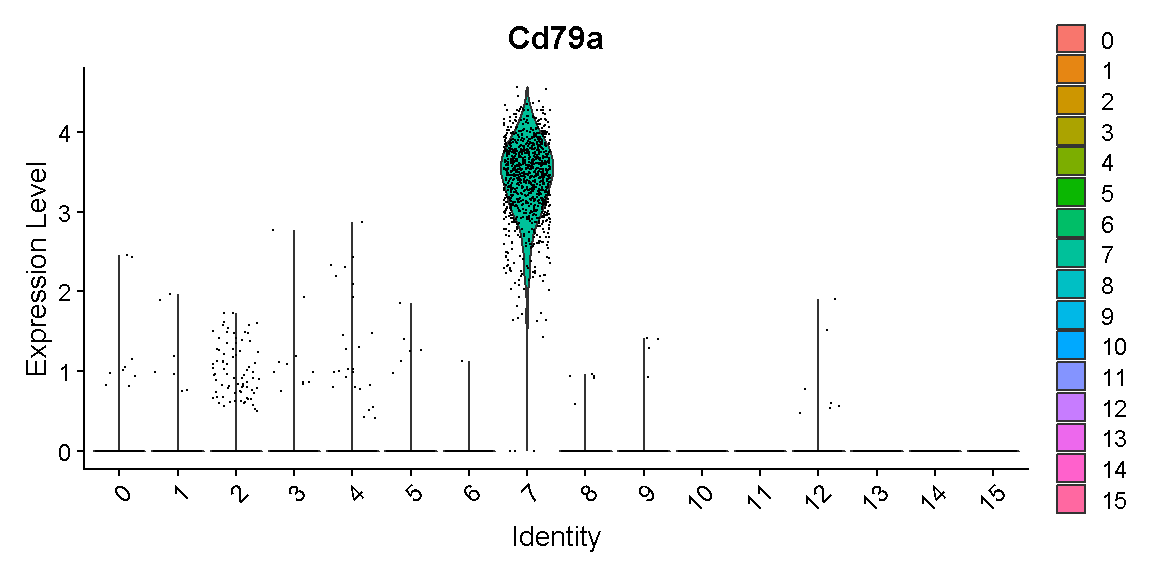

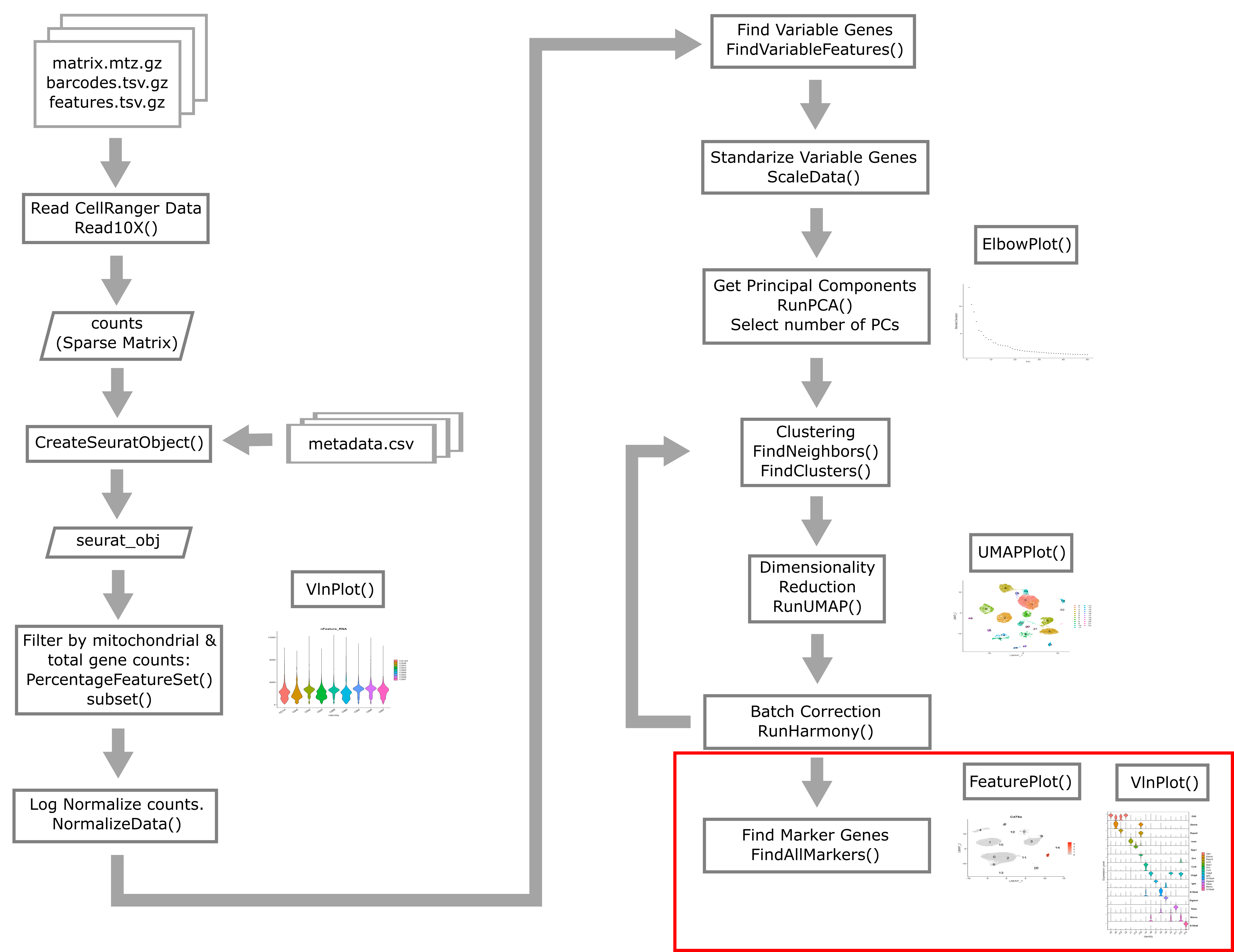

Let’s say we are working with our Seurat object liver.