Content from Genotyping by RNA Sequencing

Last updated on 2024-09-06 | Edit this page

Estimated time: 12 minutes

Overview

Questions

- What is Genotyping by RNA Sequencing (GBRS)?

- What analyses does GBRS provide?

- What are the steps in a GBRS analysis?

Objectives

- Explain what GBRS does.

- Understand when and why you would use GBRS for transcript quantification.

- List the steps in a GBRS analysis.

Introduction

Genotyping by RNA Sequencing (GBRS) is a suite of tools which estimates allele-specific transcript expression and performs haplotype reconstruction in multi-founder mouse crosses. GBRS can also by used to analyze transcript expression in other organisms, but this tutorial will focus on mouse crosses. We have configured GBRS to use the Nextflow workflow software and Singularity containers.

Prerequisites

This tutorial assumes that you have a background in genetics and are familiar with bash command-line programming.

In the area of genetics, we assume that:

- you understand the folloiwng terms: inbred strain, recombinant inbred strain, multi-founder advanced generation intercross;

- you are familiar with the Diversity Outbred (J:DO) mouse population;

- you understand the concepts of haplotype reconstruction and allele- specific expression;

- you understand what RNASeq analysis is used for and what type of data it produces.

In the area of bash programming, we assume that:

- you can navigate the UNIX/Linux file system;

- you understand the difference between absolute and relative file paths;

- you can create and use bash variables;

- you understand what a software container is and know how to use one.

Background

There are many tool chains that can align RNASeq reads and quantify transcript expression. The alignment tools (i.e. bowtie or STAR) generally align the reads to the reference genome or transcriptome, allowing for gaps and mismatches. These methods work well for reads derived from mice with genomes that are similar to the reference, but may not provide accurate alignments for mouse genome that diverge from the reference genome. This may be particularly important when users need allele-specific expression estimates. Allele-specific expression provides an estimate of the expression of each of the two alleles in a diploid sample.

GBRS provides a suite of tools which aligns reads to pseudogenomes and then quantifies allele-specific expression in multi-founder mouse crosses. A “pseudo-genome” is a reference genome with SNPs and indels inserted from the genome of a non-reference mouse strain. By analogy, we can also create a “pseudo-transcriptome” for a non-reference inbred strain, which involves inserting SNPs and indels into the reference transcriptome. We then align RNASeq reads to multiple pseudo-transriptomes and use expectation maximization to estimate allele-specific transcript counts and total gene counts. In the process, we also reconstruct the haplotypes of each mouse in terms of the founder strain haplotypes.

The GBRS suite consists of three tools:

- g2gtools: given a reference genome and a VCF containing SNPs and indels, creates pseudo-genomes by inserting the SNPs and indels into the reference genome.

- EMASE: given a set of pseudogenomes and a transcriptome, create a multi-way transcriptome. EMASE also produces estimates of allele-specific transcript counts.

- GBRS: Genotyping-by-RNA-Sequencing uses the pesudo-genomes created by g2gtools and the RNASeq read alignments from EMASE to reconstruct the haplotypes of each sample in terms of the founder strain haplotypes.

GBRS is designed to work well with bulk RNASeq off with sufficient read depth to confidently call genetic variants. It does not work well with single-cell RNASeq because of the low sequencing depth in each cell.

Organization of this Tutorial

This tutorial is intended to cover all aspects of GBRS, from building reference genomes and transcriptomes through haplotype reconstruction and estimation of allele-specific transcript expression. All users may not need to perform all steps.

We envision four types of users:

- Users who are analyzing J:DO data

- inside of The Jackson Laboratory This is the simplest case. The Next-Generation Sequencing Operations group has created the reference files and a pipeline to execute this workflow. This case is covered in the “Running GBRS” lesson.

- outside of The Jackson Laboratory In this case, users will need to download and store the DO-related reference files and have Nextflow installed on their computing cluster. This case is covered in the “Running GBRS” lesson.

- Users who are analyzing other mouse crosses

- inside of The Jackson Laboratory Users will need to create reference files for the founder strains in their cross and point to them in their GBRS scripts. This is described in the “Preparing GBRS Reference Genomes” and “Preparing GBRS Reference Transcriptomes” lessons.

- outside of The Jackson Laboratory Users will need to create reference files for the founder strains in their cross and point to them in their GBRS scripts. This is described in the “Preparing GBRS Reference Genomes” and “Preparing GBRS Reference Transcriptomes” lessons.

Key Points

- GBRS is a suite of tools which includes pseudo-genome creation, allele- specific transcript estimation, and haplotype reconstruction.

- Users who have J:DO data and work at The Jackson Laboratory can use reference data stored on the high-performance computing cluster.

- Users outside of The Jackson Laboratory will need to create or download the reference files.

Content from Installing GBRS

Last updated on 2024-09-06 | Edit this page

Estimated time: 35 minutes

Overview

Questions

- What supporting software is needed to install GBRS?

- How do I configure my installation for my computing cluster?

- Where do I obtain the GBRS reference files?

Objectives

- Understand which supporting software is required by GBRS.

- Install supporting software and GBRS.

- Download the required reference files for Diversity Outbred mice.

Introduction

Genotyping by RNA Sequencing (GBRS) uses several pieces of supporting software to run. We use the Nextflow workflow software and Singularity containers. Your computing cluster will also have a job submission system. Examples of this are slurm or PBS.

Install Nextflow

Many computing clusters will have nextflow installed.

However, if it is not installed on your cluster, nextflow

provides

detailed

installation instructions. The installation requires a version of

Java as well.

You should contact your computing cluster administrator and ask them

if nextflow is already installed and, if so, how to access

it. Sometimes you need to add software with an explicit command

like:

module load nextflowInstall Singularity

There are several software container systems that are in popular use,

including Docker and

singularity.

Many computing clusters use Singularity because it does not

allow root access from within containers.

Again, you should contact your computing cluster administrator and

ask whether Singularity is installed and, if so, how to

access it.

Install Nextflow

Nextflow is a scripting language which runs computational pipelines. It handles running jobs and tracking progress. Most computing clusters should have this installed. If not, you will need to install it and make sure that it is in the PATH variable in your environment.

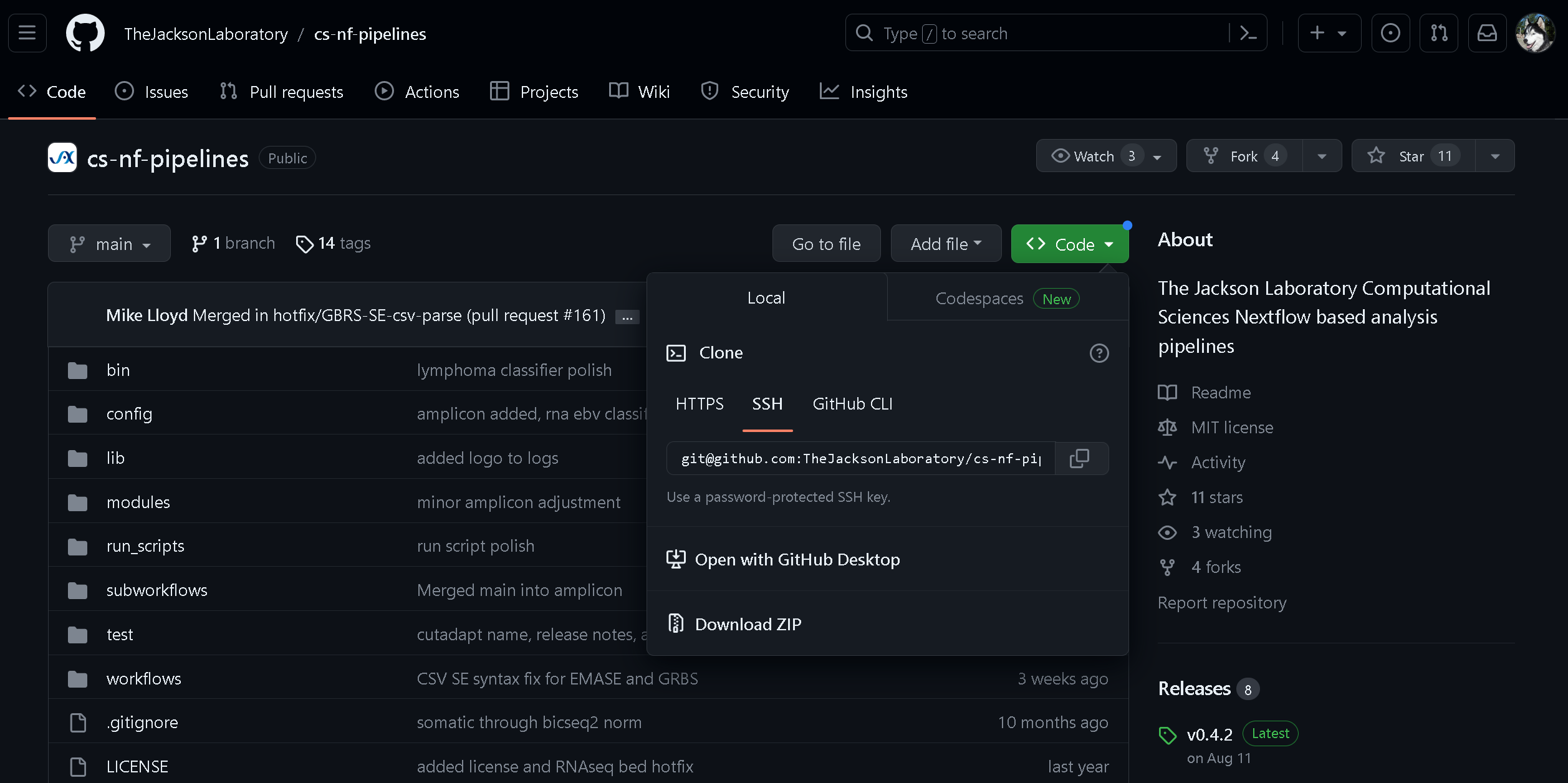

Nextflow Pipeline Repository

The Computational Sciences group at The Jackson Laboratory has

created a suite of next-generation sequencing tools using

nextflow. These are stored in a publicly available Github

repository.

Navigate to https://github.com/TheJacksonLaboratory/cs-nf-pipelines and click on the green “Code” button.

This will show a pop-over window with a code which you can copy to your clipboard. Change into a directory where you wish download the repository. Depending on how you clone Github repositories (https or ssh), your command may look something like this:

git clone https://github.com/TheJacksonLaboratory/cs-nf-pipelines.gitor this:

git clone git@github.com:TheJacksonLaboratory/cs-nf-pipelines.gitCluster Profile Configuration File

There are sample profile configuration files on Github. These files are for the clusters at The Jackson Laboratory. You will need to modify the values in this file to configure the settings for your cluster.

We provide some examples of blocks which you may need to edit.

singularity {

enabled = true

autoMounts = true

cacheDir = '/projects/omics_share/meta/containers'

}You should set the value of cacheDir to a directory in which you would like the pipeline to store software containers.

process {

executor = 'slurm'

queue = 'compute'

clusterOptions = {task.time < 72.h ? '-q batch' : '-q long'}

module = 'slurm'

}If your cluster does not use slurm, then you should edit

the ‘executor’ variable to be the one which you use. See the nextflow

executor documentation for more information.

executor {

name = 'slurm'

// The number of tasks the executor will handle in a parallel manner

queueSize = 150

submitRateLimit = '1 / 2 s'

// Determines the max rate of job submission per time unit, for example '10sec' eg. max 10 jobs per second or '1/2 s' i.e. 1 job submissions every 2 seconds.

}If your cluster does not use slurm, then you will need

to modify the block above.

Reference Data Files

The reference files for Diversity Outbred mice are stored on Zenodo. Create a directory in which you will store the reference files and change into it.

Callout

These reference files are on mouse genome build GRCm39 and use Ensembl 105 gene annotation.

You do not need all of the files. You will need the following files:

Bowtie transcript indices:

- bowtie.transcripts.1.ebwt

- bowtie.transcripts.2.ebwt

- bowtie.transcripts.3.ebwt

- bowtie.transcripts.4.ebwt

Reference genome FASTA file index:

GBRS needs the reference file index for internal bookkeeping. The reference genome is contained in the Bowtie indices above.

Gene and Transcript information:

- emase.fullTranscripts.info

- emase.gene2transcripts.tsv

- emase.pooled.fullTranscripts.info

- ref.gene_pos.ordered_ensBuild_105.npz

Marker grid on GRCm39:

GBRS uses a 74,165 pseudomarker grid with markers evenly distributed across the genome. This is the grid on which the results are reported. The motivation is that each tissue will express a different set of genes with different genomic positions and this grid allows for consistent reporting of results.

Emission probabilities:

Transition probabilities:

Colors to use when drawing founder haplotypes:

Once you have these file in place, navigate to the “Running GBRS” lesson.

Key Points

- GBRS requires support software and Diversity Outbred reference files to run.

- GBRS uses

nextflowto run the GBRS pipeline. - GBRS uses a container system, either

dockerorSingularity, to modularize required software versions. - Diversity Outbred (DO) reference files are needed to run GBRS on DO mice.

Content from Running GBRS

Last updated on 2024-09-09 | Edit this page

Estimated time: 120 minutes

Overview

Questions

- How do I run GBRS on my FASTQ files at JAX?

- How do I run GBRS on my FASTQ files at another institution?

Objectives

- At JAX: analyze FASTQ files using GBRS on the High Performance Computing cluster.

- At other institutions: configure Nexflow and the reference files and analyze FASTQ files using GBRS.

At the Jackson Laboratory (JAX)

The GBRS Nextflow pipeline is configured to run on sumner2, the High Performance Computing (HPC) cluster at JAX.

We will consider two scenarios:

- You are analyzing expression data from Diversity Outbred mice;

- You are analyzing expression data from some other cross;

GBRS for Diversity Outbred Mice

The Next-Generation Operations (NGSOps) team has configured the default arrguments for the GBRS Nextflow pipeline to use the reference files for GRCm39 and Ensembl version 105. This means that the arguments that point GBRS to the locations of the founder genomes, founder transcriptomes, and aligner indices are already set.

Here is the entire script:

#!/bin/bash

#SBATCH --qos=batch # job queue

#SBATCH --ntasks=1 # number of nodes

#SBATCH --cpus-per-task=1 # number of cores

#SBATCH --mem=1G # memory pool for all cores

#SBATCH --time=48:00:00 # wall clock time (D-HH:MM)

################################################################################

# Run GBRS on each sample. GRCm39. Ensembl 105.

#

# Daniel Gatti

# dan.gatti@jax.org

# 2022-09-28

################################################################################

set -e -u -x -o pipefail

##### VARIABLES #####

# Base directory for project.

BASE_DIR=/projects/research_lab_directory/diabetes

# Sample metadata file.

SAMPLE_META_FILE=${BASE_DIR}/data/gbrs_sample_metadata.csv

# Base /flashscratch directory.

FLASHSCRATCH=/flashscratch/dgatti

# Temporary working directory.

TMP_DIR=${FLASHSCRATCH}/tmp

# Temporary output directory.

OUT_DIR=${FLASHSCRATCH}/results

# Final results directory (in backed up space)

DEST_DIR=${BASE_DIR}/results

# GBRS path.

GBRS_GITHUB_PATH=TheJacksonLaboratory/cs-nf-pipelines

# GBRS version on Github.

GBRS_VERSION=v0.4.2

# Singularity cache directory.

#export NXF_SINGULARITY_CACHEDIR=${flashscratch}/singularity_cache

##### MAIN #####

mkdir -p ${OUT_DIR}

mkdir -p ${DEST_DIR}

mkdir -p ${TMP_DIR}

#mkdir -p ${NXF_SINGULARITY_CACHEDIR}

module load singularity

cd ${TMP_DIR}

nextflow run ${GBRS_GITHUB_PATH} \

-r ${GBRS_VERSION} \

-profile sumner \

-w ${TMP_DIR} \

--workflow gbrs \

--pubdir ${OUT_DIR} \

--csv_input ${SAMPLE_META_FILE} \

-resume

# Copy output files from OUT_DIR to DEST_DIR.

DIRS=`(ls ${OUT_DIR})`

for i in ${DIRS}

do

cp ${OUT_DIR}/${i}/stats/* ${DEST_DIR}

cp ${OUT_DIR}/${i}/gbrs/*_counts ${DEST_DIR}

cp ${OUT_DIR}/${i}/gbrs/*.tsv ${DEST_DIR}

cp ${OUT_DIR}/${i}/gbrs/*.pdf ${DEST_DIR}

done

# Clean up tmp directory.

rm -rf ${TMP_DIR}For Diversity Outbred (DO) mice, the reference files are stored in

the reference data directories /projects/omics_share on

sumner2.

JAX uses slurm (NOTE: This is an internal JAX link which requires a JAX login.) to manage computing jobs on sumner2. There are several good tutorials on using slurm on the JAX Research IT Documentation Library.

slurm Options Block

slurm scripts are bash scripts, so they start with:

#!/bin/bashNext, we use a slurm options block to tell slurm what computing resources we would like to use. You could pass slurm options in at the command line, but we recommend using an options block in your script so that you have a record of the resources required to run your job.

Each line in the slurm options block starts with:

#SBATCHThis tells slurm that what follows on this line is a slurm option. There are many slurm options, which are exhaustively enumerated in the slurm documentation. Here we will use only a few of the options:

#SBATCH --qos=batch # job queue

#SBATCH --ntasks=1 # number of nodes

#SBATCH --cpus-per-task=1 # number of cores

#SBATCH --mem=1G # memory pool for all cores

#SBATCH --time=48:00:00 # wall clock time (D-HH:MM)The first option specifies the job queue to use for this job. In this case, it is the “batch” queue on sumner2.

#SBATCH --qos=batch # job queueThe next two options specify the number of nodes and number of CPUs (or cores) per node to use. In this case, we will use one node and one CPU. This may seem confusing because we would like to use many nodes and cores to reduce the total run time of our job. But Nextflow handles resource request. This script just starts the Nextflow job, which does not require many resources. If you request more nodes and CPUs here, you will only be tying up resources that will sit idle while your job run. So one node and one CPU per node are sufficient.

#SBATCH --ntasks=1 # number of nodes

#SBATCH --cpus-per-task=1 # number of coresThe next option specifies the memory required for this task. We will request one Gigabyte (G). Again, this may seem like a small amount of memory for a large computational job, but this is only the memeory required to start and manage the Nextflow job. Nextflow will request the resources that it needs to run GBRS.

#SBATCH --mem=1G # memory pool for all coresThe last option that we will specify is the total wall-clock time needed to complete the job. This needs to be the total amount of time required to complete the job. This can be difficult to estimate and depends upon the number of samples, depth of coverage, and the configuration and utilization of the cluster.

#SBATCH --time=48:00:00 # wall clock time (D-HH:MM)Header Section

################################################################################

# Run GBRS on each sample. GRCm39. Ensembl 105.

#

# Daniel Gatti

# dan.gatti@jax.org

# 2022-09-28

################################################################################This section is not required, but it allows you to add some comments about what are doing. It is also a consistent place to put your name, contact information, and the date. Putting your name and contact information helps to keep you accountable for the code. Putting the date in the header helps you to remember when you wrote the script.

Error Handling

set -e -u -x -o pipefailThis commands tells bash to exit if any line of the script

fails (-e), to catch uninitialized variables (-u), to print commands as

we run (-x), and to

output the last exit code (-o).

Variable Section

We strongly recommend that you use bash variables to build file paths and specify arguments to the GBRS script. The reason for this is that it is easier to remember what each varible is when it is given a meaningful name.

Consider this script which runs the “analysis_tool_of_science”:

analysis_tool_of_science -t 8 \

-g /path/to/another/file \

-a /really/long/path/to/yet/another/file \

-i /path/to/mystery/file/number1 /path/to/mystery/file/number2 \

-x /path/to/some/directory/on/the/cluster

-o /path/to/another/directory/with/a/file/prefixYou can read the tool’s documentation to find out what each file is, but that requires extra time. This script would be easier to read if the paths had meaningful names. In the code block below, we give each file a meaningful variable name and provide a comment explaining what each file is. We also use this opportunity to specify the genome and annotation builds.

# FASTA file containing genome sequence for GRCm39.

GENOME_FASTA=/path/to/another/file

# GTF file containing genome annotation for Ensembl 105.

ANNOTATION_GTF=/really/long/path/to/yet/another/file

# Paths to the forward and reverse FASTQ files.

FASTQ_FILE1=/path/to/mystery/file/number1

FASTQ_FILE2=/path/to/mystery/file/number2

# Path to temporary directory for analysis_tool.

TEMP_DIR=/path/to/some/directory/on/the/cluster

# Path to output file, with a file prefix.

OUTPUT_PATH=/path/to/another/directory/with/a/file/prefix

analysis_tool_of_science -t 8 \

-g ${GENOME_FASTA} \

-a ${ANNOTATION_GTF} \

-i $(FASTQ_FILE1} ${FASTQ_FILE2} \

-x ${TEMP_DIR}

-o ${OUTPUT_PATH}This makes the script much easier for yourself (a year from now) and others to read and understand.

First, we will focus on variables that set the location of your files and working directories.

# Base directory for project.

BASE_DIR=/projects/research_lab_directory/diabetesWe like to set a “base directory”, which is the high-level directory for the project on which you are working. In this case, we are using a diabetes project as an example. We use the base directory to create other file paths which are below this directory.

# Sample metadata file.

SAMPLE_META_FILE=${BASE_DIR}/data/sodo_gbrs_metadata.csvThis is the path to the sample metadata file, which contains information about your samples. This includies the sample ID, sex, outbreeding generation, sequencer lane, and paths to the forward and reverse FASTQ files. Each row will contain information for one sample.

The sampleID column should contain values that you use

to identify the samples.

The sex column should contain the mouse’s sex recorded

as ‘F’ for females or ‘M’ for males.

The generation column should contain the outbreeding

generation for the mouse.

The lane column should contain the lane number on which

the sample was run. This can sometimes be found in the FASTQ file name.

In the example below, it is recorded as ‘L004’ in the FASTQ file names

and this is what we used in the lane column.

The fastq_1 and fastq_2 columns contain the

full path to the forward and reverse FASTQ files. In this case, we

copied the files to /flashscratch, which is the temporary sratch storage

space for large files.

Below is an example of the top of a sample metadata file. It is comma-delimited and has column names as the top.

sampleID,sex,generation,lane,fastq_1,fastq_2

D2,F,46,L004,/flashscratch/dgatti/fastq/D2_GT22-11729_CTAGGCAT-ACAGAGGT_S224_L004_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D2_GT22-11729_CTAGGCAT-ACAGAGGT_S224_L004_R2_001.fastq.gz

D3,F,46,L001,/flashscratch/dgatti/fastq/D3_GT22-11665_CGGCTAAT-CTCGTTCT_S21_L001_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D3_GT22-11665_CGGCTAAT-CTCGTTCT_S21_L001_R2_001.fastq.gz

D4,F,46,L001,/flashscratch/dgatti/fastq/D4_GT22-11670_TACGCTAC-CGTGTGAT_S65_L001_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D4_GT22-11670_TACGCTAC-CGTGTGAT_S65_L001_R2_001.fastq.gz

D5,F,46,L001,/flashscratch/dgatti/fastq/D5_GT22-11708_GAGCAGTA-TGAGCTGT_S57_L001_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D5_GT22-11708_GAGCAGTA-TGAGCTGT_S57_L001_R2_001.fastq.gzNotice that we have copied the FASTQ files over to /flashscratch. This isn’t required but we do this to simplify the file paths.

# Base /flashscratch directory.

FLASHSCRATCH=/flashscratch/usernameThis is the path to your directory on /flashscratch. You should create this before you begin your analysis. And you should replace ‘username’ with your username.

# Temporary directory.

TMP_DIR=${FLASHSCRATCH}/tmpThis is the path to the temporary directory where Nextflow and GBRS can store temporary working files during the analysis.

# Output directory.

OUT_DIR=${FLASHSCRATCH}/resultsThis is the directory where you would like GBRS to write your results. You should place this on /flashscratch and then copy the files that you need over to /projects when the analysis is complete.

# Final results directory (in backed up space)

DEST_DIR=${BASE_DIR}/results/gbrsThis is the directory to which you will copy your final results. It should be a location that is backed up (like /projects). Remember, /flashscratch is not backed up and your files will be deleted without notice after 10 days.

Once you have set all of the paths and created your sample metadata file, you can submit the script to the slurm queue. Save the script to a file called ‘run_gbrs.sh’, and then submit it using ‘sbatch’.

sbatch run_gbrs.shGBRS for Other Mouse Crosses

For Diversity Outbred (DO) mice, the reference files are stored in

the reference data directories /projects/omics_share on

sumner2. We will use these files as examples ot show you which

arguments to provide.

JAX uses slurm (NOTE: This is an internal JAX link which requires a JAX login.) to manage computing jobs on sumner2. There are several good tutorials on using slurm on the JAX Research IT Documentation Library.

slurm Options Block

slurm scripts are bash scripts, so they start with:

#!/bin/bashNext, we use a slurm options block to tell slurm what computing resources we would like to use. You could pass slurm options in at the command line, but we recommend using an options block in your script so that you have a record of the resources required to run your job.

Each line in the slurm options block starts with:

#SBATCHThis tells slurm that what follows on this line is a slurm option. There are many slurm options, which are exhaustively enumerated in the slurm documentation. Here we will use only a few of the options:

#SBATCH --qos=batch # job queue

#SBATCH --ntasks=1 # number of nodes

#SBATCH --cpus-per-task=1 # number of cores

#SBATCH --mem=1G # memory pool for all cores

#SBATCH --time=48:00:00 # wall clock time (D-HH:MM)The first option specifies the job queue to use for this job. In this case, it is the “batch” queue on sumner2.

#SBATCH --qos=batch # job queueThe next two options specify the number of nodes and number of CPUs (or cores) per node to use. In this case, we will use one node and one CPU. This may seem confusing because we would like to use many nodes and cores to reduce the total run time of our job. But Nextflow handles resource request. This script just starts the Nextflow job, which does not require many resources. If you request more nodes and CPUs here, you will only be tying up resources that will sit idle while your job run. So one node and one CPU per node are sufficient.

#SBATCH --ntasks=1 # number of nodes

#SBATCH --cpus-per-task=1 # number of coresThe next option specifies the memory required for this task. We will request one Gigabyte (G). Again, this may seem like a small amount of memory for a large computational job, but this is only the memeory required to start and manage the Nextflow job. Nextflow will request the resources that it needs to run GBRS.

#SBATCH --mem=1G # memory pool for all coresThe last option that we will specify is the total wall-clock time needed to complete the job. This needs to be the total amount of time required to complete the job. This can be difficult to estimate and depends upon the number of samples, depth of coverage, and the configuration and utilization of the cluster.

#SBATCH --time=48:00:00 # wall clock time (D-HH:MM)Header Section

This section is not required, but it allows you to add some comments about what are doing. It is also a consistent place to put your name, contact information, and the date. Putting your name and contact information helps to keep you accountable for the code. Putting the date in the header helps you to remember when you wrote the script.

################################################################################

# Run GBRS on each sample.

# GRCm39. Ensembl 105.

#

# Daniel Gatti

# dan.gatti@jax.org

# 2022-09-28

################################################################################Variable Section

We strongly recommend that you use bash variables to build file paths and specify arguments to the GBRS script. The reason for this is that it is easier to remember what each varible is when it is given a meaningful name.

Consider this script which runs the “analysis_tool_of_science”:

analysis_tool_of_science -t 8 \

-g /path/to/another/file \

-a /really/long/path/to/yet/another/file \

-i /path/to/mystery/file/number1 /path/to/mystery/file/number2 \

-x /path/to/some/directory/on/the/cluster

-o /path/to/another/directory/with/a/file/prefixYou can read the tool’s documentation to find out what each file is, but that requires extra time. It would be easier to read if the paths had meaningful names. In the code block below, we give each file a meaningful variable name and prrovide a comment explaining what each file is. We also use this opportunity to specify the genome and annotation builds.

# FASTA file containing genome sequence for GRCm39.

GENOME_FASTA=/path/to/another/file

# GTF file containing genome annotation for Ensembl 105.

ANNOTATION_GTF=/really/long/path/to/yet/another/file

# Paths to the forward and reverse FASTQ files.

FASTQ_FILE1=/path/to/mystery/file/number1

FASTQ_FILE2=/path/to/mystery/file/number2

# Path to temporary directory for analysis_tool.

TEMP_DIR=/path/to/some/directory/on/the/cluster

# Path to output file, with a file prefix.

OUTPUT_PATH=/path/to/another/directory/with/a/file/prefix

analysis_tool_of_science -t 8 \

-g ${GENOME_FASTA} \

-a ${ANNOTATION_GTF} \

-i $(FASTQ_FILE1} ${FASTQ_FILE2} \

-x ${TEMP_DIR}

-o ${OUTPUT_PATH}This makes the script much easier for yourself (a year from now) and others to read and understand.

The GBRS script has a lot of variables. We will explain each one so that you know what to use for your samples.

In this first section, we will focus on variables that set the location of your files and working directories.

# Base directory for project.

BASE_DIR=/projects/research_lab_directory/diabetesWe like to set a “base directory”, which is the high-level directory for the project which you are working on. In this case, we are using a diabetes project as an example. We use the base directory to create other file paths which are below this directory.

# Sample metadata file.

SAMPLE_META_FILE=${BASE_DIR}/data/sodo_gbrs_metadata.csvThis is the path to the sample metadata file. This file contains information about your samples, including the sample ID, sex, outbreeding generation, sequencer lane, and paths to the forward and reverse FASTQ files. Each row will contain information for one sample.

The sampleID should contain values that you use to

identify the samples.

The sex column should contain the mouse’s sex recorded

as ‘F’ for females or ‘M’ for males.

The generation column should contain the outbreeding

generation for the mouse.

The lane column should contain the lane number on which

the sample was run. This can sometimes be found in the FASTQ file name.

In the example below, it is recorded as ‘L004’ in the FASTQ file names

and this is what we used in the lane column.

The fastq_1 and fastq_2 columns contain the

full path to the forward and reverse FASTQ files. In this case, we

copied the files to /flashscratch, which is the temporary scratch

storage space for large files.

sampleID,sex,generation,lane,fastq_1,fastq_2

D2,F,46,L004,/flashscratch/dgatti/fastq/D2_GT22-11729_CTAGGCAT-ACAGAGGT_S224_L004_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D2_GT22-11729_CTAGGCAT-ACAGAGGT_S224_L004_R2_001.fastq.gz

D3,F,46,L001,/flashscratch/dgatti/fastq/D3_GT22-11665_CGGCTAAT-CTCGTTCT_S21_L001_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D3_GT22-11665_CGGCTAAT-CTCGTTCT_S21_L001_R2_001.fastq.gz

D4,F,46,L001,/flashscratch/dgatti/fastq/D4_GT22-11670_TACGCTAC-CGTGTGAT_S65_L001_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D4_GT22-11670_TACGCTAC-CGTGTGAT_S65_L001_R2_001.fastq.gz

D5,F,46,L001,/flashscratch/dgatti/fastq/D5_GT22-11708_GAGCAGTA-TGAGCTGT_S57_L001_R1_001.fastq.gz,/flashscratch/dgatti/fastq/D5_GT22-11708_GAGCAGTA-TGAGCTGT_S57_L001_R2_001.fastq.gz# Base /flashscratch directory.

FLASHSCRATCH=/flashscratch/usernameThis is the path to your directory on /flashscratch. You should create this before you begin your analysis.

# Temporary directory.

TMP_DIR=${FLASHSCRATCH}/tmpThis is the path to the temporary directory where Nextflow and GBRS can store temporary working files during the analysis.

# Output directory.

OUT_DIR=${FLASHSCRATCH}/resultsThis is the directory where you would like GBRS to write your results. You should place this on /flashscratch and then copy the files that you need over to /projects when the analysis is complete.

# Final results directory (in backed up space)

DEST_DIR=${BASE_DIR}/results/gbrsThis is the directory to which you will copy your final results. It should be a location that is backed up (like /projects). Remember, /flashscratch is not backed up and your files will be deleted without notice after 10 days.

In the next section, we will focus on the reference files that are required by GBRS.

# GBRS Reference File Directory

GBRS_REF_DIR=/projects/omics_share/mouse/GRCm39/supporting_files/emase_gbrs/rel_2112_v8This is the directory where the GBRS reference files are stored. These are a series of files which contain information about genes and transcripts in the eight DO founder strains. The numbers in the directory refere to the Sanger Mouse Genomes Project release. “2112” refers to the 12th month of 2021. “v8” refers to version 8 of their genetic variant release.

There are eight arguments that point to the reference files used by GBRS.

# GBRS Transcripts.

GBRS_TRANSCRIPTS=${GBRS_REF_DIR}/emase.fullTranscripts.infoThis file contains the list of transcripts in Ensembl 105 that GBRS will quantify.

# GBRS/EMASE gene to transcript file.

GBRS_GENE2TRANS=${GBRS_REF_DIR}/emase.gene2transcripts.tsvThis file contains the list of transcripts which map to each gene. Each row contains one gene, followed by its associated Ensembl transcripts.

# GBRS Full Transcript.

GBRS_FULL_TRANSCRIPTS=${GBRS_REF_DIR}/emase.pooled.fullTranscripts.infoThis file contains a list of transcript lengths in each of the eight DO founders.

# GBRS Emission Probs.

GBRS_EMIS_PROBS=${GBRS_REF_DIR}/gbrs_emissions_all_tissues.avecs.npzThis file contains the allele emission probabilities for the hidden Markov model. These are used at each gene to estimate the probability that a given allele is obsered, given that the model is in a certain genotype state.

# GBRS Transmission Probs.

GBRS_TRANS_PROBS=${GBRS_REF_DIR}/transition_probabilitiesThis directory contains the transmission probabilities for the hidden Markov model. There are many files in this directory, one for each DO outbreeding generation. Each file contains the probability of changing genotype state between each gene at a given DO outbreeding generation.

# Ensembl 105 gene positions.

ENSEMBL_105=${GBRS_REF_DIR}/ref.gene_pos.ordered_ensBuild_105.npzThis file contains the Ensembl 105 gene positions in a python format.

# GBRS 69K Marker Grid.

MARKER_GRID=${GBRS_REF_DIR}/ref.genome_grid.GRCm39.tsvThis file contains the pseudomarker genome grid which is used to report results. Each tissue expresses different gene, which leads GBRS to estimate genotypes at different genomic positions in each tissue. In order to standardize results, GBRS interpolates its founder haplotype estimates to a common grid of 74,165 positions.

# Bowtie index for GBRS.

BOWTIE_INDEX=/projects/compsci/omics_share/mouse/GRCm39/transcriptome/indices/imputed/rel_2112_v8/bowtie/bowtie.transcriptsThis is the path to the bowtie index files. There are multiple files and this variable should contain the directory and the file prefix for the index files. In this case, the file prefix is “bowtie.transcripts” and the files are named “bowtie.transcripts.1.ewbt”, “bowtie.transcripts.2.ewbt”, etc.

# GBRS path.

GBRS_GITHUB_PATH=TheJacksonLaboratory/cs-nf-pipelinesThis is the Github path to the Computational Science

nextflow pipelines.

# Singularity cache directory.

export NXF_SINGULARITY_CACHEDIR=${flashscratch}/singularity_cacheThis is the cache directory where Singularity will store containers which it downloads during the analysis run.

Entire GBRS Script

Here is the whole script in one piece.

#!/bin/bash

#SBATCH --qos=batch # job queue

#SBATCH --ntasks=1 # number of nodes

#SBATCH --cpus-per-task=1 # number of cores

#SBATCH --mem=1G # memory pool for all cores

#SBATCH --time=48:00:00 # wall clock time (D-HH:MM)

################################################################################

# Run GBRS on each sample.

# GRCm39. Ensembl 105.

#

# Daniel Gatti

# dan.gatti@jax.org

# 2022-09-28

################################################################################

##### VARIABLES #####

# Base directory for project.

BASE_DIR=/projects/bolcun-filas-lab/DO_Superovulation

# Sample metadata file.

SAMPLE_META_FILE=${BASE_DIR}/gbrs_sample_metadata.csv

# Base /flashscratch directory.

FLASHSCRATCH=/flashscratch/dgatti

# Temporary directory.

TMP_DIR=${FLASHSCRATCH}/tmp

# Output directory.

OUT_DIR=${FLASHSCRATCH}/results

# Final results directory (in backed up space)

DEST_DIR=${BASE_DIR}/results/gbrs/grcm39

# GBRS Reference File Directory

GBRS_REF_DIR=/projects/churchill-lab/projects/GBRS_GRCm39

# GBRS Transcripts.

GBRS_TRANSCRIPTS=${GBRS_REF_DIR}/emase.fullTranscripts.info

# GBRS/EMASE gene to transcript file.

GBRS_GENE2TRANS=${GBRS_REF_DIR}/emase.gene2transcripts.tsv

# GBRS Full Transcript.

GBRS_FULL_TRANSCRIPTS=${GBRS_REF_DIR}/emase.pooled.fullTranscripts.info

# GBRS Emission Probs.

GBRS_EMIS_PROBS=${GBRS_REF_DIR}/gbrs_emissions_all_tissues.avecs.npz

# GBRS Transmission Probs.

GBRS_TRANS_PROBS=${GBRS_REF_DIR}/transition_probabilities

# Ensembl 105 gene positions.

ENSEMBL_105=${GBRS_REF_DIR}/ref.gene_pos.ordered_ensBuild_105.npz

# GBRS 69K Marker Grid.

MARKER_GRID=${GBRS_REF_DIR}/ref.genome_grid.GRCm39.tsv

# Bowtie index for GBRS.

BOWTIE_INDEX=/projects/compsci/omics_share/mouse/GRCm39/transcriptome/indices/imputed/rel_2112_v8/bowtie/bowtie.transcripts

# GBRS path.

GBRS_GITHUB_PATH=TheJacksonLaboratory/cs-nf-pipelines

# Singularity cache directory.

export NXF_SINGULARITY_CACHEDIR=${flashscratch}/singularity_cache

##### MAIN #####

mkdir -p ${OUT_DIR}

mkdir -p ${DEST_DIR}

mkdir -p ${TMP_DIR}

mkdir -p ${NXF_SINGULARITY_CACHEDIR}

module load singularity

cd ${TMP_DIR}

nextflow run ${GBRS_GITHUB_PATH} \

-profile sumner \

--workflow gbrs \

--pubdir ${OUT_DIR} \

-w ${TMP_DIR} \

--bowtie_index ${BOWTIE_INDEX} \

--csv_input ${SAMPLE_META_FILE} \

--transcripts_info ${GBRS_TRANSCRIPTS} \

--gene2transcript_csv ${GBRS_GENE2TRANS} \

--full_transcript_info ${GBRS_FULL_TRANSCRIPTS} \

--emission_prob_avecs ${GBRS_EMIS_PROBS} \

--trans_prob_dir ${GBRS_TRANS_PROBS} \

--gene_position_file ${ENSEMBL_105} \

--genotype_grid ${MARKER_GRID} \

-resume

# Copy output files from OUT_DIR to DEST_DIR.

DIRS=`ls ${OUT_DIR}`

for i in ${DIRS}

do

CURR_DEST_DIR=${DEST_DIR}/$i

mkdir -p ${CURR_DEST_DIR}

cp ${OUT_DIR}/${i}/stats/* ${CURR_DEST_DIR}

cp ${OUT_DIR}/${i}/gbrs/*_counts ${CURR_DEST_DIR}

cp ${OUT_DIR}/${i}/gbrs/*.tsv ${CURR_DEST_DIR}

cp ${OUT_DIR}/${i}/gbrs/*.pdf ${CURR_DEST_DIR}

doneGBRS Output

GBRS will create one output directory per sample. Each directory will

be named with the sample ID and will contain NNN files.

In the table below, we denote the sample ID as

Each sample directory will contain three directories:

- emase

- gbrs

- stats

We will look at the contents of each directory one at a time.

emase Directory

<SAMPLE>.merged.compressed.emase.h5

<SAMPLE>.multiway.genes.alignment_counts

<SAMPLE>.multiway.genes.expected_read_counts

<SAMPLE>.multiway.genes.tpm

<SAMPLE>.multiway.isoforms.alignment_counts

<SAMPLE>.multiway.isoforms.expected_read_counts

<SAMPLE>.multiway.isoforms.tpmgbrs Directory

<SAMPLE>.merged.compressed.emase.h5

<SAMPLE>.multiway.genes.alignment_counts

<SAMPLE>.multiway.genes.expected_read_counts

<SAMPLE>.multiway.genes.tpm

<SAMPLE>.multiway.isoforms.alignment_counts

<SAMPLE>.multiway.isoforms.expected_read_counts

<SAMPLE>.multiway.isoforms.tpm

<SAMPLE>.diploid.genes.alignment_counts

<SAMPLE>.diploid.genes.expected_read_counts

<SAMPLE>.diploid.genes.tpm

<SAMPLE>.diploid.isoforms.alignment_counts

<SAMPLE>.diploid.isoforms.expected_read_counts

<SAMPLE>.diploid.isoforms.tpm

<SAMPLE>.gbrs.interpolated.genoprobs.npz

<SAMPLE>.gbrs.interpolated.genoprobs.tsv

<SAMPLE>.gbrs.plotted.genome.pdf

<SAMPLE>.genotypes.tsvstats Directory

<SAMPLE>.bowtie_R1.log

<SAMPLE>.bowtie_R2.logThese are the alignment log files generated by the Bowtie 1 aligner. Each file contains alignment statistics, such as the number of reads processed and the number of alignments. An example file is shown below:

# reads processed: 41617615

# reads with at least one alignment: 35083649 (84.30%)

# reads that failed to align: 6533966 (15.70%)

Reported 415621849 alignmentsIn this case, there were a total of 41,617,615 reads. The corresponds to the number of reads in the input FASTQ file. 35,083,649 (84.30% of the total reads) aligned to at least one location in the genome. Note that some of these may have aligned to multiple locations. 6,533,966 (15.70% of the total reads) did not align to the genome with sufficient accuracy to use. The total number of alignments was 415,621,849, which is an order or magnitude higher than the number of reads. This is because each read may align to the genome more than once.

| File Name | File Format | Description |

|---|---|---|

| Text | Bowtie 1 alignment log file for read 1 | |

| Text | Bowtie 1 alignment log file for read 2 | |

| Tab-delimited | Founder allele-specific gene alignment counts | |

| Tab-delimited | Founder allele-specific expected gene counts | |

| Tab-delimited | Founder allele-specific transcript isoform alignment counts | |

| Tab-delimited | Founder allele-specific expected transcript isoform counts | |

| Tab-delimited | Estimated founder allele probabilities | |

| Karyogram of estimated founder allele probabilities | ||

| Tab-delimited | Founder diplotype calls at each gene | |

| Binary alignment map |

Below, we review each output file in greater detail.

Founder Allele-specific Gene Alignment Counts

This file contains the counts for each of the eight DO founder alleles for each gene. There is one row per gene and 18 columns. An example of the top of one file is shown below.

locus aln_A aln_B aln_C aln_D aln_E aln_F aln_G aln_H uniq_A uniq_B uniq_C uniq_D uniq_E uniq_F uniq_G uniq_H locus_uniq

ENSMUSG00000000001 12124.0 12124.0 12124.0 12124.0 5066.0 9349.0 5751.0 12124.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 12126.0

ENSMUSG00000000003 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0

ENSMUSG00000000028 721.0 721.0 721.0 721.0 721.0 676.0 713.0 602.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 721.0

ENSMUSG00000000031 464.0 464.0 464.0 464.0 464.0 298.0 241.0 464.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 464.0

ENSMUSG00000000037 135.0 135.0 135.0 133.0 135.0 95.0 94.0 115.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 137.0

ENSMUSG00000000049 42.0 42.0 42.0 42.0 42.0 20.0 32.0 39.0 0.0 0.0 0.0 0.0 0.0 16.0 0.0 0.0 58.0

ENSMUSG00000000056 1453.0 1453.0 1324.0 1453.0 1453.0 844.0 776.0 1454.0 0.0 0.0 0.0 0.0 0.0 0.0 4.0 39.0 1506.0

ENSMUSG00000000058 1750.0 1972.0 1802.0 1972.0 1750.0 1055.0 674.0 252.0 0.0 0.0 0.0 0.0 0.0 2.0 0.0 0.0 1977.0

ENSMUSG00000000078 4255.0 4255.0 4255.0 4255.0 3951.0 4093.0 3189.0 3398.0 0.0 0.0 0.0 0.0 633.0 0.0 9.0 12.0 5134.0For each gene, the number of gene counts for each founder allele is shown in the columns which start with “aln”. The “aln” columns contains the estimated gene counts for reads which aligned to each of the 8 founder transcriptomes. The columns which begin wit “uniq” contains gene counts for reads which aligned uniquely to one of the 8 founder transcriptomes. The “locus_uniq” column contains ????.

<SAMPLE>.diploid.genes.expected_read_countsKey Points

- GBRS produces several output file for each sample.

Content from Preparing GBRS Pseudo-References

Last updated on 2024-09-06 | Edit this page

Estimated time: 75 minutes

Overview

Questions

- Why do we need to align to a non-reference genome?

- What is a pseudo-reference genome?

- How do we create a pseudo-reference genome?

Objectives

- Construct a pseudo-reference genome for Diversity Outbred mice.

Introduction

Genome-to-Genome Tools, (g2gtools) is a suite of tools which incorporates SNPs and indels from mouse strains into the reference genome. Inserting these variants may lead to changes in the length of the genome and g2gtools is able to map positions between the reference genome and the modified genome.

In this tutorial, we will show you how to use the pre-built

nextflow pipeline to build a pseudo-reference on the JAX

computing cluster (sumner2). Then, we will show you how each step work.

We expect that most users will use the nextflow pipeline

and have included the step-by-step pipeline for advanced users who may

want to use non-standard pipeline options.

Creating a Pseudo-Reference for Diversity Outbred Mice

The nextflow pipeline developed by Computational

Sciences contains default values to make pseudo-reference generation for

Diversity Outbred mice easy. You can run the commands with minimal

arguments.

There is a Quick Start Guide for JAX users at https://github.com/TheJacksonLaboratory/cs-nf-pipelines/wiki/Pipeline-Documentation#quick-start-for-jax-users.

This describes the essentials of running a nextflow

pipeline on sumner2 at JAX.

The documentation for the GBRS nextflow pipeline is at

https://github.com/TheJacksonLaboratory/cs-nf-pipelines/wiki/Generate-Pseudoreference-Pipeline-ReadMe.

This page lists the default values, which point reference files on

sumner2 and list the eight Diversity Outbred founder strains by default.

Some of the default files are listed in the table below.

| File Type | Argument Name | File Path | Description |

|---|---|---|---|

| Reference FASTA | –primary_reference_fasta | /projects/compsci/omics_share/mouse/GRCm39/genome/sequence/ensembl/v105/Mus_musculus.GRCm39.dna.primary_assembly.fa | primary reference fasta file for GRCm39 |

| SNP VCF | –snp_vcf | /projects/compsci/omics_share/mouse/GRCm39/genome/annotation/snps_indels/rel_2112_v8/mgp_REL2021_snps.vcf.gz | VCF containing SNPs in mouse strains from the Mouse Genomes Project version 8 |

| Indel VCF | –indel_vcf | /projects/compsci/omics_share/mouse/GRCm39/genome/annotation/snps_indels/rel_2112_v8/mgp_REL2021_indels.vcf.gz | VCF containing Indels in mouse strains from the Mouse Genomes Project version 8 |

| Reference GTF | –primary_reference_gtf | /projects/compsci/omics_share/mouse/GRCm39/transcriptome/annotation/ensembl/v105/Mus_musculus.GRCm39.105.gtf | Gene annotation for Ensembl 105 in GTF |

The default nextflow pipeline also include the DO

founder strains as a comma separated list:

| Argument | Value |

|---|---|

| –strain | 129S1_SvImJ,A_J,CAST_EiJ,NOD_ShiLtJ,NZO_HlLtJ,PWK_PhJ,WSB_EiJ |

To run the nextflow pipeline to generate a

pseudo-reference for DO mice on sumner2, use the following commands.

First, we load the nextflow module.

module use --append /projects/omics_share/meta/modules

module load nextflowNext, change into a directory where you will be working. We suggest using /flashscratch and creating a directory for this.

Create a variable for your user ID on sumner2:

USERID=<your User ID>cd /flashscratch/${USERID}Then use git to clone the cs-nf-pipelines

repository.

git clone https://github.com/TheJacksonLaboratory/cs-nf-pipelines.gitNext, we need to create a variable for the output directory name and create the directory.

OUTPUT_DIR=/flashscratch/${USERID}/gbrs

mkdir -p ${OUTPUT_DIR}The last step before running the pipeline is to create a temporary working directory. This should be on /flashscratch since many large files are created.

WORKING_DIR=/flashscratch/${USERID}/tmp

mkdir -p ${WORKING_DIR}There are two types of arguments in the nextflow call

below. Arguments to nextflow have a single ‘-’. These

are:

| Argument | Value | Description |

|---|---|---|

| -profile | sumner2 | This sets the resource values for the JAX sumner2 computing cluster |

| -w | ${WORKING_DIR} | The working directory for temporary files |

Arguments which start with ‘–’ go to the nextflow

pipeline. These are:

| Argument | Value | Description |

|---|---|---|

| –workflow | generate_pseudoreference | This tells nextflow which workflow to run |

| –pubdir | ${OUTPUT_DIR} | The directory where output should be written |

With this preparation, we can run the nextflow

pipeline.

nextflow cs-nf-pipelines/main.nf \

-profile sumner2 \

-w ${WORKING_DIR} \

--workflow generate_pseudoreference \

--pubdir ${OUTPUT_DIR}The entire process should take around 30 minutes. It should produce the following output on the terminal:

OUTPUT

N E X T F L O W ~ version 22.04.3

Launching `cs-nf-pipelines/main.nf` [gloomy_panini] DSL2 - revision: 6b82c2c015

_____ _______ _ _ _______ _______ __ _ ______ _______ _____ _____ _______

| |_____| \___/ ___ | |______ ___ | \ | | ____ |______ ___ | | |_____| |______

__| | | _/ \_ |_____ ______| | \_| |_____| ______| |_____| | ______|

GENERATE PSEUDOREFERENCE PARAMETER LOG

--comment:

Results Published to: /flashscratch/dgatti/gbrs

______________________________________________________

--workflow generate_pseudoreference

-w /flashscratch/dgatti/tmp

-c config/generate_pseudoreference.config

--snp_vcf /projects/compsci/omics_share/mouse/GRCm39/genome/annotation/snps_indels/rel_2112_v8/mgp_REL2021_snps.vcf.gz

--indel_vcf /projects/compsci/omics_share/mouse/GRCm39/genome/annotation/snps_indels/rel_2112_v8/mgp_REL2021_indels.vcf.gz

--primary_reference_fasta /projects/compsci/omics_share/mouse/GRCm39/genome/sequence/ensembl/v105/Mus_musculus.GRCm39.dna.primary_assembly.fa

--primary_reference_gtf /projects/compsci/omics_share/mouse/GRCm39/transcriptome/annotation/ensembl/v105/Mus_musculus.GRCm39.105.gtf

--gtf_biotype_include protein_coding,lncRNA,IG_C_gene,IG_D_gene,IG_J_gene,IG_LV_gene,IG_V_gene,TR_C_gene,TR_D_gene,TR_J_gene,TR_V_gene

--strain 129S1_SvImJ,A_J,CAST_EiJ,NOD_ShiLtJ,NZO_HlLtJ,PWK_PhJ,WSB_EiJ

--genome_version 39

--append_chromosomes true

--diploid false

--keep_fails false

--pass_only false

--quality_filter

--region

--bed

--keep_intermediate false

Project Directory: /gpfs/ctgs0/flashscratch/dgatti/nextflow/cs-nf-pipelines

Command line call:

nextflow cs-nf-pipelines/main.nf -profile sumner2 -w /flashscratch/dgatti/tmp --workflow generate_pseudoreference --pubdir /flashscratch/dgatti/gbrs

______________________________________________________

executor > slurm (72)

[9b/2185dd] process > GENERATE_PSEUDOREFERENCE:FILTER_GTF (Filtering GTF) [100%] 1 of 1 ✔

[86/d616d1] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_VCF2VCI (WSB_EiJ) [100%] 7 of 7 ✔

[4d/bce7df] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_PATCH (WSB_EiJ) [100%] 7 of 7 ✔

[5c/6c9c51] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_TRANSFORM (WSB_EiJ) [100%] 7 of 7 ✔

[97/91a924] process > GENERATE_PSEUDOREFERENCE:SAMTOOLS_FAIDX_G2GTOOLS (W... [100%] 7 of 7 ✔

[22/380cb8] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_CONVERT (CAST_EiJ) [100%] 7 of 7 ✔

[6f/5a91f3] process > GENERATE_PSEUDOREFERENCE:APPEND_DROPPED_CHROMS (CAS... [100%] 7 of 7 ✔

[fa/69434d] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_GTF2DB (CAST_EiJ) [100%] 7 of 7 ✔

[42/d32cda] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_EXTRACT_GENES (CA... [100%] 7 of 7 ✔

[c5/15243b] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_EXTRACT_TRANSCRIP... [100%] 7 of 7 ✔

[67/e947ad] process > GENERATE_PSEUDOREFERENCE:G2GTOOLS_EXTRACT_EXONS (CA... [100%] 7 of 7 ✔

[54/203383] process > GENERATE_PSEUDOREFERENCE:SAMTOOLS_FAIDX (primary_st... [100%] 1 of 1 ✔

Completed at: 14-Sep-2023 14:18:58

Duration : 23m 40s

CPU hours : 14.7

Succeeded : 72Let’s look at the files in the output directory.

ls ${OUTPUT_DIR}You should see the following files and directories:

OUTPUT

g2gtools generate_pseudoreference_report.html Mus_musculus.GRCm39.105.filtered.gtf traceLook for the reference files in the ‘g2gtools’ directory.

ls ${OUTPUT_DIR}/g2gtoolsOUTPUT

129S1_SvImJ.39_DroppedChromAppended.gtf NOD_ShiLtJ.39.genes.fa

129S1_SvImJ.39.exons.fa NOD_ShiLtJ.39.gtf.db

129S1_SvImJ.39.fa NOD_ShiLtJ.39.transcripts.fa

129S1_SvImJ.39.fa.fai NOD_ShiLtJ.39.vci.gz

129S1_SvImJ.39.genes.fa NOD_ShiLtJ.39.vci.gz.tbi

129S1_SvImJ.39.gtf.db NZO_HlLtJ.39_DroppedChromAppended.gtf

129S1_SvImJ.39.transcripts.fa NZO_HlLtJ.39.exons.fa

129S1_SvImJ.39.vci.gz NZO_HlLtJ.39.fa

129S1_SvImJ.39.vci.gz.tbi NZO_HlLtJ.39.fa.fai

A_J.39_DroppedChromAppended.gtf NZO_HlLtJ.39.genes.fa

A_J.39.exons.fa NZO_HlLtJ.39.gtf.db

A_J.39.fa NZO_HlLtJ.39.transcripts.fa

A_J.39.fa.fai NZO_HlLtJ.39.vci.gz

A_J.39.genes.fa NZO_HlLtJ.39.vci.gz.tbi

A_J.39.gtf.db PWK_PhJ.39_DroppedChromAppended.gtf

A_J.39.transcripts.fa PWK_PhJ.39.exons.fa

A_J.39.vci.gz PWK_PhJ.39.fa

A_J.39.vci.gz.tbi PWK_PhJ.39.fa.fai

CAST_EiJ.39_DroppedChromAppended.gtf PWK_PhJ.39.genes.fa

CAST_EiJ.39.exons.fa PWK_PhJ.39.gtf.db

CAST_EiJ.39.fa PWK_PhJ.39.transcripts.fa

CAST_EiJ.39.fa.fai PWK_PhJ.39.vci.gz

CAST_EiJ.39.genes.fa PWK_PhJ.39.vci.gz.tbi

CAST_EiJ.39.gtf.db WSB_EiJ.39_DroppedChromAppended.gtf

CAST_EiJ.39.transcripts.fa WSB_EiJ.39.exons.fa

CAST_EiJ.39.vci.gz WSB_EiJ.39.fa

CAST_EiJ.39.vci.gz.tbi WSB_EiJ.39.fa.fai

Mus_musculus.GRCm39.dna.primary_assembly.fa.fai WSB_EiJ.39.genes.fa

NOD_ShiLtJ.39_DroppedChromAppended.gtf WSB_EiJ.39.gtf.db

NOD_ShiLtJ.39.exons.fa WSB_EiJ.39.transcripts.fa

NOD_ShiLtJ.39.fa WSB_EiJ.39.vci.gz

NOD_ShiLtJ.39.fa.fai WSB_EiJ.39.vci.gz.tbiCreating Pseudo-Reference Genomes and Transcriptomes for Other Strains

If you are working with a cross comprised of other strains, you can change the “–strain” argument.

Challenge 1: Viewing Strain Names in a VCF

There are 50 strains in the VCF SNP file. What are their names? The

strain names are stored in the VCF header. The samtools

container also includes a tool called tabix, which queries

and indexes tab-delimited files. Run the samtools container

and look at the tabix help page. Find a command that will print out the

SNP VCF header and find the strain names.

singularity run ${SAMTOOLS} tabix --helpCall tabix with the ‘–only-header’ option and pass in

the SNP VCF. In this course, we are using long option names. You could

also use ‘-H’ to achieve the same result.

$ singularity run ${SAMTOOLS} tabix --only-header ${VCF_SNPS}OUTPUT

##fileformat=VCFv4.2

##FILTER=<ID=PASS,Description="All filters passed">

##bcftoolsVersion=1.13+htslib-1.13

##bcftoolsCommand=mpileup -f Mus_musculus.GRCm39.dna.toplevel.fa.gz -b samples -g 10 -a FORMAT/DP,FORMAT/AD,FORMAT/ADF,FORMAT/ADR,FORMAT/SP,INFO/AD -E -Q 0 -pm 3 -F 0.25 -d 500

##reference=Mus_musculus.GRCm39.dna.toplevel.fa.gz

##contig=<ID=1,length=195154279>

##contig=<ID=2,length=181755017>

##contig=<ID=3,length=159745316>

##contig=<ID=4,length=156860686>

##contig=<ID=5,length=151758149>

##contig=<ID=6,length=149588044>

##contig=<ID=7,length=144995196>

##contig=<ID=8,length=130127694>

##contig=<ID=9,length=124359700>

##contig=<ID=10,length=130530862>

##contig=<ID=11,length=121973369>

##contig=<ID=12,length=120092757>

##contig=<ID=13,length=120883175>

##contig=<ID=14,length=125139656>

##contig=<ID=15,length=104073951>

##contig=<ID=16,length=98008968>

##contig=<ID=17,length=95294699>

##contig=<ID=18,length=90720763>

##contig=<ID=19,length=61420004>

##contig=<ID=X,length=169476592>

##ALT=<ID=*,Description="Represents allele(s) other than observed.">

##INFO=<ID=INDEL,Number=0,Type=Flag,Description="Indicates that the variant is an INDEL.">

##INFO=<ID=IDV,Number=1,Type=Integer,Description="Maximum number of raw reads supporting an indel">

##INFO=<ID=IMF,Number=1,Type=Float,Description="Maximum fraction of raw reads supporting an indel">

##INFO=<ID=DP,Number=1,Type=Integer,Description="Raw read depth">

##FORMAT=<ID=PL,Number=G,Type=Integer,Description="List of Phred-scaled genotype likelihoods">

##FORMAT=<ID=DP,Number=1,Type=Integer,Description="Number of high-quality bases">

##FORMAT=<ID=AD,Number=R,Type=Integer,Description="Allelic depths (high-quality bases)">

##INFO=<ID=AD,Number=R,Type=Integer,Description="Total allelic depths (high-quality bases)">

##INFO=<ID=END,Number=1,Type=Integer,Description="End position of the variant described in this record">

##INFO=<ID=MinDP,Number=1,Type=Integer,Description="Minimum per-sample depth in this gVCF block">

##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype">

##FORMAT=<ID=GQ,Number=1,Type=Integer,Description="Phred-scaled Genotype Quality">

##INFO=<ID=DP4,Number=4,Type=Integer,Description="Number of high-quality ref-forward , ref-reverse, alt-forward and alt-reverse bases">

##INFO=<ID=MQ,Number=1,Type=Integer,Description="Average mapping quality">

##bcftools_callCommand=call -mAv -f GQ,GP -p 0.99; Date=Wed Aug 11 21:20:03 2021

##bcftools_normCommand=norm --fasta-ref Mus_musculus.GRCm39.dna.toplevel.fa.gz -m +indels; Date=Fri Aug 13 11:11:49 2021

##FORMAT=<ID=FI,Number=1,Type=Integer,Description="High confidence (1) or low confidence (0) based on soft filtering values">

##FILTER=<ID=LowQual,Description="Low quality variants">

##VEP="v104" time="2021-08-30 23:27:00" cache="mus_musculus/104_GRCm39" ensembl-funcgen=104.59ae779 ensembl-variation=104.6154f8b ensembl=104.1af1dce ensembl-io=104.1d3bb6e assembly="GRCm39" dbSNP="150" gencode="GENCODE M27" regbuild="1.0" sift="sift"

##INFO=<ID=CSQ,Number=.,Type=String,Description="Consequence annotations from Ensembl VEP. Format: Allele|Consequence|IMPACT|SYMBOL|Gene|Feature_type|Feature|BIOTYPE|EXON|INTRON|HGVSc|HGVSp|cDNA_position|CDS_position|Protein_position|Amino_acids|Codons|Existing_variation|DISTANCE|STRAND|FLAGS|VARIANT_CLASS|SYMBOL_SOURCE|HGNC_ID|SIFT|MOTIF_NAME|MOTIF_POS|HIGH_INF_POS|MOTIF_SCORE_CHANGE|TRANSCRIPTION_FACTORS">

##bcftools_viewVersion=1.13+htslib-1.13

##bcftools_viewCommand=view -i 'FORMAT/FI[*] = 1' mgp_REL2021_snps.vcf.gz; Date=Sat Dec 18 19:08:09 2021

##bcftools_annotateVersion=1.13+htslib-1.13

##bcftools_annotateCommand=annotate -x INFO/VDB,INFO/SGB,INFO/RPBZ,INFO/MQBZ,INFO/MQBZ,INFO/MQSBZ,INFO/BQBZ,INFO/SCBZ,INFO/FS,INFO/MQOF,INFO/AC,INFO/AN,FORMAT/SP,FORMAT/ADF,FORMAT/ADR,FORMAT/GP; Date=Sat Dec 18 19:08:09 2021

##INFO=<ID=AC,Number=A,Type=Integer,Description="Allele count in genotypes">

##INFO=<ID=AN,Number=1,Type=Integer,Description="Total number of alleles in called genotypes">

##bcftools_viewCommand=view -a -Oz -o final_mgp_REL2021_snps.vcf.gz; Date=Sat Dec 18 19:08:09 2021

##bcftools_annotateCommand=annotate -x INFO/MQ0F -Oz -o final_mgp_REL2021_snps.vcf.gz mgp_REL2021_snps.vcf.gz; Date=Mon Dec 20 07:12:23 2021

#CHROM POS ID REF ALT QUAL FILTER INFO FORMAT 129P2_OlaHsd 129S1_SvImJ 129S5SvEvBrd A_J AKR_J B10.RIII BALB_cByJ BALB_cJ BTBR_T+_Itpr3tf_J BUB_BnJC3H_HeH C3H_HeJ C57BL_10J C57BL_10SnJ C57BL_6NJ C57BR_cdJ C57L_J C58_J CAST_EiJCBA_J CE_J CZECHII_EiJ DBA_1J DBA_2J FVB_NJ I_LnJ JF1_MsJ KK_HiJ LEWES_EiJ LG_J LP_J MAMy_J MOLF_EiJ NOD_ShiLtJ NON_LtJ NZB_B1NJ NZO_HlLtJ NZW_LacJ PL_J PWK_PhJ QSi3 QSi5 RF_J RIIIS_J SEA_GnJ SJL_J SM_J SPRET_EiJ ST_bJ SWR_J WSB_EiJ ZALENDE_EiJThe VCF header contains information about the file format version, chromosomes, metadata, and tools used to create the VCF. The column names are on the last line of the VCF header and this line contains the strain names. If you had a cross comprised of other strains, you would query the VCF to find their names.

Step-by-Step Pseudo-Reference Generation

In general, most users will not use this strategy. We have included this section to document the steps for advanced users who may want to modify specific steps in the pipeline.

The first step in creating the reference files for GBRS is to create a set of genomes and transcriptomes with SNPs and Indels from other strains inserted into the reference genome.

Setup

We will use the g2gtools container stored in the public reference area on sumner2.

G2GTOOLS=/projects/omics_share/meta/containers/quay.io-jaxcompsci-g2gtools-74926ad.imgWe will also use a tool called samtools to query and modify sequence and annotation files.

SAMTOOLS=/projects/omics_share/meta/containers/quay.io-biocontainers-samtools-1.14--hb421002_0.imgWe need two input files and we will produce one output file per strain.

The first file is the reference genome in FASTA format.

REF_FASTA=/projects/omics_share/mouse/GRCm39/genome/sequence/ensembl/v105/Mus_musculus.GRCm39.dna.primary_assembly.faThe second file is a VCF containing the SNPs of one or more strains. This file should contain the strain(s) that you have in your mouse cross.

VCF_INDELS=/projects/omics_share/mouse/GRCm39/genome/annotation/snps_indels/rel_2112_v8/mgp_REL2021_indels.vcf.gz

VCF_SNPS=/projects/omics_share/mouse/GRCm39/genome/annotation/snps_indels/rel_2112_v8/mgp_REL2021_snps.vcf.gzNext, we need to pass in the name of the strain for which we are creating the VCI file. In this case, we will use DBA/2J. The strain name must exactly match the strain name in the VCF.

STRAIN=DBA_2JThis Challenge is used in above as well in “Creating Pseudo-Reference Genomes and Transcriptomes for Other Strains”.

Challenge 2: Viewing Strain Names in a VCF

There are 50 strains in the VCF SNP file. What are their names? The

strain names are stored in the VCF header. The samtools

container also includes a tool called tabix, which queries

and indexes tab-delimited files. Run the samtools container

and look at the tabix help page. Find a command that will print out the

SNP VCF header and find the strain names.

singularity run ${SAMTOOLS} tabix --helpCall tabix with the ‘–only-header’ option and pass in

the SNP VCF. In this course, we are using long option names. You could

also use ‘-H’ to achieve the same result.

$ singularity run ${SAMTOOLS} tabix --only-header ${VCF_SNPS}OUTPUT

##fileformat=VCFv4.2

##FILTER=<ID=PASS,Description="All filters passed">

##bcftoolsVersion=1.13+htslib-1.13

##bcftoolsCommand=mpileup -f Mus_musculus.GRCm39.dna.toplevel.fa.gz -b samples -g 10 -a FORMAT/DP,FORMAT/AD,FORMAT/ADF,FORMAT/ADR,FORMAT/SP,INFO/AD -E -Q 0 -pm 3 -F 0.25 -d 500

##reference=Mus_musculus.GRCm39.dna.toplevel.fa.gz

##contig=<ID=1,length=195154279>

##contig=<ID=2,length=181755017>

##contig=<ID=3,length=159745316>

##contig=<ID=4,length=156860686>

##contig=<ID=5,length=151758149>

##contig=<ID=6,length=149588044>

##contig=<ID=7,length=144995196>

##contig=<ID=8,length=130127694>

##contig=<ID=9,length=124359700>

##contig=<ID=10,length=130530862>

##contig=<ID=11,length=121973369>

##contig=<ID=12,length=120092757>

##contig=<ID=13,length=120883175>

##contig=<ID=14,length=125139656>

##contig=<ID=15,length=104073951>

##contig=<ID=16,length=98008968>

##contig=<ID=17,length=95294699>

##contig=<ID=18,length=90720763>

##contig=<ID=19,length=61420004>

##contig=<ID=X,length=169476592>

##ALT=<ID=*,Description="Represents allele(s) other than observed.">

##INFO=<ID=INDEL,Number=0,Type=Flag,Description="Indicates that the variant is an INDEL.">

##INFO=<ID=IDV,Number=1,Type=Integer,Description="Maximum number of raw reads supporting an indel">

##INFO=<ID=IMF,Number=1,Type=Float,Description="Maximum fraction of raw reads supporting an indel">

##INFO=<ID=DP,Number=1,Type=Integer,Description="Raw read depth">

##FORMAT=<ID=PL,Number=G,Type=Integer,Description="List of Phred-scaled genotype likelihoods">

##FORMAT=<ID=DP,Number=1,Type=Integer,Description="Number of high-quality bases">

##FORMAT=<ID=AD,Number=R,Type=Integer,Description="Allelic depths (high-quality bases)">

##INFO=<ID=AD,Number=R,Type=Integer,Description="Total allelic depths (high-quality bases)">

##INFO=<ID=END,Number=1,Type=Integer,Description="End position of the variant described in this record">

##INFO=<ID=MinDP,Number=1,Type=Integer,Description="Minimum per-sample depth in this gVCF block">

##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype">

##FORMAT=<ID=GQ,Number=1,Type=Integer,Description="Phred-scaled Genotype Quality">

##INFO=<ID=DP4,Number=4,Type=Integer,Description="Number of high-quality ref-forward , ref-reverse, alt-forward and alt-reverse bases">

##INFO=<ID=MQ,Number=1,Type=Integer,Description="Average mapping quality">

##bcftools_callCommand=call -mAv -f GQ,GP -p 0.99; Date=Wed Aug 11 21:20:03 2021

##bcftools_normCommand=norm --fasta-ref Mus_musculus.GRCm39.dna.toplevel.fa.gz -m +indels; Date=Fri Aug 13 11:11:49 2021

##FORMAT=<ID=FI,Number=1,Type=Integer,Description="High confidence (1) or low confidence (0) based on soft filtering values">

##FILTER=<ID=LowQual,Description="Low quality variants">

##VEP="v104" time="2021-08-30 23:27:00" cache="mus_musculus/104_GRCm39" ensembl-funcgen=104.59ae779 ensembl-variation=104.6154f8b ensembl=104.1af1dce ensembl-io=104.1d3bb6e assembly="GRCm39" dbSNP="150" gencode="GENCODE M27" regbuild="1.0" sift="sift"

##INFO=<ID=CSQ,Number=.,Type=String,Description="Consequence annotations from Ensembl VEP. Format: Allele|Consequence|IMPACT|SYMBOL|Gene|Feature_type|Feature|BIOTYPE|EXON|INTRON|HGVSc|HGVSp|cDNA_position|CDS_position|Protein_position|Amino_acids|Codons|Existing_variation|DISTANCE|STRAND|FLAGS|VARIANT_CLASS|SYMBOL_SOURCE|HGNC_ID|SIFT|MOTIF_NAME|MOTIF_POS|HIGH_INF_POS|MOTIF_SCORE_CHANGE|TRANSCRIPTION_FACTORS">

##bcftools_viewVersion=1.13+htslib-1.13

##bcftools_viewCommand=view -i 'FORMAT/FI[*] = 1' mgp_REL2021_snps.vcf.gz; Date=Sat Dec 18 19:08:09 2021

##bcftools_annotateVersion=1.13+htslib-1.13

##bcftools_annotateCommand=annotate -x INFO/VDB,INFO/SGB,INFO/RPBZ,INFO/MQBZ,INFO/MQBZ,INFO/MQSBZ,INFO/BQBZ,INFO/SCBZ,INFO/FS,INFO/MQOF,INFO/AC,INFO/AN,FORMAT/SP,FORMAT/ADF,FORMAT/ADR,FORMAT/GP; Date=Sat Dec 18 19:08:09 2021

##INFO=<ID=AC,Number=A,Type=Integer,Description="Allele count in genotypes">

##INFO=<ID=AN,Number=1,Type=Integer,Description="Total number of alleles in called genotypes">

##bcftools_viewCommand=view -a -Oz -o final_mgp_REL2021_snps.vcf.gz; Date=Sat Dec 18 19:08:09 2021

##bcftools_annotateCommand=annotate -x INFO/MQ0F -Oz -o final_mgp_REL2021_snps.vcf.gz mgp_REL2021_snps.vcf.gz; Date=Mon Dec 20 07:12:23 2021

#CHROM POS ID REF ALT QUAL FILTER INFO FORMAT 129P2_OlaHsd 129S1_SvImJ 129S5SvEvBrd A_J AKR_J B10.RIII BALB_cByJ BALB_cJ BTBR_T+_Itpr3tf_J BUB_BnJC3H_HeH C3H_HeJ C57BL_10J C57BL_10SnJ C57BL_6NJ C57BR_cdJ C57L_J C58_J CAST_EiJCBA_J CE_J CZECHII_EiJ DBA_1J DBA_2J FVB_NJ I_LnJ JF1_MsJ KK_HiJ LEWES_EiJ LG_J LP_J MAMy_J MOLF_EiJ NOD_ShiLtJ NON_LtJ NZB_B1NJ NZO_HlLtJ NZW_LacJ PL_J PWK_PhJ QSi3 QSi5 RF_J RIIIS_J SEA_GnJ SJL_J SM_J SPRET_EiJ ST_bJ SWR_J WSB_EiJ ZALENDE_EiJ

The VCF header contains information about the file format version, chromosomes, metadata, and tools used to create the VCF. The column names are on the last line of the VCF header and this line contains the strain names. If you had a cross comprised of other strains, you would query the VCF to find their names.

We will work on the sumner2 /flashscratch area. Create a directory with your user ID. Then create a directory called ‘gbrs’. For example:

mkdir -p /flashscratch/dgatti/gbrs/${STRAIN}Then change into the directory that you just created.

cd /flashscratch/dgatti/gbrsLoad in the Singularity software.

module load singularityUse g2gtools vcf2vci to Gather Strain-Specific SNPs and

Indels

In the first step, we insert indels from a specific strain into the

reference genome using the vcf2vci command. This is written

out to a text file in a format called “VCI”.

The arguments are:

$ g2gtools vcf2vci --help

╭─ Options ───────────────────────────────────────────────────────────────────────────────────────────────╮

│ * --vcf -i FILE VCF files can seperate files by "," or have multiple -i [default: None] │

│ [required] │

│ * --fasta -f FILE Fasta file matching VCF information [default: None] [required] │

│ * --strain -s TEXT Name of strain/sample (column in VCF file) [default: None] [required] │

│ --output -o FILE Name of output file [default: None] │

│ --diploid -d Create diploid VCI file │

│ --keep Keep track of VCF lines that could not be converted to VCI file │

│ --pass Use only VCF lines that have a PASS for the filter value │

│ --quality Filter on quality, FI=PASS │

│ --no-bgzip DO NOT compress and index output │

│ --verbose -v INTEGER specify multiple times for more verbose output [default: 0] │

│ --help Show this message and exit. │

╰─────────────────────────────────────────────────────────────────────────────────────────────────────────╯We will use a subset of the arguments, passing in the reference FASTA file, the SNPD and indel VCFs, the strain name to search for in the indel VCF, and the output file path.

Inputs | Argument | Description | |———-|————-| | –fasta | path to the GRCm39 reference FASTA file | | –vcf | path(s) to the Sanger VCFs for SNPs and indels. Both can be passed in. |

Output | Argument | Description | |———-|————-| |

–output | A VCI file, which is a custom file format, akin to a BED

format, which lists the positions and sequences of indels. Note that,

while we list the output file extension as “vci”, g2gtools

will gzip and index the file, so we will need to add the ‘.gz’ extension

when we use the file in downstream commands. |

STRAIN_VCI=${STRAIN}/REF-to-${STRAIN}.vci

singularity run ${G2GTOOLS} g2gtools vcf2vci \

--fasta ${REF_FASTA} \

--vcf ${VCF_INDELS} \

--vcf ${VCF_SNPS} \

--strain ${STRAIN} \

--output ${STRAIN_VCI}Use g2gtools patch to Insert Strain-Specific SNPs into

Reference

Next, we insert SNPs from a specific strain into the reference genome using the ‘patch’ command.

The arguments are:

$ g2gtools patch --help

╭─ Options ───────────────────────────────────────────────────────────────────────────────────────╮

│ * --input -i FILE Fasta file to extract from [default: None] [required] │

│ * --vci -c FILE VCI File to use [default: None] [required] │

│ --output -o FILE Name of output file [default: None] │

│ --bed -b FILE BED file name [default: None] │

│ --region -r TEXT Region to extract in chromosome:start-end format [default: None] │

│ --reverse Reverse the direction of VCI file │

│ --bgzip compress and index output │

│ --verbose -v INTEGER specify multiple times for more verbose output [default: 0] │

│ --help Show this message and exit. │

╰─────────────────────────────────────────────────────────────────────────────────────────────────╯We will use the following arguments:

Inputs | Argument | Description | |———-|————-| |

–input | path to the GRCm39 reference FASTA file | | –vci | path to the

gzipped VCI file created by vcf2vci. |

Output | Argument | Description | |———-|————-| | –output | path to patched FASTA file with SNPs inserted into the reference genome sequence. |

PATCHED_FASTA=${STRAIN}/${STRAIN}.patched.fa

singularity run ${G2GTOOLS} g2gtools patch \

--input ${REF_FASTA} \

--vci ${STRAIN_VCI}.gz \

--output ${PATCHED_FASTA}Use g2gtools transform to Insert Strain-Specific Indels

into Strain FASTA

The ‘transform’ function takes the strain-specific SNP-patched FASTA file, inserts indels from the VCI file, and outputs a FASTA file. This FASTA file contains the inferred sequence of a specific strain.

Next, we insert SNPs from a specific strain into the reference genome using the ‘patch’ command.

The arguments are:

$ g2gtools transform --help

╭─ Options ───────────────────────────────────────────────────────────────────────────────────────╮

│ * --input -i FILE Fasta file to extract from [default: None] [required] │

│ * --vci -c FILE VCI File to use [default: None] [required] │

│ --output -o FILE Name of output file [default: None] │

│ --bed -b FILE BED file name [default: None] │

│ --region -r TEXT Region to extract in chromosome:start-end format [default: None] │

│ --reverse Reverse the direction of VCI file │

│ --bgzip compress and index output │

│ --verbose -v INTEGER specify multiple times for more verbose output [default: 0] │

│ --help Show this message and exit. │

╰─────────────────────────────────────────────────────────────────────────────────────────────────╯We will use the following arguments:

Inputs | Argument | Description | |———-|————-| |

–input | path to the strain-specific patched FASTA file created by

patch. | | –vci | path to the gzipped VCI

file created by vcf2vci. |

Output | Argument | Description | |———-|————-| | –output | path to transformed FASTA file with SNPs and indels inserted into the reference genome sequence. |

We need to create a genome version variable to create informative file names.

GENOME_VERSION=GRCm39Next, we will run the transform command.

STRAIN_FASTA=${STRAIN}/${STRAIN}.${GENOME_VERSION}.fa

singularity run ${G2GTOOLS} g2gtools transform \

--input ${PATCHED_FASTA} \

--vci ${STRAIN_VCI}.gz \

--output ${STRAIN_FASTA}We now have a pseudo-reference genome in the strain-specific FASTA file.

Use samtools faidx to Index the Strain-Specific FASTA

file

This is a step which uses the samtools suite of tools to index the FASTA file.

Remember that we created a variable for the samtools

Singularity container at the top of the lesson.

Then we will use samtools to index the FASTA file.

singularity run ${SAMTOOLS} samtools faidx ${STRAIN_FASTA}Use g2gtools convert to Create a Strain-Specific GTF

File

The convert function takes the strain-specific FASTA

file, the VCI file, and the reference GTF and creates a strain-specific

annotation file in GTF.

The arguments are:

$ g2gtools convert --help

╭─ Options ────────────────────────────────────────────────────────────────────────────────────────────────────────────╮

│ * --input-file -i FILE Input file to convert to new coordinates [default: None] [required] │

│ * --vci-file -c FILE VCI file [default: None] [required] │

│ * --file-format -f [BAM|SAM|GFF|GTF|BED] Input file format [default: None] [required] │

│ --output -o FILE Name of output file [default: None] │

│ --reverse -r Reverse the direction of the conversion │

│ --verbose -v INTEGER specify multiple times for more verbose output [default: 0] │

│ --help Show this message and exit. │

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯We will use the following arguments:

Inputs | Argument | Description | |———-|————-| |

–input-file | path to the reference GTF. | | –vci-file | path to the

gzipped VCI file created by vcf2vci. | |

–file-format | string indicating a GTF file. Lower case since it will be

used as a file name extension. |

Output | Argument | Description | |———-|————-| | –output | path to converted GTF file with SNPs and indels inserted into the reference transcript and coordinates shifted to the strain-specific coordinates. |

Next, we need the path to the reference GTF. We have selected the Ensembl 105 annotation for this lesson.

REF_GTF=/projects/omics_share/mouse/GRCm39/transcriptome/annotation/ensembl/v105/Mus_musculus.GRCm39.105.chr.gtfWe also need to specify the annotation file format in lower case because it will be used as a file name extension.

ANNOT_FORMAT=gtfSTRAIN_GTF=${STRAIN}/${STRAIN}.${GENOME_VERSION}.${ANNOT_FORMAT}

singularity run ${G2GTOOLS} g2gtools convert \

--input-file ${REF_GTF} \

--vci-file ${STRAIN_VCI}.gz \

--file-format ${ANNOT_FORMAT} \

--output ${STRAIN_GTF}We now have two key files that are used by GBRS:

- the strain-specific FASTA file, which contains the strain’s inferred sequence, and

- the strain-specific GTF file, which contains the strain’s inferred annotation.

Challenge 3: Create a set of reference files for another strain in the VCF.

You will need to look back at Challenge 1 to see how to list the strains in the VCFs. You can query either the SNP or Indel VCF. Create an entire script on your compute instance to run the commands above

OUTPUT

[1] "This new lesson looks good"g2gtools transform -i ${STRAIN}/${STRAIN}.patched.fa -c ${STRAIN}/REF-to-${STRAIN}.chain -o ${STRAIN}/${STRAIN}.fa

g2gtools convert -c ${STRAIN}/REF-to-${STRAIN}.chain -i ${GTF} -f gtf -o ${STRAIN}/${STRAIN}.gtf

g2gtools gtf2db -i ${STRAIN}/${STRAIN}.gtf -o ${STRAIN}/${STRAIN}.gtf.db

g2gtools extract --transcripts -i ${STRAIN}/${STRAIN}.fa -db ${STRAIN}/${STRAIN}.gtf.db > ${STRAIN}/${STRAIN}.transcripts.fa